PyTorch implementations of popular NLP Transformers

157.4k

Ultralytics YOLOv5 🚀 for object detection, instance segmentation and image classification.

56.9k

A Robustly Optimized BERT Pretraining Approach

32.2k

Transformer models for English-French and English-German translation.

32.2k

Wide Residual Networks

17.5k

Award-winning ConvNets from the 2014 ImageNet ILSVRC challenge

17.5k

AlexNet-level accuracy with 50x fewer parameters.

17.5k

An efficient ConvNet optimized for speed and memory, pre-trained on ImageNet

17.5k

Next generation ResNets, more efficient and accurate

17.5k

Deep residual networks pre-trained on ImageNet

17.5k

Efficient networks optimized for speed and memory, with residual blocks

17.5k

Also called GoogLeNet v3, a famous ConvNet from 2015 trained on ImageNet

17.5k

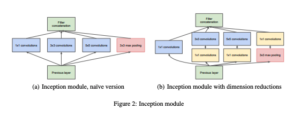

GoogLeNet was based on a deep convolutional neural network architecture codenamed “Inception” which won ImageNet 2014.

17.5k

Fully-Convolutional Network model with ResNet-50 and ResNet-101 backbones

17.5k

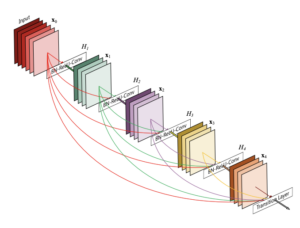

Dense Convolutional Network (DenseNet) connects each layer to every other layer in a feed-forward fashion.

17.5k
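The dense connectivity described above can be made concrete with a little arithmetic. The following is a minimal sketch, assuming the usual DenseNet formulation in which each layer receives the concatenated outputs of all preceding layers and adds a fixed number of feature maps (the growth rate). The function names and the example sizes are illustrative, not part of any library.

```python
from typing import List


def dense_block_connections(num_layers: int) -> int:
    """Count direct connections in a dense block with `num_layers` layers.

    Each layer's output feeds every later layer, and the block input feeds
    every layer, which gives L * (L + 1) / 2 connections in total.
    """
    return num_layers * (num_layers + 1) // 2


def dense_block_input_channels(
    initial_channels: int, growth_rate: int, num_layers: int
) -> List[int]:
    """Input channel count seen by each layer in the block.

    Layer i (0-based) sees the block input plus the `growth_rate` feature
    maps produced by each of the i layers before it.
    """
    return [initial_channels + growth_rate * i for i in range(num_layers)]


if __name__ == "__main__":
    # Hypothetical block: 4 layers, 64 input channels, growth rate 32.
    print(dense_block_connections(4))
    print(dense_block_input_channels(64, 32, 4))
```

Because the input width grows linearly with depth, practical DenseNets keep the growth rate small and compress channels between blocks.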

The 2012 ImageNet winner achieved a top-5 error of 15.3%, more than 10.8 percentage points lower than that of the runner-up.

17.5k
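For readers unfamiliar with the top-5 error metric quoted above, here is a minimal pure-Python sketch of how it is typically computed: a prediction counts as correct if the true label is among the five highest-scoring classes. The function name `top_k_error` and the toy scores are assumptions made for illustration and are not taken from any benchmark.

```python
from typing import Sequence


def top_k_error(
    scores: Sequence[Sequence[float]], labels: Sequence[int], k: int = 5
) -> float:
    """Fraction of samples whose true label is NOT among the k top scores."""
    if len(scores) != len(labels) or not labels:
        raise ValueError("scores and labels must be non-empty and equal in length")
    misses = 0
    for row, label in zip(scores, labels):
        # Indices of the k highest-scoring classes for this sample.
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        if label not in top_k:
            misses += 1
    return misses / len(labels)


if __name__ == "__main__":
    # Toy example with 6 classes and 2 samples (made-up scores).
    toy_scores = [
        [0.1, 0.2, 0.3, 0.4, 0.5, 0.0],  # true class 5 has the lowest score
        [0.1, 0.2, 0.3, 0.4, 0.5, 0.0],  # true class 0 is within the top 5
    ]
    toy_labels = [5, 0]
    print(top_k_error(toy_scores, toy_labels, k=5))
```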

DeepLabV3 models with ResNet-50, ResNet-101 and MobileNet-V3 backbones

17.5k

WaveGlow model for generating speech from mel spectrograms (generated by Tacotron2)

14.7k

The Tacotron 2 model for generating mel spectrograms from text

14.7k

Single Shot MultiBox Detector model for object detection

14.7k

ResNeXt with Squeeze-and-Excitation module added, trained with mixed precision using Tensor Cores.

14.7k

ResNet with bottleneck 3×3 Convolutions substituted by 3×3 Grouped Convolutions, trained with mixed precision using Tensor Cores.

14.7k
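To illustrate why swapping a dense 3×3 convolution for a grouped one matters, the sketch below counts convolution weights with and without groups. It assumes the standard definition of a grouped convolution, where input and output channels are split into `groups` independent slices. The helper name and the channel sizes are illustrative, not taken from the model's code.

```python
def conv_weight_count(
    in_channels: int, out_channels: int, kernel_size: int = 3, groups: int = 1
) -> int:
    """Number of weights (bias excluded) in a 2D convolution.

    With `groups` > 1, each output channel only sees in_channels / groups
    input channels, so the weight count shrinks by a factor of `groups`.
    """
    if in_channels % groups or out_channels % groups:
        raise ValueError("channel counts must be divisible by groups")
    return (in_channels // groups) * out_channels * kernel_size * kernel_size


if __name__ == "__main__":
    # Hypothetical bottleneck width of 128 channels.
    dense = conv_weight_count(128, 128, kernel_size=3, groups=1)
    grouped = conv_weight_count(128, 128, kernel_size=3, groups=32)
    print(dense, grouped, dense // grouped)
```

ResNeXt-style designs typically spend the saved parameters on a wider bottleneck, so the overall budget stays comparable to the plain ResNet.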

ResNet50 model trained with mixed precision using Tensor Cores.

14.7k

EfficientNets are a family of image classification models that achieve state-of-the-art accuracy while being an order of magnitude smaller and faster. Trained with mixed precision using Tensor Cores.

14.7k

The HiFi GAN model for generating waveforms from mel spectrograms

14.7k

GPUNet is a new family of Convolutional Neural Networks designed to max out the performance of NVIDIA GPUs and TensorRT.

14.7k

The FastPitch model for generating mel spectrograms from text

14.7k

Pre-trained Voice Activity Detector

8.4k

A set of compact enterprise-grade pre-trained TTS Models for multiple languages

5.8k

A set of compact enterprise-grade pre-trained STT Models for multiple languages.

5.8k

MiDaS models for computing relative depth from a single image.

5.3k

Brain-inspired Multilayer Perceptron with Spiking Neurons

4.4k

Efficient networks by generating more features from cheap operations

4.4k

X3D networks pretrained on the Kinetics 400 dataset

3.5k

SlowFast networks pretrained on the Kinetics 400 dataset

3.5k

ResNet-style video classification networks pretrained on the Kinetics 400 dataset

3.5k

A new ResNet variant.

3.3k

YOLOP pretrained on the BDD100K dataset

2.2k

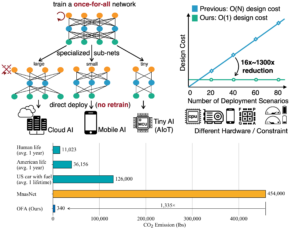

Once-for-all (OFA) decouples training and search, and achieves efficient inference across various edge devices and resource constraints.

1.9k

High-quality image generation of fashion and celebrity faces

1.6k

A simple generative image model for 64×64 images

1.6k

Reference implementation for music source separation

1.5k

Proxylessly specialize CNN architectures for different hardware platforms.

1.4k

Networks with domain/appearance invariance

807

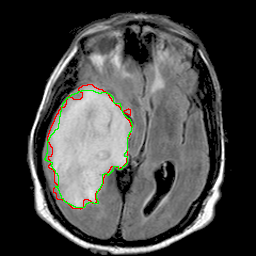

U-Net with batch normalization for biomedical image segmentation with pretrained weights for abnormality segmentation in brain MRI

770

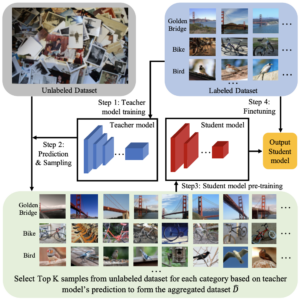

Boosting Tiny and Efficient Models using Knowledge Distillation.

701

HybridNets – End2End Perception Network

664

ResNeXt models trained on billion-scale weakly supervised data.

602

Harmonic DenseNet pre-trained on ImageNet

370

ResNet and ResNeXt models introduced in the “Billion-scale semi-supervised learning for image classification” paper

246

Let's keep it simple: using simple architectures to outperform deeper and more complex architectures

54

Classify birds using this fine-grained image classifier

34