TorchServe

⚠️ Notice: Limited Maintenance

This project is no longer actively maintained. While existing releases remain available, there are no planned updates, bug fixes, new features, or security patches. Users should be aware that vulnerabilities may not be addressed.

TorchServe is a performant, flexible, and easy-to-use tool for serving PyTorch models in production.

What’s going on in TorchServe?

High performance Llama 2 deployments with AWS Inferentia2 using TorchServe

Deploying your Generative AI model in only four steps with Vertex AI and PyTorch

Grokking Intel CPU PyTorch performance from first principles: a TorchServe case study

Grokking Intel CPU PyTorch performance from first principles (Part 2): a TorchServe case study

Case Study: Amazon Ads Uses PyTorch and AWS Inferentia to Scale Models for Ads Processing

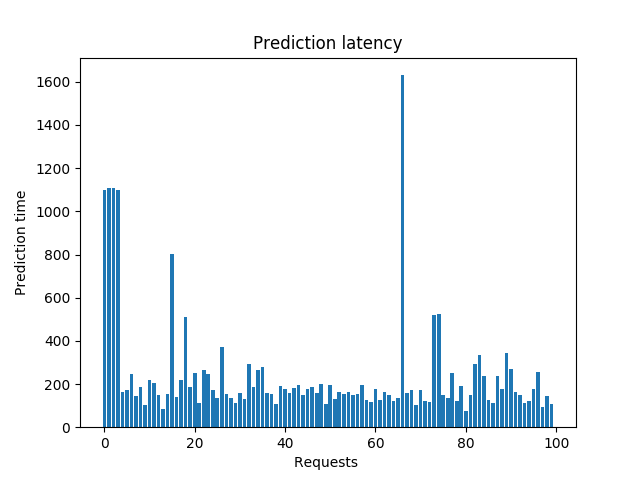

Optimize your inference jobs using dynamic batch inference with TorchServe on Amazon SageMaker

Evolution of Cresta’s machine learning architecture: Migration to AWS and PyTorch