Spectrogram

- class torchaudio.transforms.Spectrogram(n_fft: int = 400, win_length: Optional[int] = None, hop_length: Optional[int] = None, pad: int = 0, window_fn: Callable[..., Tensor] = torch.hann_window, power: Optional[float] = 2.0, normalized: Union[bool, str] = False, wkwargs: Optional[dict] = None, center: bool = True, pad_mode: str = 'reflect', onesided: bool = True, return_complex: Optional[bool] = None)

Create a spectrogram from an audio signal.

- Parameters:

  - n_fft (int, optional) – Size of FFT, creates `n_fft // 2 + 1` bins. (Default: `400`)
  - win_length (int or None, optional) – Window size. (Default: `n_fft`)
  - hop_length (int or None, optional) – Length of hop between STFT windows. (Default: `win_length // 2`)
  - pad (int, optional) – Two-sided padding of signal. (Default: `0`)
  - window_fn (Callable[..., Tensor], optional) – A function to create a window tensor that is applied/multiplied to each frame/window. (Default: `torch.hann_window`)
  - power (float or None, optional) – Exponent for the magnitude spectrogram (must be > 0), e.g., 1 for magnitude, 2 for power, etc. If None, then the complex spectrum is returned instead. (Default: `2`)
  - normalized (bool or str, optional) – Whether to normalize by magnitude after stft. If input is str, choices are `"window"` and `"frame_length"`, if a specific normalization type is desirable. `True` maps to `"window"`. (Default: `False`)
  - wkwargs (dict or None, optional) – Arguments for window function. (Default: `None`)
  - center (bool, optional) – Whether to pad `waveform` on both sides so that the t-th frame is centered at time t × `hop_length`. (Default: `True`)
  - pad_mode (str, optional) – Controls the padding method used when `center` is `True`. (Default: `"reflect"`)
  - onesided (bool, optional) – Controls whether to return half of the results to avoid redundancy. (Default: `True`)
  - return_complex (bool, optional) – Deprecated and not used.
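To make the roles of `n_fft`, `window_fn`, `onesided`, `normalized`, and `power` concrete, here is a minimal pure-Python sketch of what happens to a single frame. It assumes `win_length == n_fft` and the default periodic Hann window, uses a naive DFT in place of `torch.stft`, and follows our reading of torchaudio's normalization (`"window"` divides by the square root of the window's energy, `"frame_length"` by `sqrt(n_fft)`), which is an assumption. `hann_window` and `frame_spectrum` are hypothetical names, not torchaudio functions.

```python
import cmath
import math
from typing import List, Optional, Union


def hann_window(n: int) -> List[float]:
    # Periodic Hann window, like torch.hann_window(n) with its default periodic=True.
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / n) for i in range(n)]


def frame_spectrum(
    frame: List[float],
    power: Optional[float] = 2.0,
    normalized: Union[bool, str] = False,
    onesided: bool = True,
):
    """Hypothetical helper: spectrum of ONE frame, assuming win_length == n_fft."""
    n_fft = len(frame)
    window = hann_window(n_fft)
    windowed = [x * w for x, w in zip(frame, window)]

    # onesided=True keeps only bins 0 .. n_fft // 2 (n_fft // 2 + 1 values);
    # for real input the remaining bins are complex conjugates of these.
    num_bins = n_fft // 2 + 1 if onesided else n_fft
    spectrum = [
        sum(x * cmath.exp(-2j * math.pi * k * t / n_fft) for t, x in enumerate(windowed))
        for k in range(num_bins)
    ]

    # Assumed normalization: True and "window" both mean window normalization.
    if normalized is True or normalized == "window":
        scale = math.sqrt(sum(w * w for w in window))
    elif normalized == "frame_length":
        scale = math.sqrt(n_fft)
    else:
        scale = 1.0
    spectrum = [c / scale for c in spectrum]

    if power is None:
        return spectrum  # complex values, as with power=None
    return [abs(c) ** power for c in spectrum]
```

For a constant frame of ones, the windowed frame is just the Hann window, whose DFT is non-zero only at bin 0 and bin 1 within the one-sided range (4 and -2 for `n_fft = 8`), so the default `power=2` gives `[16, 4, 0, 0, 0]` up to floating-point error.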

- Example

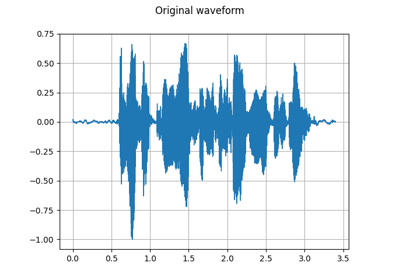

```python
>>> waveform, sample_rate = torchaudio.load("test.wav", normalize=True)
>>> transform = torchaudio.transforms.Spectrogram(n_fft=800)
>>> spectrogram = transform(waveform)
```
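As a rough check on the example above, the size of the output (laid out as `(..., freq, time)`) can be worked out from the parameters alone. The sketch below is a hypothetical helper, not a torchaudio API; it assumes `torch.stft` framing (with `center=True` the signal is padded by `n_fft // 2` on each side) and that `pad` adds that many samples to each side first. The 16 kHz, 1-second clip is also an assumption, since the sample rate of `test.wav` is not given.

```python
from typing import Optional, Tuple


def expected_spectrogram_shape(
    num_samples: int,
    n_fft: int = 400,
    win_length: Optional[int] = None,
    hop_length: Optional[int] = None,
    pad: int = 0,
    center: bool = True,
    onesided: bool = True,
) -> Tuple[int, int]:
    """Hypothetical helper: (freq, time) sizes for a waveform of num_samples."""
    # Documented defaults: win_length -> n_fft, hop_length -> win_length // 2.
    if win_length is None:
        win_length = n_fft
    if hop_length is None:
        hop_length = win_length // 2

    # `pad` adds pad samples to each side before the STFT.
    length = num_samples + 2 * pad
    # center=True pads n_fft // 2 samples on each side (using pad_mode).
    if center:
        length += 2 * (n_fft // 2)
    if length < n_fft:
        raise ValueError("padded signal is shorter than n_fft")

    freq_bins = n_fft // 2 + 1 if onesided else n_fft
    frames = 1 + (length - n_fft) // hop_length
    return freq_bins, frames


# Hypothetical 1-second mono clip at 16 kHz, with n_fft=800 as in the example.
print(expected_spectrogram_shape(16000, n_fft=800))  # (401, 41)
```

With the default `n_fft=400` the same clip would give 201 bins and 81 frames; setting `center=False` drops the edge padding and yields 79 frames instead.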

- Tutorials using

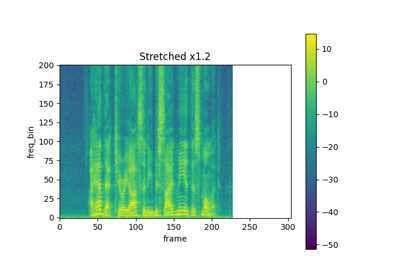

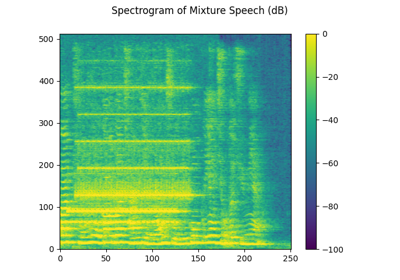

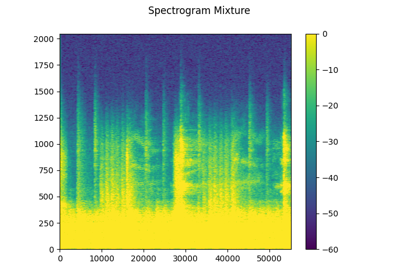

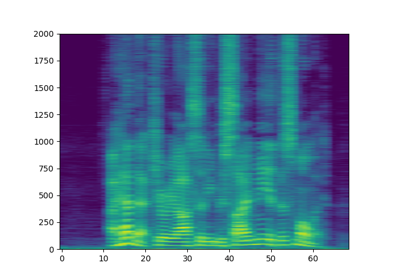

Spectrogram: