WaveRNN

- class torchaudio.models.WaveRNN(upsample_scales: List[int], n_classes: int, hop_length: int, n_res_block: int = 10, n_rnn: int = 512, n_fc: int = 512, kernel_size: int = 5, n_freq: int = 128, n_hidden: int = 128, n_output: int = 128)

WaveRNN model from Efficient Neural Audio Synthesis [Kalchbrenner et al., 2018], based on the implementation from fatchord/WaveRNN.

The waveform and spectrogram inputs must each have exactly one channel, and the product of upsample_scales must equal hop_length.
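The constraint on upsample_scales can be checked before constructing the model. The sketch below multiplies the scales and compares the result with hop_length. The helper name check_upsample_scales is hypothetical and is not part of torchaudio; it only mirrors the rule stated above.

```python
import math
from typing import List


def check_upsample_scales(upsample_scales: List[int], hop_length: int) -> None:
    """Raise ValueError if the scales do not multiply out to hop_length.

    Hypothetical helper for illustration; not a torchaudio function.
    """
    total_scale = math.prod(upsample_scales)
    if total_scale != hop_length:
        raise ValueError(
            f"product of upsample_scales ({total_scale}) "
            f"must equal hop_length ({hop_length})"
        )


# Values used in the Example on this page: 5 * 5 * 8 == 200, so this passes.
check_upsample_scales([5, 5, 8], hop_length=200)
```

Running a check like this first gives a readable error message instead of a shape mismatch later in the forward pass.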

See also

torchaudio.pipelines.Tacotron2TTSBundle: TTS pipeline with pretrained model.

- Parameters:

upsample_scales – the list of upsample scales.

n_classes – the number of output classes.

hop_length – the number of samples between the starts of consecutive frames.

n_res_block – the number of ResBlocks in the stack. (Default: 10)

n_rnn – the dimension of the RNN layer. (Default: 512)

n_fc – the dimension of the fully connected layer. (Default: 512)

kernel_size – the kernel size of the first Conv1d layer. (Default: 5)

n_freq – the number of bins in a spectrogram. (Default: 128)

n_hidden – the number of hidden dimensions of resblock. (Default: 128)

n_output – the number of output dimensions of melresnet. (Default: 128)

- Example

    >>> wavernn = WaveRNN(upsample_scales=[5,5,8], n_classes=512, hop_length=200)
    >>> waveform, sample_rate = torchaudio.load(file)
    >>> # waveform shape: (n_batch, n_channel, (n_time - kernel_size + 1) * hop_length)
    >>> specgram = MelSpectrogram(sample_rate)(waveform)  # shape: (n_batch, n_channel, n_freq, n_time)
    >>> output = wavernn(waveform, specgram)
    >>> # output shape: (n_batch, n_channel, (n_time - kernel_size + 1) * hop_length, n_classes)

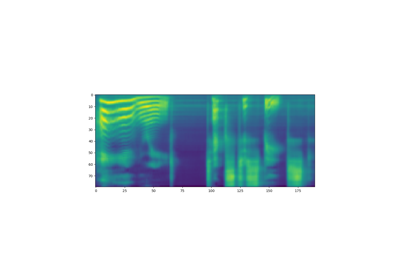

- Tutorials using

WaveRNN:

forward

- WaveRNN.forward(waveform: Tensor, specgram: Tensor) -> Tensor

Pass the input through the WaveRNN model.

- Parameters:

waveform – the input waveform to the WaveRNN layer (n_batch, 1, (n_time - kernel_size + 1) * hop_length)

specgram – the input spectrogram to the WaveRNN layer (n_batch, 1, n_freq, n_time)

- Returns:

The model output. Shape: (n_batch, 1, (n_time - kernel_size + 1) * hop_length, n_classes)

- Return type:

Tensor
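The shape relations for forward can be worked through with plain arithmetic. The following sketch uses a hypothetical helper, wavernn_forward_shapes (not a torchaudio function), and assumes the defaults kernel_size=5 and n_freq=128 from the constructor signature.

```python
from typing import Dict, Tuple


def wavernn_forward_shapes(
    n_batch: int,
    n_time: int,
    hop_length: int,
    n_classes: int,
    kernel_size: int = 5,
    n_freq: int = 128,
) -> Dict[str, Tuple[int, ...]]:
    """Return the documented input and output shapes of WaveRNN.forward.

    Hypothetical helper for illustration; it only evaluates the shape
    formulas given in the parameter descriptions.
    """
    # The first Conv1d layer shortens the spectrogram by kernel_size - 1 frames,
    # and each remaining frame corresponds to hop_length waveform samples.
    n_samples = (n_time - kernel_size + 1) * hop_length
    return {
        "waveform": (n_batch, 1, n_samples),
        "specgram": (n_batch, 1, n_freq, n_time),
        "output": (n_batch, 1, n_samples, n_classes),
    }


shapes = wavernn_forward_shapes(n_batch=2, n_time=20, hop_length=200, n_classes=512)
print(shapes["waveform"])  # (2, 1, 3200)
print(shapes["output"])    # (2, 1, 3200, 512)
```

With 20 spectrogram frames and kernel_size=5, 16 frames remain, so the waveform passed to forward needs 16 * 200 = 3200 samples.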

infer

- WaveRNN.infer(specgram: Tensor, lengths: Optional[Tensor] = None) -> Tuple[Tensor, Optional[Tensor]]

Inference method of WaveRNN.

This function currently only supports multinomial sampling, which assumes the network is trained on cross entropy loss.
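To illustrate what multinomial sampling means here, the sketch below turns a vector of per-class scores into probabilities with a softmax and draws one class index from that distribution. It is a pure-Python stand-in, not torchaudio's implementation. It assumes the scores are unnormalized logits, as a network trained with cross entropy loss would produce, and the function names are hypothetical.

```python
import math
import random
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Convert unnormalized scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_class(logits: List[float], rng: random.Random) -> int:
    """Draw one class index with probability proportional to softmax(logits)."""
    probs = softmax(logits)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


rng = random.Random(0)
logits = [0.1, 2.0, -1.0, 0.5]  # illustrative scores for n_classes = 4
index = sample_class(logits, rng)
print(index)  # one integer in [0, 4), drawn at random
```

Unlike taking the argmax, sampling keeps some randomness in the generated signal, and it relies on the scores being interpretable as a categorical distribution over classes.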

- Parameters:

specgram (Tensor) – Batch of spectrograms. Shape: (n_batch, n_freq, n_time).

lengths (Tensor or None, optional) – Indicates the valid length of each audio in the batch. Shape: (batch, ). When specgram contains spectrograms with different durations, providing the lengths argument lets the model compute the corresponding valid output lengths. If None, all the audio in the batch is assumed to have valid length. Default: None.

- Returns:

- Tensor

The inferred waveform of size (n_batch, 1, n_time). 1 stands for a single channel.

- Tensor or None

If the lengths argument was provided, a Tensor of shape (batch, ) is returned. It indicates the valid length along the time axis of the output Tensor.

- Return type:

(Tensor, Optional[Tensor])
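The relation between the input lengths and the returned valid lengths can be sketched as follows. This is an assumption rather than something stated above: it supposes that each valid spectrogram frame expands to hop_length output samples, which follows from the product of upsample_scales being equal to hop_length. The helper valid_output_lengths is hypothetical and works on plain Python lists rather than Tensors.

```python
from typing import List


def valid_output_lengths(spec_lengths: List[int], hop_length: int) -> List[int]:
    """Map valid frame counts to valid sample counts.

    Assumption for illustration: one spectrogram frame corresponds to
    hop_length waveform samples in the inferred output.
    """
    return [n_frames * hop_length for n_frames in spec_lengths]


# A batch of two spectrograms padded to 10 frames; the second has 7 valid frames.
print(valid_output_lengths([10, 7], hop_length=200))  # [2000, 1400]
```

Samples beyond the valid length of an item come from padding and can be trimmed before saving or playing the audio.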