RReLU

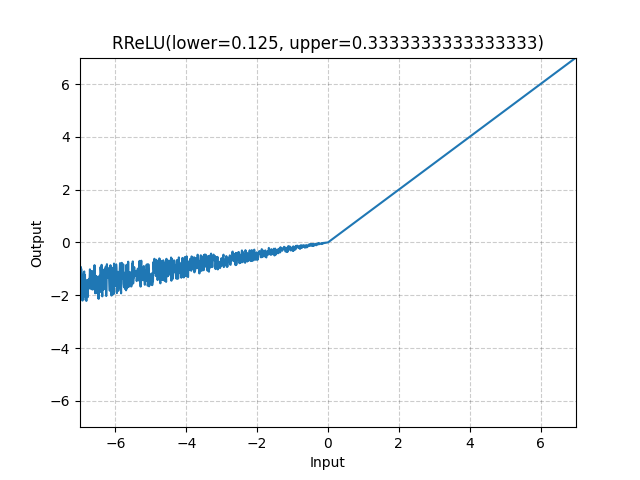

- class torch.nn.RReLU(lower=0.125, upper=0.3333333333333333, inplace=False)

Applies the randomized leaky rectified linear unit function, element-wise.

Method described in the paper: Empirical Evaluation of Rectified Activations in Convolutional Network.

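The function is defined as:

$$
\text{RReLU}(x) =
\begin{cases}
x & \text{if } x \geq 0 \\
ax & \text{otherwise}
\end{cases}
$$

where $a$ is randomly sampled from the uniform distribution $\mathcal{U}(\text{lower}, \text{upper})$ during training, while during evaluation $a$ is fixed at $a = \frac{\text{lower} + \text{upper}}{2}$.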
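The difference between training and evaluation can be checked directly. The following is a minimal sketch, assuming a small hand-picked input tensor and the illustrative bounds `lower=0.1`, `upper=0.3`; the variable names are chosen for this example only.

```python
import torch
import torch.nn as nn

lower, upper = 0.1, 0.3  # illustrative bounds, not the defaults
m = nn.RReLU(lower, upper)
x = torch.tensor([-2.0, -1.0, 0.0, 1.0, 2.0])

# Training mode: each negative element is scaled by its own slope
# sampled from U(lower, upper); non-negative elements pass through.
m.train()
y_train = m(x)
slopes = y_train[x < 0] / x[x < 0]  # recovered per-element slopes

# Evaluation mode: the slope is deterministic, (lower + upper) / 2.
m.eval()
y_eval = m(x)
expected = torch.where(x >= 0, x, x * (lower + upper) / 2)
```

Because the slopes are random in training mode, repeated calls on the same input will generally give different outputs for negative elements; in evaluation mode the output is reproducible.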

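- Parameters
  - lower (float): lower bound of the uniform distribution. Default: 1/8
  - upper (float): upper bound of the uniform distribution. Default: 1/3
  - inplace (bool): can optionally do the operation in-place. Default: False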

- Shape:

Input: $(*)$, where $*$ means any number of dimensions.

Output: $(*)$, same shape as the input.
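As a rough illustration of the shape contract and of the `inplace` argument from the signature, the sketch below applies the module to a few arbitrarily chosen shapes and then runs an in-place variant. The shapes and variable names are assumptions made for this example.

```python
import torch
import torch.nn as nn

m = nn.RReLU()  # defaults: lower=0.125, upper=1/3, inplace=False

# The operation is element-wise, so the output shape matches the input shape.
shapes = [(5,), (2, 3), (4, 3, 8, 8)]
outputs = [m(torch.randn(*s)) for s in shapes]
same_shape = [tuple(out.shape) == s for out, s in zip(outputs, shapes)]

# With inplace=True the input tensor itself is overwritten,
# and the returned tensor shares its memory.
m_inplace = nn.RReLU(inplace=True)
x = torch.tensor([-1.0, 2.0])
y = m_inplace(x)
shares_storage = y.data_ptr() == x.data_ptr()
```

In-place operation saves an allocation but destroys the original input values, so it is only appropriate when the input is not needed afterwards.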

Examples:

>>> import torch
>>> from torch import nn
>>> m = nn.RReLU(0.1, 0.3)
>>> input = torch.randn(2)
>>> output = m(input)