Resample

- class torchaudio.transforms.Resample(orig_freq: int = 16000, new_freq: int = 16000, resampling_method: str = 'sinc_interpolation', lowpass_filter_width: int = 6, rolloff: float = 0.99, beta: Optional[float] = None, *, dtype: Optional[dtype] = None)

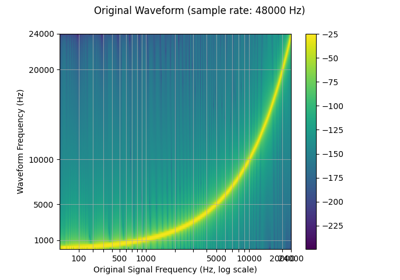

Resample a signal from one frequency to another. A resampling method can be given.

Note

If resampling on waveforms of higher precision than float32, there may be a small loss of precision because the kernel is cached once as float32. If high precision resampling is important for your application, the functional form will retain higher precision, but run slower because it does not cache the kernel. Alternatively, you could rewrite a transform that caches a higher precision kernel.

- Parameters:

orig_freq (int, optional) – The original frequency of the signal. (Default: 16000)

new_freq (int, optional) – The desired frequency. (Default: 16000)

resampling_method (str, optional) – The resampling method to use. Options: [sinc_interpolation, kaiser_window] (Default: "sinc_interpolation")

lowpass_filter_width (int, optional) – Controls the sharpness of the filter, more == sharper but less efficient. (Default: 6)

rolloff (float, optional) – The roll-off frequency of the filter, as a fraction of the Nyquist. Lower values reduce anti-aliasing, but also reduce some of the highest frequencies. (Default: 0.99)

beta (float or None, optional) – The shape parameter used for kaiser window.

dtype (torch.dtype, optional) – Determines the precision in which the resampling kernel is pre-computed and cached. If not provided, the kernel is computed with torch.float64 then cached as torch.float32. If you need higher precision, provide torch.float64, and the kernel is computed and cached as torch.float64. If you use resample with lower precision, then instead of providing this argument, please use Resample.to(dtype), so that the kernel generation is still carried out on torch.float64.
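To make the rolloff parameter concrete, the sketch below converts it into a cutoff frequency in Hz. It assumes the fraction is taken relative to the Nyquist frequency of the lower of the two sample rates, which is our reading of the filter design rather than something stated on this page. The helper name lowpass_cutoff_hz is hypothetical and is not part of torchaudio.

```python
def lowpass_cutoff_hz(orig_freq: int, new_freq: int, rolloff: float = 0.99) -> float:
    """Approximate low-pass cutoff in Hz for a given rolloff.

    Hypothetical helper for illustration only. Assumes the cutoff is a
    fraction of the Nyquist frequency of the lower sample rate.
    """
    nyquist = min(orig_freq, new_freq) / 2
    return rolloff * nyquist


# Downsampling 48 kHz audio to 16 kHz: the lower rate has an 8 kHz Nyquist.
default_cutoff = lowpass_cutoff_hz(48000, 16000)            # rolloff = 0.99
gentler_cutoff = lowpass_cutoff_hz(48000, 16000, rolloff=0.9)

print(f"rolloff=0.99 -> about {default_cutoff:.0f} Hz")
print(f"rolloff=0.90 -> about {gentler_cutoff:.0f} Hz")
```

Under this assumption, lowering rolloff moves the cutoff further below the Nyquist frequency, so more of the top of the spectrum is attenuated.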

- Example

>>> waveform, sample_rate = torchaudio.load("test.wav", normalize=True)
>>> transform = torchaudio.transforms.Resample(sample_rate, sample_rate // 10)
>>> waveform = transform(waveform)

- Tutorials using Resample: