SiLU

- class torch.nn.SiLU(inplace=False)

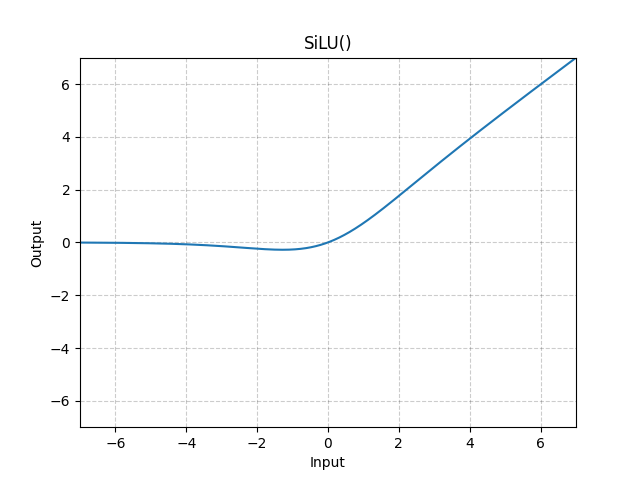

Applies the Sigmoid Linear Unit (SiLU) function, element-wise.

The SiLU function is also known as the swish function. It is defined as silu(x) = x ∗ σ(x), where σ(x) = 1 / (1 + exp(−x)) is the logistic sigmoid.
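As a rough illustration of the element-wise definition silu(x) = x ∗ sigmoid(x), the following plain-Python sketch evaluates the function on a few scalars. The helper name `silu_reference` is hypothetical and not part of PyTorch. It is meant only to show the arithmetic, not how the library computes it.

```python
import math


def silu_reference(x: float) -> float:
    """Hypothetical helper: returns x * sigmoid(x) for a single scalar.

    Uses the identity x * sigmoid(x) == x / (1 + exp(-x)).
    Not numerically robust: math.exp overflows for very negative x.
    """
    return x / (1.0 + math.exp(-x))


# Apply the function element by element, as the module does for tensors.
values = [-2.0, 0.0, 2.0]
outputs = [silu_reference(v) for v in values]
print(outputs)
```

Note that the output is zero at zero, slightly negative for negative inputs, and approaches the input itself for large positive inputs.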

Note

See Gaussian Error Linear Units (GELUs), where the SiLU (Sigmoid Linear Unit) was originally coined, and see Sigmoid-Weighted Linear Units for Neural Network Function Approximation in Reinforcement Learning and Swish: a Self-Gated Activation Function, where the SiLU was experimented with later.

- Shape:

Input: (∗), where ∗ means any number of dimensions.

Output: (∗), same shape as the input.
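The shape contract can be checked with a short sketch like the one below. The input shape `(2, 3, 4)` is an arbitrary choice for illustration; any number of dimensions should behave the same way. The comparison against `x * torch.sigmoid(x)` is a sanity check on the element-wise behaviour, not a statement about how the module is implemented internally.

```python
import torch
from torch import nn

m = nn.SiLU()

# Arbitrary multi-dimensional input, chosen only for illustration.
x = torch.randn(2, 3, 4)
y = m(x)

# The output has the same shape as the input.
print(y.shape == x.shape)

# Each element should match x * sigmoid(x) up to floating-point tolerance.
expected = x * torch.sigmoid(x)
print(torch.allclose(y, expected))
```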

Examples:

>>> import torch
>>> import torch.nn as nn
>>> m = nn.SiLU()
>>> input = torch.randn(2)
>>> output = m(input)