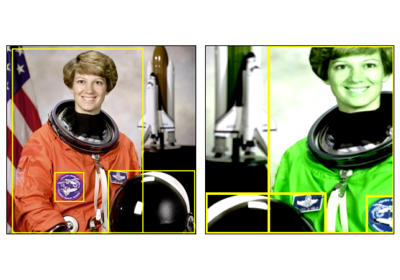

RandomPhotometricDistort

- class torchvision.transforms.v2.RandomPhotometricDistort(brightness: Tuple[float, float] = (0.875, 1.125), contrast: Tuple[float, float] = (0.5, 1.5), saturation: Tuple[float, float] = (0.5, 1.5), hue: Tuple[float, float] = (-0.05, 0.05), p: float = 0.5)

Randomly distorts the image or video as used in SSD: Single Shot MultiBox Detector.

This transform relies on ColorJitter under the hood to adjust the contrast, saturation, hue, and brightness, and it also randomly permutes channels.

- Parameters:

brightness (tuple of float (min, max), optional) – How much to jitter brightness. brightness_factor is chosen uniformly from [min, max]. Should be non-negative numbers.

contrast (tuple of float (min, max), optional) – How much to jitter contrast. contrast_factor is chosen uniformly from [min, max]. Should be non-negative numbers.

saturation (tuple of float (min, max), optional) – How much to jitter saturation. saturation_factor is chosen uniformly from [min, max]. Should be non-negative numbers.

hue (tuple of float (min, max), optional) – How much to jitter hue. hue_factor is chosen uniformly from [min, max]. Should satisfy -0.5 <= min <= max <= 0.5. To jitter hue, the pixel values of the input image have to be non-negative for the conversion to HSV space; thus it does not work if you normalize your image to an interval with negative values, or use an interpolation that generates negative values, before using this function.

p (float, optional) – Probability that each distortion operation (contrast, saturation, ...) is applied. Default is 0.5.

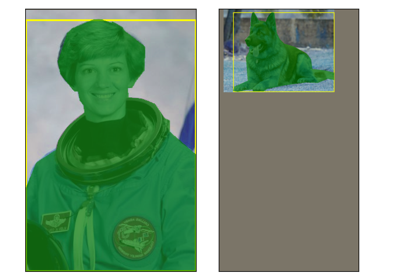

Examples using RandomPhotometricDistort:

Transforms v2: End-to-end object detection/segmentation example