LFCC

- class torchaudio.transforms.LFCC(sample_rate: int = 16000, n_filter: int = 128, f_min: float = 0.0, f_max: Optional[float] = None, n_lfcc: int = 40, dct_type: int = 2, norm: str = 'ortho', log_lf: bool = False, speckwargs: Optional[dict] = None)

Create the linear-frequency cepstrum coefficients from an audio signal.

By default, this calculates the LFCC on the dB-scaled, linearly filtered spectrogram. This is not the textbook implementation, but it is used here for consistency with librosa.

This output depends on the maximum value in the input spectrogram, and so may return different values for an audio clip split into snippets vs. a full clip.
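The dependence on the maximum value can be illustrated without torchaudio. The following is a minimal pure-Python sketch, not the library's code: it assumes the dB scaling floors values at 80 dB below the peak and that the log variant adds a small offset of 1e-6; both constants, the helper names `db_scale` and `log_scale`, and the toy energies are assumptions made for illustration.

```python
import math

TOP_DB = 80.0      # assumed dynamic-range limit relative to the peak
LOG_OFFSET = 1e-6  # assumed offset that keeps log() finite


def db_scale(power, top_db=TOP_DB):
    """Convert power values to dB and floor them at (peak dB - top_db)."""
    db = [10.0 * math.log10(max(p, 1e-10)) for p in power]
    floor = max(db) - top_db
    return [max(v, floor) for v in db]


def log_scale(power, offset=LOG_OFFSET):
    """Natural log with a small offset; independent of the peak value."""
    return [math.log(p + offset) for p in power]


# Toy filter-bank energies: a loud passage followed by a very quiet one.
loud = [1e4, 1e3]
quiet = [1e-6, 1e-7]

full = db_scale(loud + quiet)  # quiet values are clipped to the floor
snippet = db_scale(quiet)      # same values, but no loud peak to clip against

print([round(v, 1) for v in full[2:]])
print([round(v, 1) for v in snippet])
```

In this sketch the quiet values come out differently depending on whether the loud passage is part of the same input, while `log_scale` gives the same result for the quiet values either way. This is the kind of difference to expect between processing a full clip and processing its snippets with the default `log_lf=False`.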

- Parameters:

  - sample_rate (int, optional) – Sample rate of audio signal. (Default: 16000)
  - n_filter (int, optional) – Number of linear filters to apply. (Default: 128)
  - n_lfcc (int, optional) – Number of LFC coefficients to retain. (Default: 40)
  - f_min (float, optional) – Minimum frequency. (Default: 0.0)
  - f_max (float or None, optional) – Maximum frequency. (Default: None)
  - dct_type (int, optional) – Type of DCT (discrete cosine transform) to use. (Default: 2)
  - norm (str, optional) – Norm to use. (Default: "ortho")
  - log_lf (bool, optional) – Whether to use log-lf spectrograms instead of dB-scaled. (Default: False)
  - speckwargs (dict or None, optional) – Arguments for Spectrogram. (Default: None)
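To make the roles of `n_filter`, `n_lfcc`, `dct_type` and `norm` more concrete, here is a minimal pure-Python sketch of an orthonormal DCT-II applied to one frame of filter-bank energies. It is an illustration under stated assumptions, not torchaudio's implementation: the helper `dct_ii_ortho` and the sample values in `frame` are hypothetical.

```python
import math


def dct_ii_ortho(x, n_out):
    """Return the first n_out coefficients of the orthonormal DCT-II of x."""
    n = len(x)
    coeffs = []
    for k in range(n_out):
        s = sum(x[i] * math.cos(math.pi / n * (i + 0.5) * k) for i in range(n))
        # Orthonormal scaling: the k = 0 term uses sqrt(1/n), the rest sqrt(2/n).
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        coeffs.append(scale * s)
    return coeffs


# Eight hypothetical scaled filter-bank energies for one frame (n_filter = 8).
frame = [1.0, 2.0, 3.0, 4.0, 4.0, 3.0, 2.0, 1.0]

# Keep only the first three coefficients (n_lfcc = 3).
lfcc_frame = dct_ii_ortho(frame, n_out=3)
print(len(lfcc_frame))
```

Keeping only the first few coefficients retains the smooth shape of the spectrum across filters and discards fine detail, which is why `n_lfcc` is usually much smaller than `n_filter`.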

- Example

    >>> import torchaudio
    >>> from torchaudio import transforms
    >>> waveform, sample_rate = torchaudio.load("test.wav", normalize=True)
    >>> transform = transforms.LFCC(
    ...     sample_rate=sample_rate,
    ...     n_lfcc=13,
    ...     speckwargs={"n_fft": 400, "hop_length": 160, "center": False},
    ... )
    >>> lfcc = transform(waveform)

See also

torchaudio.functional.linear_fbanks()- The function used to generate the filter banks.- Tutorials using

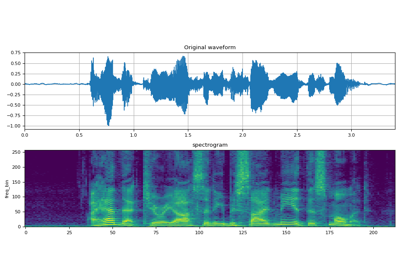

LFCC:
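As a rough picture of what a linear filter bank looks like, the sketch below builds triangular filters whose edges are evenly spaced in Hz between `f_min` and `f_max`. It is a pure-Python illustration and not the code of `linear_fbanks()`: the helper names `linspace` and `linear_filter_bank`, the triangular shape, and the argument values are assumptions chosen for the example.

```python
def linspace(start, stop, num):
    """Return num evenly spaced values from start to stop, inclusive."""
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]


def linear_filter_bank(n_freqs, f_min, f_max, n_filter, sample_rate):
    """Build n_filter triangular filters with linearly spaced edges.

    Returns a list of n_filter rows, each holding n_freqs weights in [0, 1].
    """
    all_freqs = linspace(0.0, sample_rate / 2, n_freqs)  # bin centre frequencies
    f_pts = linspace(f_min, f_max, n_filter + 2)         # filter edges and peaks
    bank = []
    for m in range(n_filter):
        left, center, right = f_pts[m], f_pts[m + 1], f_pts[m + 2]
        row = []
        for f in all_freqs:
            rising = (f - left) / (center - left)
            falling = (right - f) / (right - center)
            row.append(max(0.0, min(rising, falling)))
        bank.append(row)
    return bank


# 201 frequency bins correspond to n_fft = 400; three filters keep the output small.
bank = linear_filter_bank(n_freqs=201, f_min=0.0, f_max=8000.0, n_filter=3, sample_rate=16000)
print(len(bank), len(bank[0]))
```

Each row weights the spectrogram bins around one centre frequency; multiplying a power spectrogram frame by these rows yields the `n_filter` energies that are then scaled and passed through the DCT.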