torchaudio.datasets

All datasets are subclasses of torch.utils.data.Dataset and have __getitem__ and __len__ methods implemented. Hence, they can all be passed to a torch.utils.data.DataLoader, which can load multiple samples in parallel using torch.multiprocessing workers.

For example:

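    import torch
    import torchaudio

    # Download (if needed) and open the YesNo dataset in the current directory.
    yesno_data = torchaudio.datasets.YESNO('.', download=True)

    # args.nThreads is the number of worker processes (e.g. parsed with argparse).
    data_loader = torch.utils.data.DataLoader(yesno_data,
                                              batch_size=1,
                                              shuffle=True,
                                              num_workers=args.nThreads)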
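
The example above uses batch_size=1. With larger batches, clips of different lengths cannot be stacked by the default collate function, so a custom collate_fn is usually needed. The following is a minimal sketch under stated assumptions: ToyAudioDataset and pad_collate are hypothetical names, the dataset yields random (channels, frames) tensors instead of real audio, and zero-padding is only one possible batching strategy.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class ToyAudioDataset(Dataset):
    """Hypothetical stand-in for an audio dataset: random mono clips."""

    def __init__(self, lengths, sample_rate=8000):
        self.lengths = list(lengths)
        self.sample_rate = sample_rate

    def __len__(self):
        return len(self.lengths)

    def __getitem__(self, n):
        # Assumed layout: (channels, frames).
        waveform = torch.randn(1, self.lengths[n])
        return waveform, self.sample_rate


def pad_collate(batch):
    """Zero-pad every waveform to the longest clip in the batch."""
    waveforms, rates = zip(*batch)
    lengths = torch.tensor([w.shape[-1] for w in waveforms])
    padded = torch.zeros(len(waveforms), 1, int(lengths.max()))
    for i, w in enumerate(waveforms):
        padded[i, :, : w.shape[-1]] = w
    return padded, lengths, list(rates)


# num_workers=0 keeps loading in the main process; raise it to load in parallel.
loader = DataLoader(ToyAudioDataset([100, 250, 180, 90]),
                    batch_size=2, shuffle=False, num_workers=0,
                    collate_fn=pad_collate)
batches = list(loader)
```

Returning the original lengths alongside the padded tensor lets downstream code mask out the padding.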

CMUARCTIC

class torchaudio.datasets.CMUARCTIC(root: Union[str, pathlib.Path], url: str = 'aew', folder_in_archive: str = 'ARCTIC', download: bool = False)

Create a Dataset for CMU_ARCTIC.

- Parameters

root (str or Path) – Path to the directory where the dataset is found or downloaded.

url (str, optional) – The URL to download the dataset from, or the type of the dataset to download. Allowed type values are "aew", "ahw", "aup", "awb", "axb", "bdl", "clb", "eey", "fem", "gka", "jmk", "ksp", "ljm", "lnh", "rms", "rxr", "slp" or "slt". (default: "aew")

folder_in_archive (str, optional) – The top-level directory of the dataset. (default: "ARCTIC")

download (bool, optional) – Whether to download the dataset if it is not found at root path. (default: False)

CMUDict

class torchaudio.datasets.CMUDict(root: Union[str, pathlib.Path], exclude_punctuations: bool = True, *, download: bool = False, url: str = 'http://svn.code.sf.net/p/cmusphinx/code/trunk/cmudict/cmudict-0.7b', url_symbols: str = 'http://svn.code.sf.net/p/cmusphinx/code/trunk/cmudict/cmudict-0.7b.symbols')

Create a Dataset for CMU Pronouncing Dictionary (CMUDict).

- Parameters

root (str or Path) – Path to the directory where the dataset is found or downloaded.

exclude_punctuations (bool, optional) – When enabled, exclude the pronunciations of punctuation marks, such as !EXCLAMATION-POINT and #HASH-MARK. (default: True)

download (bool, optional) – Whether to download the dataset if it is not found at root path. (default: False)

url (str, optional) – The URL to download the dictionary from. (default: "http://svn.code.sf.net/p/cmusphinx/code/trunk/cmudict/cmudict-0.7b")

url_symbols (str, optional) – The URL to download the list of symbols from. (default: "http://svn.code.sf.net/p/cmusphinx/code/trunk/cmudict/cmudict-0.7b.symbols")

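As a rough illustration of what exclude_punctuations does, the sketch below parses dictionary lines and drops entries that name punctuation marks. It is not the library's implementation: parse_cmudict is a hypothetical helper, the "WORD PHONEME PHONEME ..." line layout and the ";;;" comment prefix are assumptions, PUNCTUATION_WORDS holds only the two entries named above, and the sample lines are made up for the example.

```python
# Only the two examples named in the parameter description; the real
# dictionary contains more punctuation entries than these.
PUNCTUATION_WORDS = {"!EXCLAMATION-POINT", "#HASH-MARK"}


def parse_cmudict(lines, exclude_punctuations=True):
    """Return (word, phonemes) pairs, optionally skipping punctuation entries."""
    entries = []
    for line in lines:
        line = line.strip()
        if not line or line.startswith(";;;"):
            continue  # skip blank lines and (assumed) comment lines
        word, *phonemes = line.split()
        if exclude_punctuations and word in PUNCTUATION_WORDS:
            continue
        entries.append((word, phonemes))
    return entries


# Illustrative lines in the assumed layout, not copied from the dictionary.
sample = [
    ";;; a comment line",
    "!EXCLAMATION-POINT  EH2 K S",
    "#HASH-MARK  HH AE1 SH",
    "HELLO  HH AH0 L OW1",
]

kept = parse_cmudict(sample)
everything = parse_cmudict(sample, exclude_punctuations=False)
```
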
COMMONVOICE

class torchaudio.datasets.COMMONVOICE(root: Union[str, pathlib.Path], tsv: str = 'train.tsv')

Create a Dataset for CommonVoice.

- Parameters

root (str or Path) – Path to the directory where the dataset is located (where the tsv file is present).

tsv (str, optional) – The name of the tsv file used to construct the metadata, such as "train.tsv", "test.tsv", "dev.tsv", "invalidated.tsv", "validated.tsv" and "other.tsv". (default: "train.tsv")

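The tsv file supplies the metadata for each clip. A minimal sketch of reading such a file with the standard library follows; read_tsv_metadata is a hypothetical helper, and the column names "path" and "sentence" and the two rows are assumptions made for the example rather than a description of how the dataset class parses the file.

```python
import csv
import io


def read_tsv_metadata(handle):
    """Read a tab-separated file and return (header, list of row dicts)."""
    reader = csv.reader(handle, delimiter="\t")
    header = next(reader)
    rows = [dict(zip(header, row)) for row in reader]
    return header, rows


# In-memory stand-in for a file such as train.tsv (contents are made up).
fake_tsv = io.StringIO(
    "path\tsentence\n"
    "clip_0001.mp3\thello world\n"
    "clip_0002.mp3\tgood morning\n"
)

header, rows = read_tsv_metadata(fake_tsv)
```
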
GTZAN

class torchaudio.datasets.GTZAN(root: Union[str, pathlib.Path], url: str = 'http://opihi.cs.uvic.ca/sound/genres.tar.gz', folder_in_archive: str = 'genres', download: bool = False, subset: Optional[str] = None)

Create a Dataset for GTZAN.

Note

Please see http://marsyas.info/downloads/datasets.html if you are planning to use this dataset to publish results.

- Parameters

root (str or Path) – Path to the directory where the dataset is found or downloaded.

url (str, optional) – The URL to download the dataset from. (default: "http://opihi.cs.uvic.ca/sound/genres.tar.gz")

folder_in_archive (str, optional) – The top-level directory of the dataset.

download (bool, optional) – Whether to download the dataset if it is not found at root path. (default: False)

subset (str or None, optional) – Which subset of the dataset to use. One of "training", "validation", "testing" or None. If None, the entire dataset is used. (default: None)

LIBRISPEECH

class torchaudio.datasets.LIBRISPEECH(root: Union[str, pathlib.Path], url: str = 'train-clean-100', folder_in_archive: str = 'LibriSpeech', download: bool = False)

Create a Dataset for LibriSpeech.

- Parameters

root (str or Path) – Path to the directory where the dataset is found or downloaded.

url (str, optional) – The URL to download the dataset from, or the type of the dataset to download. Allowed type values are "dev-clean", "dev-other", "test-clean", "test-other", "train-clean-100", "train-clean-360" and "train-other-500". (default: "train-clean-100")

folder_in_archive (str, optional) – The top-level directory of the dataset. (default: "LibriSpeech")

download (bool, optional) – Whether to download the dataset if it is not found at root path. (default: False)
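
The url argument accepts either a full URL or one of the subset names listed above. One way such a dual-purpose argument could be resolved is sketched below. This is an assumption-laden illustration, not the library's code: resolve_archive is a hypothetical helper, and the base URL and the ".tar.gz" extension are placeholders.

```python
from urllib.parse import urlparse

SUBSETS = {
    "dev-clean", "dev-other", "test-clean", "test-other",
    "train-clean-100", "train-clean-360", "train-other-500",
}


def resolve_archive(url, base_url="https://example.org/librispeech/", ext=".tar.gz"):
    """Map a subset name to an archive URL, or pass a full URL through."""
    if url in SUBSETS:
        # Placeholder base URL and extension, chosen only for this sketch.
        return base_url + url + ext
    if urlparse(url).scheme in ("http", "https"):
        return url
    raise ValueError(f"not a known subset name or an http(s) URL: {url!r}")


from_name = resolve_archive("dev-clean")
from_url = resolve_archive("https://example.org/mirror/custom.tar.gz")
```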

LIBRITTS

class torchaudio.datasets.LIBRITTS(root: Union[str, pathlib.Path], url: str = 'train-clean-100', folder_in_archive: str = 'LibriTTS', download: bool = False)

Create a Dataset for LibriTTS.

- Parameters

root (str or Path) – Path to the directory where the dataset is found or downloaded.

url (str, optional) – The URL to download the dataset from, or the type of the dataset to download. Allowed type values are "dev-clean", "dev-other", "test-clean", "test-other", "train-clean-100", "train-clean-360" and "train-other-500". (default: "train-clean-100")

folder_in_archive (str, optional) – The top-level directory of the dataset. (default: "LibriTTS")

download (bool, optional) – Whether to download the dataset if it is not found at root path. (default: False)

LJSPEECH

class torchaudio.datasets.LJSPEECH(root: Union[str, pathlib.Path], url: str = 'https://data.keithito.com/data/speech/LJSpeech-1.1.tar.bz2', folder_in_archive: str = 'wavs', download: bool = False)

Create a Dataset for LJSpeech-1.1.

- Parameters

root (str or Path) – Path to the directory where the dataset is found or downloaded.

url (str, optional) – The URL to download the dataset from. (default: "https://data.keithito.com/data/speech/LJSpeech-1.1.tar.bz2")

folder_in_archive (str, optional) – The top-level directory of the dataset. (default: "wavs")

download (bool, optional) – Whether to download the dataset if it is not found at root path. (default: False)

SPEECHCOMMANDS

class torchaudio.datasets.SPEECHCOMMANDS(root: Union[str, pathlib.Path], url: str = 'speech_commands_v0.02', folder_in_archive: str = 'SpeechCommands', download: bool = False, subset: Optional[str] = None)

Create a Dataset for Speech Commands.

- Parameters

root (str or Path) – Path to the directory where the dataset is found or downloaded.

url (str, optional) – The URL to download the dataset from, or the type of the dataset to download. Allowed type values are "speech_commands_v0.01" and "speech_commands_v0.02". (default: "speech_commands_v0.02")

folder_in_archive (str, optional) – The top-level directory of the dataset. (default: "SpeechCommands")

download (bool, optional) – Whether to download the dataset if it is not found at root path. (default: False)

subset (str or None, optional) – Select a subset of the dataset [None, "training", "validation", "testing"]. None means the whole dataset. "validation" and "testing" are defined in "validation_list.txt" and "testing_list.txt", respectively, and "training" is the rest. Details for the files "validation_list.txt" and "testing_list.txt" are explained in the README of the dataset and in the introduction of Section 7 of the original paper and its reference 12. The original paper can be found here. (default: None)
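
The subset rule described above ("validation" and "testing" come from the two list files, and "training" is everything else) can be sketched as a set difference. select_subset is a hypothetical helper and the file names are made up; the real class reads the two lists from the dataset directory.

```python
def select_subset(all_files, validation_list, testing_list, subset=None):
    """Pick the files that belong to the requested subset."""
    validation = set(validation_list)
    testing = set(testing_list)
    if subset is None:
        return sorted(all_files)  # the whole dataset
    if subset == "validation":
        return sorted(f for f in all_files if f in validation)
    if subset == "testing":
        return sorted(f for f in all_files if f in testing)
    if subset == "training":
        # Training is defined by exclusion: anything in neither list.
        excluded = validation | testing
        return sorted(f for f in all_files if f not in excluded)
    raise ValueError(f"unknown subset: {subset!r}")


files = ["yes/a.wav", "yes/b.wav", "no/c.wav", "no/d.wav", "up/e.wav"]
val_list = ["yes/b.wav"]    # stand-in for validation_list.txt
test_list = ["no/d.wav"]    # stand-in for testing_list.txt

training = select_subset(files, val_list, test_list, "training")
```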

TEDLIUM

class torchaudio.datasets.TEDLIUM(root: Union[str, pathlib.Path], release: str = 'release1', subset: str = 'train', download: bool = False, audio_ext: str = '.sph')

Create a Dataset for Tedlium. It supports releases 1, 2 and 3.

- Parameters

root (str or Path) – Path to the directory where the dataset is found or downloaded.

release (str, optional) – Release version. Allowed values are "release1", "release2" or "release3". (default: "release1")

subset (str, optional) – The subset of the dataset to use. Valid options are "train", "dev" and "test". (default: "train")

download (bool, optional) – Whether to download the dataset if it is not found at root path. (default: False)

audio_ext (str, optional) – Extension of the audio files. (default: ".sph")

VCTK_092

class torchaudio.datasets.VCTK_092(root: str, mic_id: str = 'mic2', download: bool = False, url: str = 'https://datashare.is.ed.ac.uk/bitstream/handle/10283/3443/VCTK-Corpus-0.92.zip', audio_ext='.flac')

Create VCTK 0.92 Dataset.

- Parameters

root (str) – Root directory where the dataset’s top level directory is found.

mic_id (str, optional) – Microphone ID. Either "mic1" or "mic2". (default: "mic2")

download (bool, optional) – Whether to download the dataset if it is not found at root path. (default: False)

url (str, optional) – The URL to download the dataset from. (default: "https://datashare.is.ed.ac.uk/bitstream/handle/10283/3443/VCTK-Corpus-0.92.zip")

audio_ext (str, optional) – Custom audio extension if the dataset is converted to a non-default audio format.

Note

All the speeches from speaker p315 will be skipped due to the lack of the corresponding text files.

All the speeches from p280 will be skipped for mic_id="mic2" due to the lack of the audio files.

Some of the speeches from speaker p362 will be skipped due to the lack of the audio files.
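
The speaker-level rules in the note can be written as a small predicate. This is only a sketch of the stated behavior: keep_speaker is a hypothetical helper, "p225" is an arbitrary ID used for illustration, and the partial skipping for p362 happens per utterance, so it is not modeled here.

```python
def keep_speaker(speaker_id, mic_id="mic2"):
    """Return False for speakers that the note says are skipped entirely."""
    if speaker_id == "p315":
        return False  # no corresponding text files
    if speaker_id == "p280" and mic_id == "mic2":
        return False  # no audio files for this microphone
    return True


speakers = ["p225", "p280", "p315", "p362"]
kept_mic1 = [s for s in speakers if keep_speaker(s, "mic1")]
kept_mic2 = [s for s in speakers if keep_speaker(s, "mic2")]
```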

DR_VCTK

class torchaudio.datasets.DR_VCTK(root: Union[str, pathlib.Path], subset: str = 'train', *, download: bool = False, url: str = 'https://datashare.ed.ac.uk/bitstream/handle/10283/3038/DR-VCTK.zip')

Create a dataset for Device Recorded VCTK (Small subset version).

- Parameters

root (str or Path) – Root directory where the dataset’s top level directory is found.

subset (str) – The subset to use. Either "train" or "test". (default: "train")

download (bool) – Whether to download the dataset if it is not found at root path. (default: False)

url (str) – The URL to download the dataset from. (default: "https://datashare.ed.ac.uk/bitstream/handle/10283/3038/DR-VCTK.zip")

__getitem__(n: int) → Tuple[torch.Tensor, int, torch.Tensor, int, str, str, str, int]

Load the n-th sample from the dataset.
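
An 8-element tuple is easy to misindex, so it can help to wrap it in a named structure. In the sketch below only the element types come from the signature above; the field names are assumptions about what each position means, DRVCTKSample and as_named are hypothetical, and the placeholder sample uses plain lists where the dataset would return tensors.

```python
from typing import Any, NamedTuple


class DRVCTKSample(NamedTuple):
    # Field names are assumptions; the signature specifies only the types.
    waveform_clean: Any      # torch.Tensor in the real dataset
    sample_rate_clean: int
    waveform_noisy: Any      # torch.Tensor in the real dataset
    sample_rate_noisy: int
    speaker_id: str
    utterance_id: str
    source: str
    channel_id: int


def as_named(sample):
    """Wrap an 8-tuple, as returned by __getitem__, in a DRVCTKSample."""
    if len(sample) != 8:
        raise ValueError(f"expected 8 elements, got {len(sample)}")
    return DRVCTKSample(*sample)


# Placeholder values only; no real data is loaded here.
placeholder = ([0.0], 16000, [0.0], 16000, "p000", "001", "hypothetical_source", 1)
named = as_named(placeholder)
```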

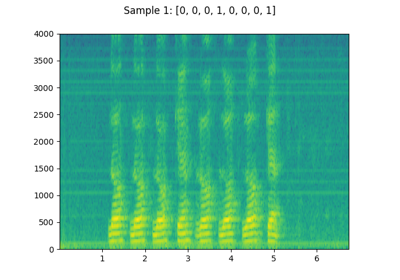

YESNO

class torchaudio.datasets.YESNO(root: Union[str, pathlib.Path], url: str = 'http://www.openslr.org/resources/1/waves_yesno.tar.gz', folder_in_archive: str = 'waves_yesno', download: bool = False)

Create a Dataset for YesNo.

- Parameters

root (str or Path) – Path to the directory where the dataset is found or downloaded.

url (str, optional) – The URL to download the dataset from. (default: "http://www.openslr.org/resources/1/waves_yesno.tar.gz")

folder_in_archive (str, optional) – The top-level directory of the dataset. (default: "waves_yesno")

download (bool, optional) – Whether to download the dataset if it is not found at root path. (default: False)
