torchaudio.models

The models subpackage contains definitions of models for addressing common audio tasks.

Conformer

class torchaudio.models.Conformer(input_dim: int, num_heads: int, ffn_dim: int, num_layers: int, depthwise_conv_kernel_size: int, dropout: float = 0.0)

Implements the Conformer architecture introduced in Conformer: Convolution-augmented Transformer for Speech Recognition [1].

- Parameters

input_dim (int) – input dimension.

num_heads (int) – number of attention heads in each Conformer layer.

ffn_dim (int) – hidden layer dimension of feedforward networks.

num_layers (int) – number of Conformer layers to instantiate.

depthwise_conv_kernel_size (int) – kernel size of each Conformer layer’s depthwise convolution layer.

dropout (float, optional) – dropout probability. (Default: 0.0)

Examples

>>> import torch
>>> from torchaudio.models import Conformer
>>> conformer = Conformer(
...     input_dim=80,
...     num_heads=4,
...     ffn_dim=128,
...     num_layers=4,
...     depthwise_conv_kernel_size=31,
... )
>>> lengths = torch.randint(1, 400, (10,))  # (batch,)
>>> input = torch.rand(10, int(lengths.max()), 80)  # (batch, num_frames, input_dim)
>>> output, output_lengths = conformer(input, lengths)

forward(input: torch.Tensor, lengths: torch.Tensor) → Tuple[torch.Tensor, torch.Tensor]

- Parameters

input (torch.Tensor) – with shape (B, T, input_dim).

lengths (torch.Tensor) – with shape (B,) and i-th element representing number of valid frames for i-th batch element in input.

- Returns

- (torch.Tensor, torch.Tensor)

- torch.Tensor

output frames, with shape (B, T, input_dim)

- torch.Tensor

output lengths, with shape (B,) and i-th element representing number of valid frames for i-th batch element in output frames.
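The lengths tensor implies a padding mask over the (B, T) frame grid: frame t of batch element i is valid when t < lengths[i]. The plain-Python sketch below builds that mask for illustration. The helper name lengths_to_padding_mask is hypothetical here, not a documented torchaudio function; in PyTorch the same comparison is typically written by broadcasting torch.arange(T) against lengths.unsqueeze(1).

```python
from typing import List


def lengths_to_padding_mask(lengths: List[int]) -> List[List[bool]]:
    """Return a (B, T) mask where True marks padded (invalid) frames.

    T is the largest entry in lengths; frame t of batch element i is
    valid when t < lengths[i].
    """
    max_len = max(lengths)
    return [[t >= n for t in range(max_len)] for n in lengths]


mask = lengths_to_padding_mask([3, 1, 2])
for row in mask:
    print(row)
# [False, False, False]
# [False, True, True]
# [False, False, True]
```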

ConvTasNet

class torchaudio.models.ConvTasNet(num_sources: int = 2, enc_kernel_size: int = 16, enc_num_feats: int = 512, msk_kernel_size: int = 3, msk_num_feats: int = 128, msk_num_hidden_feats: int = 512, msk_num_layers: int = 8, msk_num_stacks: int = 3, msk_activate: str = 'sigmoid')

Conv-TasNet: a fully-convolutional time-domain audio separation network, as introduced in Conv-TasNet: Surpassing Ideal Time–Frequency Magnitude Masking for Speech Separation [2].

- Parameters

num_sources (int, optional) – The number of sources to split into. (Default: 2)

enc_kernel_size (int, optional) – The convolution kernel size of the encoder/decoder, <L>. (Default: 16)

enc_num_feats (int, optional) – The feature dimension passed to the mask generator, <N>. (Default: 512)

msk_kernel_size (int, optional) – The convolution kernel size of the mask generator, <P>. (Default: 3)

msk_num_feats (int, optional) – The input/output feature dimension of conv block in the mask generator, <B, Sc>. (Default: 128)

msk_num_hidden_feats (int, optional) – The internal feature dimension of conv block of the mask generator, <H>. (Default: 512)

msk_num_layers (int, optional) – The number of layers in one conv block of the mask generator, <X>. (Default: 8)

msk_num_stacks (int, optional) – The number of conv blocks of the mask generator, <R>. (Default: 3)

msk_activate (str, optional) – The activation function of the mask output. (Default: sigmoid)

Note

This implementation corresponds to the “non-causal” setting in the paper.
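To see how the mask-generator parameters interact, the sketch below estimates the temporal receptive field of the mask generator. It assumes the layout described in the Conv-TasNet paper: within each of the msk_num_stacks stacks, layer x uses a dilation of 2**x, and each dilated convolution with kernel size msk_kernel_size widens the receptive field by (kernel_size - 1) * dilation encoder frames. The conversion to samples further assumes an encoder stride of enc_kernel_size // 2 (50% overlap, as in the paper). Neither assumption is stated in this reference, and both helper names are illustrative.

```python
def tcn_receptive_field(kernel_size: int, num_layers: int, num_stacks: int) -> int:
    """Receptive field, in encoder frames, of stacked dilated 1-D convolutions.

    Assumes layer x of every stack uses dilation 2**x (x = 0 .. num_layers - 1)
    and that the stack is repeated num_stacks times.
    """
    per_stack = sum((kernel_size - 1) * 2 ** x for x in range(num_layers))
    return 1 + num_stacks * per_stack


def frames_to_samples(num_frames: int, enc_kernel_size: int) -> int:
    """Convert encoder frames to input samples, assuming stride = kernel // 2."""
    stride = enc_kernel_size // 2
    return (num_frames - 1) * stride + enc_kernel_size


# Defaults from the signature: msk_kernel_size=3, msk_num_layers=8, msk_num_stacks=3
rf = tcn_receptive_field(kernel_size=3, num_layers=8, num_stacks=3)
print(rf)                                         # 1531
print(frames_to_samples(rf, enc_kernel_size=16))  # 12256
```

Because the dilation doubles at every layer, the receptive field grows exponentially with msk_num_layers but only linearly with msk_num_stacks.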

forward(input: torch.Tensor) → torch.Tensor

Performs source separation, generating one audio waveform per source.

- Parameters

input (torch.Tensor) – 3D Tensor with shape [batch, channel==1, frames]

- Returns

3D Tensor with shape [batch, channel==num_sources, frames]

- Return type

Tensor

DeepSpeech

class torchaudio.models.DeepSpeech(n_feature: int, n_hidden: int = 2048, n_class: int = 40, dropout: float = 0.0)

DeepSpeech model architecture from Deep Speech: Scaling up end-to-end speech recognition [3].

- Parameters

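n_feature (int) – Number of input features.

n_hidden (int, optional) – Internal hidden unit size. (Default: 2048)

n_class (int, optional) – Number of output classes. (Default: 40)

dropout (float, optional) – Dropout probability. (Default: 0.0)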

forward(x: torch.Tensor) → torch.Tensor

- Parameters

x (torch.Tensor) – Tensor of dimension (batch, channel, time, feature).

- Returns

Predictor tensor of dimension (batch, time, class).

- Return type

Tensor

Emformer

class torchaudio.models.Emformer(input_dim: int, num_heads: int, ffn_dim: int, num_layers: int, segment_length: int, dropout: float = 0.0, activation: str = 'relu', left_context_length: int = 0, right_context_length: int = 0, max_memory_size: int = 0, weight_init_scale_strategy: str = 'depthwise', tanh_on_mem: bool = False, negative_inf: float = -100000000.0)

Implements the Emformer architecture introduced in Emformer: Efficient Memory Transformer Based Acoustic Model for Low Latency Streaming Speech Recognition [4].

- Parameters

input_dim (int) – input dimension.

num_heads (int) – number of attention heads in each Emformer layer.

ffn_dim (int) – hidden layer dimension of each Emformer layer’s feedforward network.

num_layers (int) – number of Emformer layers to instantiate.

segment_length (int) – length of each input segment.

dropout (float, optional) – dropout probability. (Default: 0.0)

activation (str, optional) – activation function to use in each Emformer layer’s feedforward network. Must be one of (“relu”, “gelu”, “silu”). (Default: “relu”)

left_context_length (int, optional) – length of left context. (Default: 0)

right_context_length (int, optional) – length of right context. (Default: 0)

max_memory_size (int, optional) – maximum number of memory elements to use. (Default: 0)

weight_init_scale_strategy (str, optional) – per-layer weight initialization scaling strategy. Must be one of (“depthwise”, “constant”, None). (Default: “depthwise”)

tanh_on_mem (bool, optional) – if True, applies tanh to memory elements. (Default: False)

negative_inf (float, optional) – value to use for negative infinity in attention weights. (Default: -1e8)

Examples

>>> import torch
>>> from torchaudio.models import Emformer
>>> emformer = Emformer(512, 8, 2048, 20, 4, right_context_length=1)
>>> input = torch.rand(128, 400, 512)  # batch, num_frames, feature_dim
>>> lengths = torch.randint(1, 200, (128,))  # batch
>>> output, output_lengths = emformer(input, lengths)
>>> input = torch.rand(128, 5, 512)
>>> lengths = torch.ones(128) * 5
>>> output, lengths, states = emformer.infer(input, lengths, None)

forward(input: torch.Tensor, lengths: torch.Tensor) → Tuple[torch.Tensor, torch.Tensor]

Forward pass for training and non-streaming inference.

B: batch size; T: max number of input frames in batch; D: feature dimension of each frame.

- Parameters

input (torch.Tensor) – utterance frames right-padded with right context frames, with shape (B, T + right_context_length, D).

lengths (torch.Tensor) – with shape (B,) and i-th element representing number of valid utterance frames for i-th batch element in input.

- Returns

- Tensor

output frames, with shape (B, T, D).

- Tensor

output lengths, with shape (B,) and i-th element representing number of valid frames for i-th batch element in output frames.

- Return type

(Tensor, Tensor)

infer(input: torch.Tensor, lengths: torch.Tensor, states: Optional[List[List[torch.Tensor]]] = None) → Tuple[torch.Tensor, torch.Tensor, List[List[torch.Tensor]]]

Forward pass for streaming inference.

B: batch size; D: feature dimension of each frame.

- Parameters

input (torch.Tensor) – utterance frames right-padded with right context frames, with shape (B, segment_length + right_context_length, D).

lengths (torch.Tensor) – with shape (B,) and i-th element representing number of valid frames for i-th batch element in input.

states (List[List[torch.Tensor]] or None, optional) – list of lists of tensors representing Emformer internal state generated in preceding invocation of infer. (Default: None)

- Returns

- Tensor

output frames, with shape (B, segment_length, D).

- Tensor

output lengths, with shape (B,) and i-th element representing number of valid frames for i-th batch element in output frames.

- List[List[Tensor]]

output states; list of lists of tensors representing Emformer internal state generated in current invocation of infer.

- Return type

(Tensor, Tensor, List[List[Tensor]])
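Emformer.infer consumes one segment at a time, each followed by right_context_length frames of look-ahead. The sketch below shows one way to cut a long utterance into such chunks in plain Python. It assumes consecutive segments do not overlap (so the look-ahead frames of one chunk are read again as the start of the next segment) and that the final, short chunk is padded; both are choices made for this illustration, and the helper name streaming_chunks is hypothetical.

```python
from typing import Iterator, List, Tuple


def streaming_chunks(
    frames: List[float],
    segment_length: int,
    right_context_length: int,
    pad_value: float = 0.0,
) -> Iterator[Tuple[List[float], int]]:
    """Yield (chunk, num_valid) pairs for segment-by-segment streaming.

    Each chunk holds segment_length frames followed by right_context_length
    look-ahead frames. Consecutive chunks start segment_length frames apart.
    Chunks that run past the end are padded with pad_value, and num_valid
    counts only the real frames.
    """
    chunk_size = segment_length + right_context_length
    for start in range(0, len(frames), segment_length):
        chunk = frames[start : start + chunk_size]
        num_valid = len(chunk)
        yield chunk + [pad_value] * (chunk_size - num_valid), num_valid


# Scalars stand in for D-dimensional frames; segment_length=4, right_context_length=1
frames = [float(t) for t in range(10)]
for chunk, num_valid in streaming_chunks(frames, 4, 1, pad_value=-1.0):
    print(chunk, num_valid)
# [0.0, 1.0, 2.0, 3.0, 4.0] 5
# [4.0, 5.0, 6.0, 7.0, 8.0] 5
# [8.0, 9.0, -1.0, -1.0, -1.0] 2
```

In a streaming loop, each chunk (batched into shape (B, segment_length + right_context_length, D)) would be passed to infer together with the states returned by the previous call, starting from None.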

RNN-T

Model

RNNT

class torchaudio.models.RNNT

Recurrent neural network transducer (RNN-T) model.

Note

To build the model, please use one of the factory functions.

- Parameters

transcriber (torch.nn.Module) – transcription network.

predictor (torch.nn.Module) – prediction network.

joiner (torch.nn.Module) – joint network.

forward(sources: torch.Tensor, source_lengths: torch.Tensor, targets: torch.Tensor, target_lengths: torch.Tensor, predictor_state: Optional[List[List[torch.Tensor]]] = None) → Tuple[torch.Tensor, torch.Tensor, torch.Tensor, List[List[torch.Tensor]]]

Forward pass for training.

B: batch size; T: maximum source sequence length in batch; U: maximum target sequence length in batch; D: feature dimension of each source sequence element.

- Parameters

sources (torch.Tensor) – source frame sequences right-padded with right context, with shape (B, T, D).

source_lengths (torch.Tensor) – with shape (B,) and i-th element representing number of valid frames for i-th batch element in sources.

targets (torch.Tensor) – target sequences, with shape (B, U) and each element mapping to a target symbol.

target_lengths (torch.Tensor) – with shape (B,) and i-th element representing number of valid elements for i-th batch element in targets.

predictor_state (List[List[torch.Tensor]] or None, optional) – list of lists of tensors representing prediction network internal state generated in preceding invocation of forward. (Default: None)

- Returns

- torch.Tensor

joint network output, with shape (B, max output source length, max output target length, output_dim (number of target symbols)).

- torch.Tensor

output source lengths, with shape (B,) and i-th element representing number of valid elements along dim 1 for i-th batch element in joint network output.

- torch.Tensor

output target lengths, with shape (B,) and i-th element representing number of valid elements along dim 2 for i-th batch element in joint network output.

- List[List[torch.Tensor]]

output states; list of lists of tensors representing prediction network internal state generated in current invocation of forward.

- Return type

(torch.Tensor, torch.Tensor, torch.Tensor, List[List[torch.Tensor]])

transcribe_streaming(sources: torch.Tensor, source_lengths: torch.Tensor, state: Optional[List[List[torch.Tensor]]]) → Tuple[torch.Tensor, torch.Tensor, List[List[torch.Tensor]]]

Applies transcription network to sources in streaming mode.

B: batch size; T: maximum source sequence segment length in batch; D: feature dimension of each source sequence frame.

- Parameters

sources (torch.Tensor) – source frame sequence segments right-padded with right context, with shape (B, T + right context length, D).

source_lengths (torch.Tensor) – with shape (B,) and i-th element representing number of valid frames for i-th batch element in sources.

state (List[List[torch.Tensor]] or None) – list of lists of tensors representing transcription network internal state generated in preceding invocation of transcribe_streaming.

- Returns

- torch.Tensor

output frame sequences, with shape (B, T // time_reduction_stride, output_dim).

- torch.Tensor

output lengths, with shape (B,) and i-th element representing number of valid elements for i-th batch element in output.

- List[List[torch.Tensor]]

output states; list of lists of tensors representing transcription network internal state generated in current invocation of transcribe_streaming.

- Return type

(torch.Tensor, torch.Tensor, List[List[torch.Tensor]])
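The output length T // time_reduction_stride reflects a time reduction block that shortens the sequence before the Emformer layers. One common way to build such a block is to stack each group of time_reduction_stride consecutive frames into a single wider frame. The plain-Python sketch below shows that idea for one sequence; treat it as an assumption about how the block behaves rather than torchaudio's exact code, in particular the choice to drop trailing frames and to compute the new valid length with floor division. The helper name time_reduce is hypothetical.

```python
from typing import List, Tuple

Frame = List[float]


def time_reduce(frames: List[Frame], length: int, stride: int) -> Tuple[List[Frame], int]:
    """Stack every `stride` consecutive frames into one wider frame.

    A (T, D) sequence becomes (T // stride, D * stride); trailing frames that
    do not fill a whole group are dropped, and the valid length becomes
    length // stride.
    """
    num_out = len(frames) // stride
    out: List[Frame] = []
    for i in range(num_out):
        stacked: Frame = []
        for frame in frames[i * stride : (i + 1) * stride]:
            stacked.extend(frame)
        out.append(stacked)
    return out, length // stride


frames = [[0.0, 0.1], [1.0, 1.1], [2.0, 2.1], [3.0, 3.1], [4.0, 4.1]]  # T=5, D=2
reduced, new_len = time_reduce(frames, length=5, stride=2)
print(reduced)  # [[0.0, 0.1, 1.0, 1.1], [2.0, 2.1, 3.0, 3.1]]
print(new_len)  # 2
```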

transcribe(sources: torch.Tensor, source_lengths: torch.Tensor) → Tuple[torch.Tensor, torch.Tensor]

Applies transcription network to sources in non-streaming mode.

B: batch size; T: maximum source sequence length in batch; D: feature dimension of each source sequence frame.

- Parameters

sources (torch.Tensor) – source frame sequences right-padded with right context, with shape (B, T + right context length, D).

source_lengths (torch.Tensor) – with shape (B,) and i-th element representing number of valid frames for i-th batch element in sources.

- Returns

- torch.Tensor

output frame sequences, with shape (B, T // time_reduction_stride, output_dim).

- torch.Tensor

output lengths, with shape (B,) and i-th element representing number of valid elements for i-th batch element in output frame sequences.

- Return type

(torch.Tensor, torch.Tensor)

predict(targets: torch.Tensor, target_lengths: torch.Tensor, state: Optional[List[List[torch.Tensor]]]) → Tuple[torch.Tensor, torch.Tensor, List[List[torch.Tensor]]]

Applies prediction network to targets.

B: batch size; U: maximum target sequence length in batch; D: feature dimension of each target sequence frame.

- Parameters

targets (torch.Tensor) – target sequences, with shape (B, U) and each element mapping to a target symbol, i.e. in range [0, num_symbols).

target_lengths (torch.Tensor) – with shape (B,) and i-th element representing number of valid elements for i-th batch element in targets.

state (List[List[torch.Tensor]] or None) – list of lists of tensors representing internal state generated in preceding invocation of predict.

- Returns

- torch.Tensor

output frame sequences, with shape (B, U, output_dim).

- torch.Tensor

output lengths, with shape (B,) and i-th element representing number of valid elements for i-th batch element in output.

- List[List[torch.Tensor]]

output states; list of lists of tensors representing internal state generated in current invocation of predict.

- Return type

(torch.Tensor, torch.Tensor, List[List[torch.Tensor]])

join(source_encodings: torch.Tensor, source_lengths: torch.Tensor, target_encodings: torch.Tensor, target_lengths: torch.Tensor) → Tuple[torch.Tensor, torch.Tensor, torch.Tensor]

Applies joint network to source and target encodings.

B: batch size; T: maximum source sequence length in batch; U: maximum target sequence length in batch; D: dimension of each source and target sequence encoding.

- Parameters

source_encodings (torch.Tensor) – source encoding sequences, with shape (B, T, D).

source_lengths (torch.Tensor) – with shape (B,) and i-th element representing valid sequence length of i-th batch element in source_encodings.

target_encodings (torch.Tensor) – target encoding sequences, with shape (B, U, D).

target_lengths (torch.Tensor) – with shape (B,) and i-th element representing valid sequence length of i-th batch element in target_encodings.

- Returns

- torch.Tensor

joint network output, with shape (B, T, U, output_dim).

- torch.Tensor

output source lengths, with shape (B,) and i-th element representing number of valid elements along dim 1 for i-th batch element in joint network output.

- torch.Tensor

output target lengths, with shape (B,) and i-th element representing number of valid elements along dim 2 for i-th batch element in joint network output.

- Return type

(torch.Tensor, torch.Tensor, torch.Tensor)
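The joint network output is 4-D, (B, T, U, output_dim), because every source position is paired with every target position. The sketch below shows that pairing for a single batch element, assuming an additive combination of the two encodings (a common RNN-T joiner design); the activation and the final projection to output_dim are omitted, and additive_join is a hypothetical name.

```python
from typing import List

Vector = List[float]


def additive_join(source: List[Vector], target: List[Vector]) -> List[List[Vector]]:
    """Combine T source encodings and U target encodings into a T x U grid.

    Entry [t][u] is the elementwise sum source[t] + target[u]; this is the
    pure-Python analogue of broadcasting (T, 1, D) + (1, U, D) -> (T, U, D).
    """
    return [[[s + g for s, g in zip(src, tgt)] for tgt in target] for src in source]


grid = additive_join([[1.0, 0.0], [2.0, 0.0], [3.0, 0.0]], [[0.0, 10.0], [0.0, 20.0]])
print(len(grid), len(grid[0]), len(grid[0][0]))  # 3 2 2
print(grid[2][1])                                # [3.0, 20.0]
```

Since memory grows with T × U, the joint output is usually the largest tensor produced during RNN-T training.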

Factory Functions

emformer_rnnt_model

torchaudio.models.emformer_rnnt_model(*, input_dim: int, encoding_dim: int, num_symbols: int, segment_length: int, right_context_length: int, time_reduction_input_dim: int, time_reduction_stride: int, transformer_num_heads: int, transformer_ffn_dim: int, transformer_num_layers: int, transformer_dropout: float, transformer_activation: str, transformer_left_context_length: int, transformer_max_memory_size: int, transformer_weight_init_scale_strategy: str, transformer_tanh_on_mem: bool, symbol_embedding_dim: int, num_lstm_layers: int, lstm_layer_norm: bool, lstm_layer_norm_epsilon: float, lstm_dropout: float) → torchaudio.models.rnnt.RNNT

Builds Emformer-based recurrent neural network transducer (RNN-T) model.

Note

For non-streaming inference, transcribe is expected to be called on input sequences right-concatenated with right_context_length frames.

For streaming inference, transcribe_streaming is expected to be called on input chunks comprising segment_length frames right-concatenated with right_context_length frames.

- Parameters

input_dim (int) – dimension of input sequence frames passed to transcription network.

encoding_dim (int) – dimension of transcription- and prediction-network-generated encodings passed to joint network.

num_symbols (int) – cardinality of set of target tokens.

segment_length (int) – length of input segment expressed as number of frames.

right_context_length (int) – length of right context expressed as number of frames.

time_reduction_input_dim (int) – dimension to scale each element in input sequences to prior to applying time reduction block.

time_reduction_stride (int) – factor by which to reduce length of input sequence.

transformer_num_heads (int) – number of attention heads in each Emformer layer.

transformer_ffn_dim (int) – hidden layer dimension of each Emformer layer’s feedforward network.

transformer_num_layers (int) – number of Emformer layers to instantiate.

transformer_left_context_length (int) – length of left context considered by Emformer.

transformer_dropout (float) – Emformer dropout probability.

transformer_activation (str) – activation function to use in each Emformer layer’s feedforward network. Must be one of (“relu”, “gelu”, “silu”).

transformer_max_memory_size (int) – maximum number of memory elements to use.

transformer_weight_init_scale_strategy (str) – per-layer weight initialization scaling strategy. Must be one of (“depthwise”, “constant”, None).

transformer_tanh_on_mem (bool) – if True, applies tanh to memory elements.

symbol_embedding_dim (int) – dimension of each target token embedding.

num_lstm_layers (int) – number of LSTM layers to instantiate.

lstm_layer_norm (bool) – if True, enables layer normalization for LSTM layers.

lstm_layer_norm_epsilon (float) – value of epsilon to use in LSTM layer normalization layers.

lstm_dropout (float) – LSTM dropout probability.

- Returns

Emformer RNN-T model.

- Return type

RNNT

Decoder

RNNTBeamSearch

class torchaudio.models.RNNTBeamSearch(model: torchaudio.models.rnnt.RNNT, blank: int, temperature: float = 1.0, hypo_sort_key: Optional[Callable[[torchaudio.models.rnnt_decoder.Hypothesis], float]] = None, step_max_tokens: int = 100)

Beam search decoder for RNN-T model.

- Parameters

model (RNNT) – RNN-T model to use.

blank (int) – index of blank token in vocabulary.

temperature (float, optional) – temperature to apply to joint network output. Larger values yield more uniform samples. (Default: 1.0)

hypo_sort_key (Callable[[Hypothesis], float] or None, optional) – callable that computes a score for a given hypothesis to rank hypotheses by. If None, defaults to callable that returns hypothesis score normalized by token sequence length. (Default: None)

step_max_tokens (int, optional) – maximum number of tokens to emit per input time step. (Default: 100)
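The temperature parameter rescales the joint network output before it is normalized into probabilities. Assuming it is applied in the usual way, as softmax(logits / temperature), the sketch below shows why larger values yield more uniform distributions. The function name softmax_with_temperature is illustrative only.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Softmax of logits / temperature, shifted by the max for numerical stability."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.0]
sharp = softmax_with_temperature(logits, temperature=1.0)
flat = softmax_with_temperature(logits, temperature=10.0)
print([round(p, 3) for p in sharp])  # peaked on the first symbol
print([round(p, 3) for p in flat])   # close to uniform
```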

forward(input: torch.Tensor, length: torch.Tensor, beam_width: int) → List[torchaudio.models.rnnt_decoder.Hypothesis]

Performs beam search for the given input sequence.

T: number of frames; D: feature dimension of each frame.

- Parameters

input (torch.Tensor) – sequence of input frames, with shape (T, D) or (1, T, D).

length (torch.Tensor) – number of valid frames in input sequence, with shape () or (1,).

beam_width (int) – beam size to use during search.

- Returns

top-beam_width hypotheses found by beam search.

- Return type

List[Hypothesis]

infer(input: torch.Tensor, length: torch.Tensor, beam_width: int, state: Optional[List[List[torch.Tensor]]] = None, hypothesis: Optional[torchaudio.models.rnnt_decoder.Hypothesis] = None) → Tuple[List[torchaudio.models.rnnt_decoder.Hypothesis], List[List[torch.Tensor]]]

Performs beam search for the given input sequence in streaming mode.

T: number of frames; D: feature dimension of each frame.

- Parameters

input (torch.Tensor) – sequence of input frames, with shape (T, D) or (1, T, D).

length (torch.Tensor) – number of valid frames in input sequence, with shape () or (1,).

beam_width (int) – beam size to use during search.

state (List[List[torch.Tensor]] or None, optional) – list of lists of tensors representing transcription network internal state generated in preceding invocation. (Default: None)

hypothesis (Hypothesis or None) – hypothesis from preceding invocation to seed search with. (Default: None)

- Returns

- List[Hypothesis]

top-beam_width hypotheses found by beam search.

- List[List[torch.Tensor]]

list of lists of tensors representing transcription network internal state generated in current invocation.

- Return type

(List[Hypothesis], List[List[torch.Tensor]])

Hypothesis

class torchaudio.models.Hypothesis(tokens: List[int], predictor_out: torch.Tensor, state: List[List[torch.Tensor]], score: float, alignment: List[int], blank: int, key: str)

Represents hypothesis generated by beam search decoder RNNTBeamSearch.

- Variables

tokens (List[int]) – Predicted sequence of tokens.

predictor_out (torch.Tensor) – Prediction network output.

state (List[List[torch.Tensor]]) – Prediction network internal state.

score (float) – Score of hypothesis.

alignment (List[int]) – Sequence of timesteps, with the i-th value mapping to the i-th predicted token in tokens.

blank (int) – Token index corresponding to blank token.

key (str) – Value used to determine equivalence in token sequences between Hypothesis instances.
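When hypotheses are ranked by raw score, and assuming the score is a sum of log-probabilities, shorter token sequences are favored because each emitted token typically adds a negative term. The default hypo_sort_key of RNNTBeamSearch counters this by normalizing the score by token sequence length. The sketch below illustrates the idea with a hypothetical Hypo stand-in that carries only tokens and score; the exact normalization torchaudio applies (for instance, how the length is counted) may differ, so treat this as an illustration rather than the library default.

```python
from typing import List, NamedTuple


class Hypo(NamedTuple):
    """Hypothetical stand-in holding only the fields the sort key reads."""

    tokens: List[int]
    score: float  # assumed: total log-probability, more negative for longer outputs


def length_normalized_score(hypo: Hypo) -> float:
    """Score divided by the number of tokens; guards against empty token lists."""
    return hypo.score / max(len(hypo.tokens), 1)


hypos = [
    Hypo(tokens=[0, 5, 9], score=-3.0),            # -1.0 per token
    Hypo(tokens=[0, 5, 9, 12, 7, 3], score=-4.8),  # about -0.8 per token
]
best_raw = max(hypos, key=lambda h: h.score)
best_norm = max(hypos, key=length_normalized_score)
print(len(best_raw.tokens), len(best_norm.tokens))  # 3 6
```

A callable with this shape, reading only score and tokens, is the kind of function that could be supplied as hypo_sort_key.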

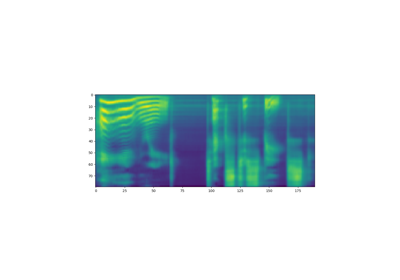

Tacotron2

class torchaudio.models.Tacotron2(mask_padding: bool = False, n_mels: int = 80, n_symbol: int = 148, n_frames_per_step: int = 1, symbol_embedding_dim: int = 512, encoder_embedding_dim: int = 512, encoder_n_convolution: int = 3, encoder_kernel_size: int = 5, decoder_rnn_dim: int = 1024, decoder_max_step: int = 2000, decoder_dropout: float = 0.1, decoder_early_stopping: bool = True, attention_rnn_dim: int = 1024, attention_hidden_dim: int = 128, attention_location_n_filter: int = 32, attention_location_kernel_size: int = 31, attention_dropout: float = 0.1, prenet_dim: int = 256, postnet_n_convolution: int = 5, postnet_kernel_size: int = 5, postnet_embedding_dim: int = 512, gate_threshold: float = 0.5)

Tacotron2 model based on the implementation from Nvidia.

The original implementation was introduced in Natural TTS Synthesis by Conditioning WaveNet on Mel Spectrogram Predictions [5].

- Parameters

mask_padding (bool, optional) – Use mask padding (Default: False).

n_mels (int, optional) – Number of mel bins (Default: 80).

n_symbol (int, optional) – Number of symbols for the input text (Default: 148).

n_frames_per_step (int, optional) – Number of frames processed per step, only 1 is supported (Default: 1).

symbol_embedding_dim (int, optional) – Input embedding dimension (Default: 512).

encoder_n_convolution (int, optional) – Number of encoder convolutions (Default: 3).

encoder_kernel_size (int, optional) – Encoder kernel size (Default: 5).

encoder_embedding_dim (int, optional) – Encoder embedding dimension (Default: 512).

decoder_rnn_dim (int, optional) – Number of units in decoder LSTM (Default: 1024).

decoder_max_step (int, optional) – Maximum number of decoder steps, i.e. the maximum number of output mel spectrogram frames (Default: 2000).

decoder_dropout (float, optional) – Dropout probability for decoder LSTM (Default: 0.1).

decoder_early_stopping (bool, optional) – If True, stop decoding as soon as all samples in the batch are finished; otherwise, keep decoding up to decoder_max_step steps (Default: True).

attention_rnn_dim (int, optional) – Number of units in attention LSTM (Default: 1024).

attention_hidden_dim (int, optional) – Dimension of attention hidden representation (Default: 128).

attention_location_n_filter (int, optional) – Number of filters for attention model (Default: 32).

attention_location_kernel_size (int, optional) – Kernel size for attention model (Default: 31).

attention_dropout (float, optional) – Dropout probability for attention LSTM (Default: 0.1).

prenet_dim (int, optional) – Number of ReLU units in prenet layers (Default: 256).

postnet_n_convolution (int, optional) – Number of postnet convolutions (Default: 5).

postnet_kernel_size (int, optional) – Postnet kernel size (Default: 5).

postnet_embedding_dim (int, optional) – Postnet embedding dimension (Default: 512).

gate_threshold (float, optional) – Probability threshold for stop token (Default: 0.5).
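At inference time, gate_threshold and decoder_max_step together decide when decoding ends for an utterance. The sketch below models that rule for a single utterance. It assumes decoding stops at the first step whose stop-token probability, sigmoid(gate logit), exceeds gate_threshold, with the triggering frame still counted, and otherwise runs for decoder_max_step steps. This is a simplification for illustration; stop_step is a hypothetical helper and the gate logits are arbitrary example inputs.

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    # Numerically stable logistic function
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def stop_step(gate_logits: List[float], gate_threshold: float = 0.5, max_step: int = 2000) -> int:
    """Number of decoder steps run before stopping, for one utterance.

    Decoding halts at the first step whose stop probability exceeds
    gate_threshold (that step's frame is kept), or after max_step steps.
    """
    for step, logit in enumerate(gate_logits[:max_step]):
        if sigmoid(logit) > gate_threshold:
            return step + 1
    # Gate never fired: either max_step was reached or we ran out of simulated steps
    return min(len(gate_logits), max_step)


gate_logits = [-4.0, -3.0, -1.0, 0.5, 2.0]
print(stop_step(gate_logits))                      # 4
print(stop_step(gate_logits, gate_threshold=0.9))  # 5
print(stop_step(gate_logits, max_step=2))          # 2
```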


forward(tokens: torch.Tensor, token_lengths: torch.Tensor, mel_specgram: torch.Tensor, mel_specgram_lengths: torch.Tensor) → Tuple[torch.Tensor, torch.Tensor, torch.Tensor, torch.Tensor]

Pass the input through the Tacotron2 model. This is in teacher forcing mode, which is generally used for training.

The input tokens should be padded with zeros to length max of token_lengths. The input mel_specgram should be padded with zeros to length max of mel_specgram_lengths.

- Parameters

tokens (Tensor) – The input tokens to Tacotron2 with shape (n_batch, max of token_lengths).

token_lengths (Tensor) – The valid length of each sample in tokens with shape (n_batch, ).

mel_specgram (Tensor) – The target mel spectrogram with shape (n_batch, n_mels, max of mel_specgram_lengths).

mel_specgram_lengths (Tensor) – The length of each mel spectrogram with shape (n_batch, ).

- Returns

- Tensor

Mel spectrogram before Postnet with shape (n_batch, n_mels, max of mel_specgram_lengths).

- Tensor

Mel spectrogram after Postnet with shape (n_batch, n_mels, max of mel_specgram_lengths).

- Tensor

The output for stop token at each time step with shape (n_batch, max of mel_specgram_lengths).

- Tensor

Sequence of attention weights from the decoder with shape (n_batch, max of mel_specgram_lengths, max of token_lengths).

- Return type

(Tensor, Tensor, Tensor, Tensor)
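Both forward and infer expect zero-padded token batches together with their valid lengths. A minimal plain-Python sketch of preparing tokens and token_lengths is shown below; pad_batch is a hypothetical helper, the token IDs are arbitrary, and the nested lists would still need to be converted to tensors (for example with torch.tensor) before being passed to the model. The example batch happens to be ordered from longest to shortest; this reference does not state whether such ordering is required.

```python
from typing import List, Tuple


def pad_batch(sequences: List[List[int]], pad_value: int = 0) -> Tuple[List[List[int]], List[int]]:
    """Right-pad token sequences to the longest one and return their lengths."""
    lengths = [len(seq) for seq in sequences]
    max_len = max(lengths)
    padded = [seq + [pad_value] * (max_len - len(seq)) for seq in sequences]
    return padded, lengths


tokens, token_lengths = pad_batch([[12, 7, 33, 5], [40, 41, 42], [9, 2]])
print(tokens)         # [[12, 7, 33, 5], [40, 41, 42, 0], [9, 2, 0, 0]]
print(token_lengths)  # [4, 3, 2]
```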

infer(tokens: torch.Tensor, lengths: Optional[torch.Tensor] = None) → Tuple[torch.Tensor, torch.Tensor, torch.Tensor]

Using Tacotron2 for inference. The input is a batch of encoded sentences (tokens) and their corresponding lengths (lengths). The output is the generated mel spectrograms, their corresponding lengths, and the attention weights from the decoder.

The input tokens should be padded with zeros to length max of lengths.

- Parameters

tokens (Tensor) – The input tokens to Tacotron2 with shape (n_batch, max of lengths).

lengths (Tensor or None, optional) – The valid length of each sample in tokens with shape (n_batch, ). If None, it is assumed that all the tokens are valid. (Default: None)

- Returns

- Tensor

The predicted mel spectrogram with shape (n_batch, n_mels, max of mel_specgram_lengths).

- Tensor

The length of the predicted mel spectrogram with shape (n_batch, ).

- Tensor

Sequence of attention weights from the decoder with shape (n_batch, max of mel_specgram_lengths, max of lengths).

- Return type

(Tensor, Tensor, Tensor)

Wav2Letter

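class torchaudio.models.Wav2Letter(num_classes: int = 40, input_type: str = 'waveform', num_features: int = 1)

Wav2Letter model architecture from Wav2Letter: an End-to-End ConvNet-based Speech Recognition System [6].

- Parameters

num_classes (int, optional) – Number of output classes. (Default: 40)

input_type (str, optional) – Type of input the model receives: "waveform", "power_spectrum", or "mfcc". (Default: "waveform")

num_features (int, optional) – Number of input features the network receives. (Default: 1)

forward(x: torch.Tensor) → torch.Tensor

- Parameters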

x (torch.Tensor) – Tensor of dimension (batch_size, num_features, input_length).

- Returns

Predictor tensor of dimension (batch_size, number_of_classes, input_length).

- Return type

Tensor

Wav2Vec2.0 / HuBERT

Model

Wav2Vec2Model

class torchaudio.models.Wav2Vec2Model(feature_extractor: torch.nn.Module, encoder: torch.nn.Module, aux: Optional[torch.nn.Module] = None)

Encoder model used in wav2vec 2.0 [7].

Note

To build the model, please use one of the factory functions.

- Parameters

feature_extractor (torch.nn.Module) – Feature extractor that extracts feature vectors from raw audio Tensor.

encoder (torch.nn.Module) – Encoder that converts the audio features into the sequence of probability distributions (in negative log-likelihood) over labels.

aux (torch.nn.Module or None, optional) – Auxiliary module. If provided, the output from encoder is passed to this module.

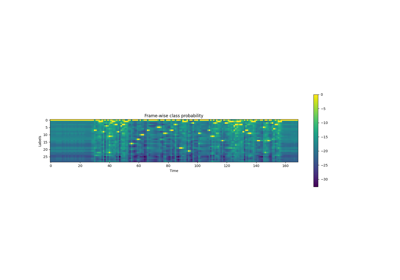

- Tutorials using

Wav2Vec2Model:

extract_features(waveforms: torch.Tensor, lengths: Optional[torch.Tensor] = None, num_layers: Optional[int] = None) → Tuple[List[torch.Tensor], Optional[torch.Tensor]]

Extract feature vectors from raw waveforms.

This returns the list of outputs from the intermediate layers of transformer block in encoder.

- Parameters

waveforms (Tensor) – Audio tensor of shape (batch, frames).

lengths (Tensor or None, optional) – Indicates the valid length of each audio in the batch. Shape: (batch, ). When waveforms contains audio clips of different durations, providing the lengths argument lets the model compute the corresponding valid output lengths and apply the proper mask in the transformer attention layer. If None, it is assumed that the entire audio waveform length is valid.

num_layers (int or None, optional) – If given, limit the number of intermediate layers to go through. Providing 1 will stop the computation after going through one intermediate layer. If not given, the outputs from all the intermediate layers are returned.

- Returns

- List of Tensors

Features from requested layers. Each Tensor is of shape: (batch, time frame, feature dimension)

- Tensor or None

If the lengths argument was provided, a Tensor of shape (batch, ) is returned. It indicates the valid length in time axis of each feature Tensor.

- Return type

(List[Tensor], Optional[Tensor])

forward(waveforms: torch.Tensor, lengths: Optional[torch.Tensor] = None) → Tuple[torch.Tensor, Optional[torch.Tensor]]

Compute the sequence of probability distributions over labels.

- Parameters

waveforms (Tensor) – Audio tensor of shape (batch, frames).

lengths (Tensor or None, optional) – Indicates the valid length of each audio in the batch. Shape: (batch, ). When waveforms contains audio clips of different durations, providing the lengths argument lets the model compute the corresponding valid output lengths and apply the proper mask in the transformer attention layer. If None, it is assumed that all the audio in waveforms have valid length. (Default: None)

- Returns

- Tensor

The sequences of probability distributions (in logit) over labels. Shape: (batch, frames, num labels).

- Tensor or None

If the lengths argument was provided, a Tensor of shape (batch, ) is returned. It indicates the valid length in time axis of the output Tensor.

- Return type

(Tensor, Optional[Tensor])
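The valid output lengths returned alongside the logits follow from the convolutional feature extractor. Assuming each convolution layer is unpadded, so that a length L becomes (L - kernel_size) // stride + 1, the sketch below applies that rule to the default extractor_conv_layer_config listed under wav2vec2_model. With that configuration, one second of 16 kHz audio maps to 49 output frames, which corresponds to a total stride of 320 samples (20 ms). The helper name num_output_frames is hypothetical.

```python
from typing import List, Optional, Tuple

# Default feature-extractor layout: (output_channel, kernel_size, stride) per conv layer
DEFAULT_CONV_LAYERS: List[Tuple[int, int, int]] = [
    (512, 10, 5),
    (512, 3, 2),
    (512, 3, 2),
    (512, 3, 2),
    (512, 3, 2),
    (512, 2, 2),
    (512, 2, 2),
]


def num_output_frames(num_samples: int, conv_layers: Optional[List[Tuple[int, int, int]]] = None) -> int:
    """Frames left after unpadded 1-D convolutions: L -> (L - kernel) // stride + 1."""
    layers = DEFAULT_CONV_LAYERS if conv_layers is None else conv_layers
    length = num_samples
    for _, kernel_size, stride in layers:
        length = (length - kernel_size) // stride + 1
    return length


print(num_output_frames(16000))  # 49
print(num_output_frames(8000))   # 24
```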

HuBERTPretrainModel

class torchaudio.models.HuBERTPretrainModel(wav2vec2: torchaudio.models.wav2vec2.model.Wav2Vec2Model, mask_generator: torch.nn.modules.module.Module, logit_generator: torch.nn.modules.module.Module)

HuBERT pre-train model for training from scratch.

Note

- To build the model, please use one of the factory functions in [hubert_pretrain_base, hubert_pretrain_large, hubert_pretrain_xlarge].

- Parameters

wav2vec2 (Wav2Vec2Model) – Wav2Vec2 encoder that generates the transformer outputs.

mask_generator (torch.nn.Module) – Mask generator that generates the mask for masked prediction during the training.

logit_generator (torch.nn.Module) – Logit generator that predicts the logits of the masked and unmasked inputs.

forward(waveforms: torch.Tensor, labels: torch.Tensor, audio_lengths: Optional[torch.Tensor] = None) → Tuple[torch.Tensor, Optional[torch.Tensor]]

Compute the sequence of probability distributions over labels.

- Parameters

waveforms (Tensor) – Audio tensor of dimension [batch, frames].

labels (Tensor) – Label for pre-training. A Tensor of dimension [batch, frames].

audio_lengths (Tensor or None, optional) – Indicates the valid length of each audio in the batch. Shape: [batch, ]. When waveforms contains audio clips of different durations, providing the audio_lengths argument lets the model compute the corresponding valid output lengths and apply the proper mask in the transformer attention layer. If None, it is assumed that all the audio in waveforms have valid length. (Default: None)

- Returns

- Tensor

The logits of the probability distributions over labels for the masked frames. Shape: (masked_frames, num labels).

- Tensor

The logits of the probability distributions over labels for the unmasked frames. Shape: (unmasked_frames, num labels).

- Tensor

The feature mean value for additional penalty loss. Shape: (1,).

- Return type

(Tensor, Tensor, Tensor)
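As a rough illustration of what "apply the proper mask" means for audio_lengths, the plain-Python sketch below turns per-example valid lengths into a padding mask. The helper name lengths_to_padding_mask and the convention that True marks a padded frame are assumptions for illustration, not torchaudio internals. Note also that the lengths relevant to the attention layers are frame counts after the feature extractor, not raw sample counts.

```python
from typing import List


def lengths_to_padding_mask(lengths: List[int], max_len: int) -> List[List[bool]]:
    """Hypothetical helper: a [batch][max_len] mask where True marks padded frames."""
    if any(n < 0 or n > max_len for n in lengths):
        raise ValueError("each length must lie in [0, max_len]")
    # Frame t of batch element b is padding when t >= lengths[b].
    return [[t >= n for t in range(max_len)] for n in lengths]


# Two clips padded to 4 frames: the first has 4 valid frames, the second only 2.
mask = lengths_to_padding_mask([4, 2], max_len=4)
print(mask)  # [[False, False, False, False], [False, False, True, True]]
```

Attention scores at positions where the mask is True would typically be set to a large negative value before the softmax, so padded frames receive no attention weight.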

Factory Functions

wav2vec2_model

-

torchaudio.models.wav2vec2_model(extractor_mode: str, extractor_conv_layer_config: Optional[List[Tuple[int, int, int]]], extractor_conv_bias: bool, encoder_embed_dim: int, encoder_projection_dropout: float, encoder_pos_conv_kernel: int, encoder_pos_conv_groups: int, encoder_num_layers: int, encoder_num_heads: int, encoder_attention_dropout: float, encoder_ff_interm_features: int, encoder_ff_interm_dropout: float, encoder_dropout: float, encoder_layer_norm_first: bool, encoder_layer_drop: float, aux_num_out: Optional[int]) → torchaudio.models.Wav2Vec2Model[source] Build a custom Wav2Vec2Model

Note

The “feature extractor” below corresponds to ConvFeatureExtractionModel in the original fairseq implementation. This is referred to as the “(convolutional) feature encoder” in the wav2vec 2.0 [7] paper. The “encoder” below corresponds to TransformerEncoder, and is referred to as the “Transformer” in the paper.

- Parameters

extractor_mode (str) –

Operation mode of feature extractor. Valid values are "group_norm" or "layer_norm". If "group_norm", then a single normalization is applied in the first convolution block. Otherwise, all the convolution blocks will have layer normalization.

This option corresponds to extractor_mode from fairseq.

extractor_conv_layer_config (list of integer tuples or None) –

Configuration of convolution layers in feature extractor. List of convolution configuration, i.e. [(output_channel, kernel_size, stride), ...]

If None is provided, then the following default value is used.

[
  (512, 10, 5),
  (512, 3, 2),
  (512, 3, 2),
  (512, 3, 2),
  (512, 3, 2),
  (512, 2, 2),
  (512, 2, 2),
]

This option corresponds to conv_feature_layers from fairseq.

extractor_conv_bias (bool) –

Whether to include a bias term in each convolution operation.

This option corresponds to conv_bias from fairseq.

encoder_embed_dim (int) –

The dimension of embedding in encoder.

This option corresponds to encoder_embed_dim from fairseq.

encoder_projection_dropout (float) –

The dropout probability applied after the input feature is projected to encoder_embed_dim.

This option corresponds to dropout_input from fairseq.

encoder_pos_conv_kernel (int) –

The kernel size of convolutional positional embeddings.

This option corresponds to conv_pos from fairseq.

encoder_pos_conv_groups (int) –

The number of groups of convolutional positional embeddings.

This option corresponds to conv_pos_groups from fairseq.

encoder_num_layers (int) –

The number of self-attention layers in the transformer block.

This option corresponds to encoder_layers from fairseq.

encoder_num_heads (int) –

The number of heads in self-attention layers.

This option corresponds to encoder_attention_heads from fairseq.

encoder_attention_dropout (float) –

The dropout probability applied after softmax in the self-attention layer.

This option corresponds to attention_dropout from fairseq.

encoder_ff_interm_features (int) –

The dimension of hidden features in the feed-forward layer.

This option corresponds to encoder_ffn_embed_dim from fairseq.

encoder_ff_interm_dropout (float) –

The dropout probability applied in the feed-forward layer.

This option corresponds to activation_dropout from fairseq.

encoder_dropout (float) –

The dropout probability applied at the end of the feed-forward layer.

This option corresponds to dropout from fairseq.

encoder_layer_norm_first (bool) –

Controls the order of layer normalization in the transformer and in each encoder layer. If True, in the transformer, layer norm is applied before features are fed to the encoder layers; in each encoder layer, the two layer norms are applied before and after self-attention. If False, in the transformer, layer norm is applied after features are fed to the encoder layers; in each encoder layer, the two layer norms are applied after self-attention (that is, before the feed-forward layer) and after the feed-forward layer.

This option corresponds to layer_norm_first from fairseq.

encoder_layer_drop (float) –

Probability to drop each encoder layer during training.

This option corresponds to layerdrop from fairseq.

aux_num_out (int or None) – When provided, attach an extra linear layer on top of the encoder, which can be used for fine-tuning.

- Returns

The resulting model.

- Return type

Wav2Vec2Model
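To see how extractor_conv_layer_config determines the frame rate of the encoder input, the sketch below applies the standard output-length formula of an unpadded Conv1d to the default configuration. It assumes, as in fairseq, that the feature extractor convolutions use no padding and no dilation; the function names are hypothetical.

```python
from typing import List, Tuple

# Default extractor_conv_layer_config: (output_channel, kernel_size, stride).
DEFAULT_CONV_CONFIG: List[Tuple[int, int, int]] = [
    (512, 10, 5),
    (512, 3, 2),
    (512, 3, 2),
    (512, 3, 2),
    (512, 3, 2),
    (512, 2, 2),
    (512, 2, 2),
]


def num_output_frames(num_samples: int, config=DEFAULT_CONV_CONFIG) -> int:
    """Frames produced by a stack of 1-D convolutions with padding=0, dilation=1."""
    length = num_samples
    for _, kernel_size, stride in config:
        length = (length - kernel_size) // stride + 1
        if length < 1:
            return 0  # input too short to produce even one frame
    return length


def receptive_field_and_stride(config=DEFAULT_CONV_CONFIG) -> Tuple[int, int]:
    """Input samples seen by one output frame, and the hop between frames."""
    field, jump = 1, 1
    for _, kernel_size, stride in config:
        field += (kernel_size - 1) * jump  # each layer widens the view
        jump *= stride                     # strides compound multiplicatively
    return field, jump


print(num_output_frames(16000))      # 49
print(receptive_field_and_stride())  # (400, 320)
```

At 16 kHz, this corresponds to one frame every 20 ms, each covering 25 ms of audio. Applying num_output_frames to each per-example length in samples gives the valid frame length of that example.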

wav2vec2_base

-

torchaudio.models.wav2vec2_base(encoder_projection_dropout: float = 0.1, encoder_attention_dropout: float = 0.1, encoder_ff_interm_dropout: float = 0.1, encoder_dropout: float = 0.1, encoder_layer_drop: float = 0.1, aux_num_out: Optional[int] = None) → torchaudio.models.Wav2Vec2Model[source] Build Wav2Vec2Model with “base” architecture from wav2vec 2.0 [7]

- Parameters

encoder_projection_dropout (float) – See wav2vec2_model().

encoder_attention_dropout (float) – See wav2vec2_model().

encoder_ff_interm_dropout (float) – See wav2vec2_model().

encoder_dropout (float) – See wav2vec2_model().

encoder_layer_drop (float) – See wav2vec2_model().

aux_num_out (int or None, optional) – See wav2vec2_model().

- Returns

The resulting model.

- Return type

Wav2Vec2Model

wav2vec2_large

-

torchaudio.models.wav2vec2_large(encoder_projection_dropout: float = 0.1, encoder_attention_dropout: float = 0.1, encoder_ff_interm_dropout: float = 0.1, encoder_dropout: float = 0.1, encoder_layer_drop: float = 0.1, aux_num_out: Optional[int] = None) → torchaudio.models.Wav2Vec2Model[source] Build Wav2Vec2Model with “large” architecture from wav2vec 2.0 [7]

- Parameters

encoder_projection_dropout (float) – See wav2vec2_model().

encoder_attention_dropout (float) – See wav2vec2_model().

encoder_ff_interm_dropout (float) – See wav2vec2_model().

encoder_dropout (float) – See wav2vec2_model().

encoder_layer_drop (float) – See wav2vec2_model().

aux_num_out (int or None, optional) – See wav2vec2_model().

- Returns

The resulting model.

- Return type

Wav2Vec2Model

wav2vec2_large_lv60k

-

torchaudio.models.wav2vec2_large_lv60k(encoder_projection_dropout: float = 0.1, encoder_attention_dropout: float = 0.0, encoder_ff_interm_dropout: float = 0.1, encoder_dropout: float = 0.0, encoder_layer_drop: float = 0.1, aux_num_out: Optional[int] = None) → torchaudio.models.Wav2Vec2Model[source] Build Wav2Vec2Model with “large lv-60k” architecture from wav2vec 2.0 [7]

- Parameters

encoder_projection_dropout (float) – See wav2vec2_model().

encoder_attention_dropout (float) – See wav2vec2_model().

encoder_ff_interm_dropout (float) – See wav2vec2_model().

encoder_dropout (float) – See wav2vec2_model().

encoder_layer_drop (float) – See wav2vec2_model().

aux_num_out (int or None, optional) – See wav2vec2_model().

- Returns

The resulting model.

- Return type

Wav2Vec2Model

hubert_base

-

torchaudio.models.hubert_base(encoder_projection_dropout: float = 0.1, encoder_attention_dropout: float = 0.1, encoder_ff_interm_dropout: float = 0.0, encoder_dropout: float = 0.1, encoder_layer_drop: float = 0.05, aux_num_out: Optional[int] = None) → torchaudio.models.Wav2Vec2Model[source] Build HuBERT model with “base” architecture from HuBERT [8]

- Parameters

encoder_projection_dropout (float) – See wav2vec2_model().

encoder_attention_dropout (float) – See wav2vec2_model().

encoder_ff_interm_dropout (float) – See wav2vec2_model().

encoder_dropout (float) – See wav2vec2_model().

encoder_layer_drop (float) – See wav2vec2_model().

aux_num_out (int or None, optional) – See wav2vec2_model().

- Returns

The resulting model.

- Return type

Wav2Vec2Model

hubert_large

-

torchaudio.models.hubert_large(encoder_projection_dropout: float = 0.0, encoder_attention_dropout: float = 0.0, encoder_ff_interm_dropout: float = 0.0, encoder_dropout: float = 0.0, encoder_layer_drop: float = 0.0, aux_num_out: Optional[int] = None) → torchaudio.models.Wav2Vec2Model[source] Build HuBERT model with “large” architecture from HuBERT [8]

- Parameters

encoder_projection_dropout (float) – See wav2vec2_model().

encoder_attention_dropout (float) – See wav2vec2_model().

encoder_ff_interm_dropout (float) – See wav2vec2_model().

encoder_dropout (float) – See wav2vec2_model().

encoder_layer_drop (float) – See wav2vec2_model().

aux_num_out (int or None, optional) – See wav2vec2_model().

- Returns

The resulting model.

- Return type

Wav2Vec2Model

hubert_xlarge

-

torchaudio.models.hubert_xlarge(encoder_projection_dropout: float = 0.0, encoder_attention_dropout: float = 0.0, encoder_ff_interm_dropout: float = 0.0, encoder_dropout: float = 0.0, encoder_layer_drop: float = 0.0, aux_num_out: Optional[int] = None) → torchaudio.models.Wav2Vec2Model[source] Build HuBERT model with “extra large” architecture from HuBERT [8]

- Parameters

encoder_projection_dropout (float) – See wav2vec2_model().

encoder_attention_dropout (float) – See wav2vec2_model().

encoder_ff_interm_dropout (float) – See wav2vec2_model().

encoder_dropout (float) – See wav2vec2_model().

encoder_layer_drop (float) – See wav2vec2_model().

aux_num_out (int or None, optional) – See wav2vec2_model().

- Returns

The resulting model.

- Return type

Wav2Vec2Model
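The Wav2Vec2Model factory functions from wav2vec2_base to hubert_xlarge differ in architecture and in their default regularization values. As a quick reference for the second point only, the sketch below tabulates the dropout-related defaults copied from the signatures above and reports which ones change between two factories. The names DEFAULTS and default_diff are hypothetical; architecture differences are not captured here.

```python
from typing import Dict, Tuple

# Dropout-related defaults copied from the factory signatures above
# (aux_num_out defaults to None in every factory and is omitted).
KEYS = (
    "encoder_projection_dropout",
    "encoder_attention_dropout",
    "encoder_ff_interm_dropout",
    "encoder_dropout",
    "encoder_layer_drop",
)
DEFAULTS: Dict[str, Tuple[float, ...]] = {
    "wav2vec2_base":        (0.1, 0.1, 0.1, 0.1, 0.1),
    "wav2vec2_large":       (0.1, 0.1, 0.1, 0.1, 0.1),
    "wav2vec2_large_lv60k": (0.1, 0.0, 0.1, 0.0, 0.1),
    "hubert_base":          (0.1, 0.1, 0.0, 0.1, 0.05),
    "hubert_large":         (0.0, 0.0, 0.0, 0.0, 0.0),
    "hubert_xlarge":        (0.0, 0.0, 0.0, 0.0, 0.0),
}


def default_diff(a: str, b: str) -> Dict[str, Tuple[float, float]]:
    """Arguments whose default differs between factories a and b, as (a, b)."""
    return {
        key: (va, vb)
        for key, va, vb in zip(KEYS, DEFAULTS[a], DEFAULTS[b])
        if va != vb
    }


print(default_diff("wav2vec2_large", "wav2vec2_large_lv60k"))
# {'encoder_attention_dropout': (0.1, 0.0), 'encoder_dropout': (0.1, 0.0)}
```

Because every value is an ordinary keyword argument, any single default can be overridden at call time, for example wav2vec2_large(encoder_dropout=0.0).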

hubert_pretrain_model

-

torchaudio.models.hubert_pretrain_model(extractor_mode: str, extractor_conv_layer_config: Optional[List[Tuple[int, int, int]]], extractor_conv_bias: bool, encoder_embed_dim: int, encoder_projection_dropout: float, encoder_pos_conv_kernel: int, encoder_pos_conv_groups: int, encoder_num_layers: int, encoder_num_heads: int, encoder_attention_dropout: float, encoder_ff_interm_features: int, encoder_ff_interm_dropout: float, encoder_dropout: float, encoder_layer_norm_first: bool, encoder_layer_drop: float, mask_prob: float, mask_selection: str, mask_other: float, mask_length: int, no_mask_overlap: bool, mask_min_space: int, mask_channel_prob: float, mask_channel_selection: str, mask_channel_other: float, mask_channel_length: int, no_mask_channel_overlap: bool, mask_channel_min_space: int, skip_masked: bool, skip_nomask: bool, num_classes: int, final_dim: int) → torchaudio.models.HuBERTPretrainModel[source] Build a custom HuBERTPretrainModel for training from scratch

Note

The “feature extractor” below corresponds to ConvFeatureExtractionModel in the original fairseq implementation. This is referred to as the “(convolutional) feature encoder” in the wav2vec 2.0 [7] paper. The “encoder” below corresponds to TransformerEncoder, and is referred to as the “Transformer” in the paper.

- Parameters

extractor_mode (str) –

Operation mode of feature extractor. Valid values are "group_norm" or "layer_norm". If "group_norm", then a single normalization is applied in the first convolution block. Otherwise, all the convolution blocks will have layer normalization.

This option corresponds to extractor_mode from fairseq.

extractor_conv_layer_config (list of integer tuples or None) –

Configuration of convolution layers in feature extractor. List of convolution configuration, i.e. [(output_channel, kernel_size, stride), ...]

If None is provided, then the following default value is used.

[
  (512, 10, 5),
  (512, 3, 2),
  (512, 3, 2),
  (512, 3, 2),
  (512, 3, 2),
  (512, 2, 2),
  (512, 2, 2),
]

This option corresponds to conv_feature_layers from fairseq.

extractor_conv_bias (bool) –

Whether to include a bias term in each convolution operation.

This option corresponds to conv_bias from fairseq.

encoder_embed_dim (int) –

The dimension of embedding in encoder.

This option corresponds to encoder_embed_dim from fairseq.

encoder_projection_dropout (float) –

The dropout probability applied after the input feature is projected to encoder_embed_dim.

This option corresponds to dropout_input from fairseq.

encoder_pos_conv_kernel (int) –

The kernel size of convolutional positional embeddings.

This option corresponds to conv_pos from fairseq.

encoder_pos_conv_groups (int) –

The number of groups of convolutional positional embeddings.

This option corresponds to conv_pos_groups from fairseq.

encoder_num_layers (int) –

The number of self-attention layers in the transformer block.

This option corresponds to encoder_layers from fairseq.

encoder_num_heads (int) –

The number of heads in self-attention layers.

This option corresponds to encoder_attention_heads from fairseq.

encoder_attention_dropout (float) –

The dropout probability applied after softmax in the self-attention layer.

This option corresponds to attention_dropout from fairseq.

encoder_ff_interm_features (int) –

The dimension of hidden features in the feed-forward layer.

This option corresponds to encoder_ffn_embed_dim from fairseq.

encoder_ff_interm_dropout (float) –

The dropout probability applied in the feed-forward layer.

This option corresponds to activation_dropout from fairseq.

encoder_dropout (float) –

The dropout probability applied at the end of the feed-forward layer.

This option corresponds to dropout from fairseq.

encoder_layer_norm_first (bool) –

Controls the order of layer normalization in the transformer and in each encoder layer. If True, in the transformer, layer norm is applied before features are fed to the encoder layers; in each encoder layer, the two layer norms are applied before and after self-attention. If False, in the transformer, layer norm is applied after features are fed to the encoder layers; in each encoder layer, the two layer norms are applied after self-attention (that is, before the feed-forward layer) and after the feed-forward layer.

This option corresponds to layer_norm_first from fairseq.

encoder_layer_drop (float) –

Probability to drop each encoder layer during training.

This option corresponds to layerdrop from fairseq.

mask_prob (float) –

Probability for each token to be chosen as the start of a span to be masked. This is multiplied by the number of timesteps divided by the length of the mask span, so that approximately this fraction of all elements is masked. However, due to overlaps, the actual number will be smaller (unless no_mask_overlap is True).

This option corresponds to mask_prob from fairseq.

mask_selection (str) –

How to choose the mask length. Options: [static, uniform, normal, poisson].

This option corresponds to mask_selection from fairseq.

mask_other (float) –

Secondary mask argument (used for more complex distributions).

This option corresponds to mask_other from fairseq.

mask_length (int) –

The length of each mask span.

This option corresponds to mask_length from fairseq.

no_mask_overlap (bool) –

If True, mask spans are not allowed to overlap.

This option corresponds to no_mask_overlap from fairseq.

mask_min_space (int) –

Minimum space between spans (if no overlap is enabled).

This option corresponds to mask_min_space from fairseq.

mask_channel_prob (float) –

The probability of replacing a feature with 0.

This option corresponds to mask_channel_prob from fairseq.

mask_channel_selection (str) –

How to choose the mask length for channel masking. Options: [static, uniform, normal, poisson].

This option corresponds to mask_channel_selection from fairseq.

mask_channel_other (float) –

Secondary mask argument for channel masking (used for more complex distributions).

This option corresponds to mask_channel_other from fairseq.

mask_channel_length (int) –

The length of each mask span for channel masking.

This option corresponds to mask_channel_length from fairseq.

no_mask_channel_overlap (bool) –

If True, channel mask spans are not allowed to overlap.

This option corresponds to no_mask_channel_overlap from fairseq.

mask_channel_min_space (int) –

Minimum space between spans for channel masking (if no overlap is enabled).

This option corresponds to mask_channel_min_space from fairseq.

skip_masked (bool) –

If True, skip computing losses over masked frames.

This option corresponds to skip_masked from fairseq.

skip_nomask (bool) –

If True, skip computing losses over unmasked frames.

This option corresponds to skip_nomask from fairseq.

num_classes (int) – The number of classes in the labels.

final_dim (int) –

Project final representations and targets to final_dim.

This option corresponds to final_dim from fairseq.

- Returns

The resulting model.

- Return type

HuBERTPretrainModel
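The mask_prob description above involves a small calculation: the number of spans is roughly mask_prob × num_frames / mask_length, and overlapping spans keep the masked fraction below mask_prob. The plain-Python sketch below illustrates this for mask_selection="static". It is a simplified, hypothetical helper, not the fairseq or torchaudio mask generator: the span count is truncated with int() rather than randomly rounded, and no_mask_overlap and mask_min_space are ignored.

```python
import random
from typing import List


def sample_static_span_mask(num_frames: int, mask_prob: float, mask_length: int,
                            seed: int = 0) -> List[bool]:
    """Hypothetical sketch of span masking with a fixed span length."""
    rng = random.Random(seed)
    # Expected number of spans: mask_prob * num_frames / mask_length,
    # clipped so that enough distinct start positions exist.
    num_spans = int(mask_prob * num_frames / mask_length)
    num_spans = max(0, min(num_spans, num_frames - mask_length))
    # Distinct start positions chosen so every span fits inside the sequence.
    starts = rng.sample(range(max(0, num_frames - mask_length)), num_spans)
    mask = [False] * num_frames
    for s in starts:
        for t in range(s, s + mask_length):
            mask[t] = True
    return mask


mask = sample_static_span_mask(num_frames=500, mask_prob=0.8, mask_length=10)
# 40 spans of 10 frames each; overlaps mean at most 400 of the 500 frames are masked.
print(sum(mask), "of", len(mask), "frames masked")
```

With mask_prob=0.8 and mask_length=10, at most 80% of the frames can be masked, and in practice noticeably fewer because overlapping spans mask some frames twice.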

hubert_pretrain_base

-

torchaudio.models.hubert_pretrain_base(encoder_projection_dropout: float = 0.1, encoder_attention_dropout: float = 0.1, encoder_ff_interm_dropout: float = 0.0, encoder_dropout: float = 0.1, encoder_layer_drop: float = 0.05, num_classes: int = 100) → torchaudio.models.HuBERTPretrainModel[source] Build HuBERTPretrainModel with “base” architecture from HuBERT [8]

- Parameters

encoder_projection_dropout (float) – See hubert_pretrain_model().

encoder_attention_dropout (float) – See hubert_pretrain_model().

encoder_ff_interm_dropout (float) – See hubert_pretrain_model().

encoder_dropout (float) – See hubert_pretrain_model().

encoder_layer_drop (float) – See hubert_pretrain_model().

num_classes (int, optional) – See hubert_pretrain_model().

- Returns

The resulting model.

- Return type

HuBERTPretrainModel

hubert_pretrain_large

-

torchaudio.models.hubert_pretrain_large(encoder_projection_dropout: float = 0.0, encoder_attention_dropout: float = 0.0, encoder_ff_interm_dropout: float = 0.0, encoder_dropout: float = 0.0, encoder_layer_drop: float = 0.0) → torchaudio.models.HuBERTPretrainModel[source] Build HuBERTPretrainModel for pre-training with “large” architecture from HuBERT [8]

- Parameters

encoder_projection_dropout (float) – See hubert_pretrain_model().

encoder_attention_dropout (float) – See hubert_pretrain_model().

encoder_ff_interm_dropout (float) – See hubert_pretrain_model().

encoder_dropout (float) – See hubert_pretrain_model().

encoder_layer_drop (float) – See hubert_pretrain_model().

- Returns

The resulting model.

- Return type

HuBERTPretrainModel

hubert_pretrain_xlarge

-

torchaudio.models.hubert_pretrain_xlarge(encoder_projection_dropout: float = 0.0, encoder_attention_dropout: float = 0.0, encoder_ff_interm_dropout: float = 0.0, encoder_dropout: float = 0.0, encoder_layer_drop: float = 0.0) → torchaudio.models.HuBERTPretrainModel[source] Build HuBERTPretrainModel for pre-training with “extra large” architecture from HuBERT [8]

- Parameters

encoder_projection_dropout (float) – See hubert_pretrain_model().

encoder_attention_dropout (float) – See hubert_pretrain_model().

encoder_ff_interm_dropout (float) – See hubert_pretrain_model().

encoder_dropout (float) – See hubert_pretrain_model().

encoder_layer_drop (float) – See hubert_pretrain_model().

- Returns

The resulting model.

- Return type

HuBERTPretrainModel

Utility Functions

import_huggingface_model

-

torchaudio.models.wav2vec2.utils.import_huggingface_model(original: torch.nn.Module) → torchaudio.models.Wav2Vec2Model[source] Build Wav2Vec2Model from the corresponding model object of Hugging Face’s Transformers.

- Parameters

original (torch.nn.Module) – An instance of Wav2Vec2ForCTC from transformers.

- Returns

Imported model.

- Return type

Wav2Vec2Model

- Example

>>> import torchaudio
>>> from transformers import Wav2Vec2ForCTC
>>> from torchaudio.models.wav2vec2.utils import import_huggingface_model
>>>
>>> original = Wav2Vec2ForCTC.from_pretrained("facebook/wav2vec2-base-960h")
>>> model = import_huggingface_model(original)
>>>
>>> waveforms, _ = torchaudio.load("audio.wav")
>>> logits, _ = model(waveforms)

import_fairseq_model

-

torchaudio.models.wav2vec2.utils.import_fairseq_model(original: torch.nn.Module) → torchaudio.models.Wav2Vec2Model[source] Build Wav2Vec2Model from the corresponding model object of fairseq.

- Parameters

original (torch.nn.Module) – An instance of fairseq’s Wav2Vec2.0 or HuBERT model. One of fairseq.models.wav2vec.wav2vec2_asr.Wav2VecEncoder, fairseq.models.wav2vec.wav2vec2.Wav2Vec2Model or fairseq.models.hubert.hubert_asr.HubertEncoder.

- Returns

Imported model.

- Return type

Wav2Vec2Model

- Example - Loading pretrain-only model

>>> import fairseq.checkpoint_utils
>>> import torch
>>> import torchaudio
>>> from torchaudio.models.wav2vec2.utils import import_fairseq_model
>>>
>>> # Load model using fairseq
>>> model_file = 'wav2vec_small.pt'
>>> model, _, _ = fairseq.checkpoint_utils.load_model_ensemble_and_task([model_file])
>>> original = model[0]
>>> imported = import_fairseq_model(original)
>>>
>>> # Perform feature extraction
>>> waveform, _ = torchaudio.load('audio.wav')
>>> features, _ = imported.extract_features(waveform)
>>>
>>> # Compare result with the original model from fairseq
>>> reference = original.feature_extractor(waveform).transpose(1, 2)
>>> torch.testing.assert_allclose(features, reference)

- Example - Fine-tuned model

>>> import fairseq.checkpoint_utils
>>> import torch
>>> import torchaudio
>>> from torchaudio.models.wav2vec2.utils import import_fairseq_model
>>>
>>> # Load model using fairseq
>>> model_file = 'wav2vec_small_960h.pt'
>>> model, _, _ = fairseq.checkpoint_utils.load_model_ensemble_and_task([model_file])
>>> original = model[0]
>>> imported = import_fairseq_model(original.w2v_encoder)
>>>
>>> # Perform encoding
>>> waveform, _ = torchaudio.load('audio.wav')
>>> emission, _ = imported(waveform)
>>>
>>> # Compare result with the original model from fairseq
>>> mask = torch.zeros_like(waveform)
>>> reference = original(waveform, mask)['encoder_out'].transpose(0, 1)
>>> torch.testing.assert_allclose(emission, reference)

WaveRNN

-

class

torchaudio.models.WaveRNN(upsample_scales: List[int], n_classes: int, hop_length: int, n_res_block: int = 10, n_rnn: int = 512, n_fc: int = 512, kernel_size: int = 5, n_freq: int = 128, n_hidden: int = 128, n_output: int = 128)[source] WaveRNN model based on the implementation from fatchord.

The original implementation was introduced in Efficient Neural Audio Synthesis [9]. The input channels of waveform and spectrogram have to be 1. The product of upsample_scales must equal hop_length.

- Parameters

upsample_scales – the list of upsample scales.

n_classes – the number of output classes.

hop_length – the number of samples between the starts of consecutive frames.

n_res_block – the number of ResBlocks in the stack. (Default: 10)

n_rnn – the dimension of the RNN layer. (Default: 512)

n_fc – the dimension of the fully connected layer. (Default: 512)

kernel_size – the kernel size of the first Conv1d layer. (Default: 5)

n_freq – the number of bins in a spectrogram. (Default: 128)

n_hidden – the number of hidden dimensions of the ResBlock. (Default: 128)

n_output – the number of output dimensions of MelResNet. (Default: 128)

- Example

>>> import torchaudio
>>> from torchaudio.models import WaveRNN
>>> from torchaudio.transforms import MelSpectrogram
>>>
>>> wavernn = WaveRNN(upsample_scales=[5, 5, 8], n_classes=512, hop_length=200)
>>> waveform, sample_rate = torchaudio.load(file)
>>> # waveform shape: (n_batch, n_channel, (n_time - kernel_size + 1) * hop_length)
>>> specgram = MelSpectrogram(sample_rate)(waveform)  # shape: (n_batch, n_channel, n_freq, n_time)
>>> output = wavernn(waveform, specgram)
>>> # output shape: (n_batch, n_channel, (n_time - kernel_size + 1) * hop_length, n_classes)

-

forward(waveform: torch.Tensor, specgram: torch.Tensor) → torch.Tensor[source] Pass the input through the WaveRNN model.

- Parameters

waveform – the input waveform to the WaveRNN layer (n_batch, 1, (n_time - kernel_size + 1) * hop_length)

specgram – the input spectrogram to the WaveRNN layer (n_batch, 1, n_freq, n_time)

- Returns

shape (n_batch, 1, (n_time - kernel_size + 1) * hop_length, n_classes)

- Return type

Tensor
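The shapes above tie together three parameters: the product of upsample_scales must equal hop_length, and the waveform passed to forward has (n_time - kernel_size + 1) * hop_length samples. The sketch below computes that length and checks the constraint. It assumes, based on the documented shapes, that kernel_size - 1 spectrogram frames are lost in the unpadded first Conv1d and that each remaining frame is upsampled to hop_length samples; the helper name is hypothetical.

```python
from math import prod
from typing import List


def wavernn_waveform_length(n_time: int, kernel_size: int, hop_length: int,
                            upsample_scales: List[int]) -> int:
    """Hypothetical helper: waveform length matching a spectrogram of n_time frames."""
    if prod(upsample_scales) != hop_length:
        raise ValueError(
            f"product of upsample_scales ({prod(upsample_scales)}) "
            f"must equal hop_length ({hop_length})"
        )
    # kernel_size - 1 frames are trimmed; each remaining frame spans hop_length samples.
    return (n_time - kernel_size + 1) * hop_length


# Settings from the example above: upsample_scales=[5, 5, 8], hop_length=200, kernel_size=5.
print(wavernn_waveform_length(n_time=100, kernel_size=5, hop_length=200,
                              upsample_scales=[5, 5, 8]))  # 19200
```

Validating the upsample_scales constraint up front gives a clear error message instead of a shape mismatch deep inside the network.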

-

infer(specgram: torch.Tensor, lengths: Optional[torch.Tensor] = None) → Tuple[torch.Tensor, Optional[torch.Tensor]][source] Inference method of WaveRNN.

This function currently only supports multinomial sampling, which assumes the network is trained on cross entropy loss.

- Parameters

specgram (Tensor) – Batch of spectrograms. Shape: (n_batch, n_freq, n_time).

lengths (Tensor or None, optional) – Indicates the valid length of each audio in the batch. Shape: (batch, ). When specgram contains spectrograms with different durations, providing the lengths argument lets the model compute the corresponding valid output lengths. If None, all the spectrograms in specgram are assumed to have valid length. Default: None.

- Returns

- Tensor

The inferred waveform of size (n_batch, 1, n_time). 1 stands for a single channel.

- Tensor or None

If the lengths argument was provided, a Tensor of shape (batch, ) is returned. It indicates the valid length along the time axis of the output Tensor.

- Return type

(Tensor, Optional[Tensor])
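Because infer currently supports only multinomial sampling, each generated sample amounts to a softmax over the n_classes logits followed by a categorical draw. The plain-Python sketch below shows one such step. The function names are hypothetical, and the linear mapping from class index back to an amplitude in [-1, 1] is an assumption for illustration; the exact post-processing applied by torchaudio's WaveRNN should be checked in its source.

```python
import math
import random
from typing import List


def sample_class(logits: List[float], rng: random.Random) -> int:
    """Draw one class index from softmax(logits) (multinomial sampling)."""
    m = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(x - m) for x in logits]  # unnormalized softmax
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


def class_to_amplitude(index: int, n_classes: int) -> float:
    """Assumed linear map of a class index in [0, n_classes - 1] onto [-1.0, 1.0]."""
    return 2.0 * index / (n_classes - 1) - 1.0


rng = random.Random(0)
idx = sample_class([0.0, 0.0, 50.0], rng)  # nearly all mass on class 2
print(idx, class_to_amplitude(idx, n_classes=3))  # 2 1.0
```

Sampling rather than taking the argmax matches a network trained with cross-entropy loss, since it draws from the predicted distribution instead of always picking its mode.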

References

- 1

Anmol Gulati, James Qin, Chung-Cheng Chiu, Niki Parmar, Yu Zhang, Jiahui Yu, Wei Han, Shibo Wang, Zhengdong Zhang, Yonghui Wu, and Ruoming Pang. Conformer: convolution-augmented transformer for speech recognition. 2020. arXiv:2005.08100.

- 2

Yi Luo and Nima Mesgarani. Conv-tasnet: surpassing ideal time–frequency magnitude masking for speech separation. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 27(8):1256–1266, Aug 2019. URL: http://dx.doi.org/10.1109/TASLP.2019.2915167, doi:10.1109/taslp.2019.2915167.

- 3

Awni Hannun, Carl Case, Jared Casper, Bryan Catanzaro, Greg Diamos, Erich Elsen, Ryan Prenger, Sanjeev Satheesh, Shubho Sengupta, Adam Coates, and Andrew Y. Ng. Deep speech: scaling up end-to-end speech recognition. 2014. arXiv:1412.5567.

- 4

Yangyang Shi, Yongqiang Wang, Chunyang Wu, Ching-Feng Yeh, Julian Chan, Frank Zhang, Duc Le, and Mike Seltzer. Emformer: efficient memory transformer based acoustic model for low latency streaming speech recognition. In ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 6783–6787. 2021.

- 5

Jonathan Shen, Ruoming Pang, Ron J Weiss, Mike Schuster, Navdeep Jaitly, Zongheng Yang, Zhifeng Chen, Yu Zhang, Yuxuan Wang, Rj Skerry-Ryan, and others. Natural tts synthesis by conditioning wavenet on mel spectrogram predictions. In 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 4779–4783. IEEE, 2018.

- 6

Ronan Collobert, Christian Puhrsch, and Gabriel Synnaeve. Wav2letter: an end-to-end convnet-based speech recognition system. 2016. arXiv:1609.03193.

- 7

Alexei Baevski, Henry Zhou, Abdelrahman Mohamed, and Michael Auli. Wav2vec 2.0: a framework for self-supervised learning of speech representations. 2020. arXiv:2006.11477.

- 8

Wei-Ning Hsu, Benjamin Bolte, Yao-Hung Hubert Tsai, Kushal Lakhotia, Ruslan Salakhutdinov, and Abdelrahman Mohamed. Hubert: self-supervised speech representation learning by masked prediction of hidden units. 2021. arXiv:2106.07447.

- 9

Nal Kalchbrenner, Erich Elsen, Karen Simonyan, Seb Noury, Norman Casagrande, Edward Lockhart, Florian Stimberg, Aaron van den Oord, Sander Dieleman, and Koray Kavukcuoglu. Efficient neural audio synthesis. 2018. arXiv:1802.08435.