read_video

- torchvision.io.read_video(filename: str, start_pts: Union[float, Fraction] = 0, end_pts: Optional[Union[float, Fraction]] = None, pts_unit: str = 'pts', output_format: str = 'THWC') Tuple[Tensor, Tensor, Dict[str, Any]][source]

[DEPRECATED] Reads a video from a file, returning both the video frames and the audio frames

Warning

DEPRECATED: All the video decoding and encoding capabilities of torchvision are deprecated from version 0.22 and will be removed in version 0.24. We recommend that you migrate to TorchCodec, where we’ll consolidate the future decoding/encoding capabilities of PyTorch

- Parameters:

filename (str) – path to the video file. If using the pyav backend, this can be whatever

av.openaccepts.start_pts (python:int if pts_unit = 'pts', python:float / Fraction if pts_unit = 'sec', optional) – The start presentation time of the video

end_pts (python:int if pts_unit = 'pts', python:float / Fraction if pts_unit = 'sec', optional) – The end presentation time

pts_unit (str, optional) – unit in which start_pts and end_pts values will be interpreted, either ‘pts’ or ‘sec’. Defaults to ‘pts’.

output_format (str, optional) – The format of the output video tensors. Can be either “THWC” (default) or “TCHW”.

- Returns:

the T video frames aframes (Tensor[K, L]): the audio frames, where K is the number of channels and L is the number of points info (Dict): metadata for the video and audio. Can contain the fields video_fps (float) and audio_fps (int)

- Return type:

vframes (Tensor[T, H, W, C] or Tensor[T, C, H, W])

Examples using

read_video:

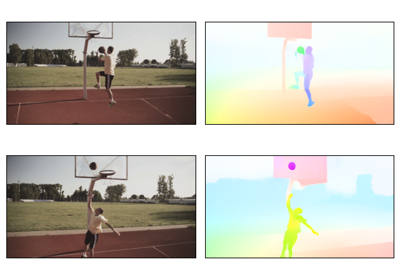

Optical Flow: Predicting movement with the RAFT model

Optical Flow: Predicting movement with the RAFT model