torchvision.ops

torchvision.ops implements operators that are specific for Computer Vision.

Note

All operators have native support for TorchScript.

-

torchvision.ops.batched_nms(boxes: torch.Tensor, scores: torch.Tensor, idxs: torch.Tensor, iou_threshold: float) → torch.Tensor[source] Performs non-maximum suppression in a batched fashion.

Each index value correspond to a category, and NMS will not be applied between elements of different categories.

- Parameters

boxes (Tensor[N, 4]) – boxes where NMS will be performed. They are expected to be in

(x1, y1, x2, y2)format with0 <= x1 < x2and0 <= y1 < y2.scores (Tensor[N]) – scores for each one of the boxes

idxs (Tensor[N]) – indices of the categories for each one of the boxes.

iou_threshold (float) – discards all overlapping boxes with IoU > iou_threshold

- Returns

int64 tensor with the indices of the elements that have been kept by NMS, sorted in decreasing order of scores

- Return type

Tensor

-

torchvision.ops.box_area(boxes: torch.Tensor) → torch.Tensor[source] Computes the area of a set of bounding boxes, which are specified by their (x1, y1, x2, y2) coordinates.

- Parameters

boxes (Tensor[N, 4]) – boxes for which the area will be computed. They are expected to be in (x1, y1, x2, y2) format with

0 <= x1 < x2and0 <= y1 < y2.- Returns

the area for each box

- Return type

Tensor[N]

-

torchvision.ops.box_convert(boxes: torch.Tensor, in_fmt: str, out_fmt: str) → torch.Tensor[source] Converts boxes from given in_fmt to out_fmt. Supported in_fmt and out_fmt are:

‘xyxy’: boxes are represented via corners, x1, y1 being top left and x2, y2 being bottom right. This is the format that torchvision utilities expect.

‘xywh’ : boxes are represented via corner, width and height, x1, y2 being top left, w, h being width and height.

‘cxcywh’ : boxes are represented via centre, width and height, cx, cy being center of box, w, h being width and height.

- Parameters

- Returns

Boxes into converted format.

- Return type

Tensor[N, 4]

-

torchvision.ops.box_iou(boxes1: torch.Tensor, boxes2: torch.Tensor) → torch.Tensor[source] Return intersection-over-union (Jaccard index) between two sets of boxes.

Both sets of boxes are expected to be in

(x1, y1, x2, y2)format with0 <= x1 < x2and0 <= y1 < y2.- Parameters

boxes1 (Tensor[N, 4]) – first set of boxes

boxes2 (Tensor[M, 4]) – second set of boxes

- Returns

the NxM matrix containing the pairwise IoU values for every element in boxes1 and boxes2

- Return type

Tensor[N, M]

-

torchvision.ops.clip_boxes_to_image(boxes: torch.Tensor, size: Tuple[int, int]) → torch.Tensor[source] Clip boxes so that they lie inside an image of size size.

- Parameters

boxes (Tensor[N, 4]) – boxes in

(x1, y1, x2, y2)format with0 <= x1 < x2and0 <= y1 < y2.size (Tuple[height, width]) – size of the image

- Returns

clipped boxes

- Return type

Tensor[N, 4]

-

torchvision.ops.deform_conv2d(input: torch.Tensor, offset: torch.Tensor, weight: torch.Tensor, bias: Optional[torch.Tensor] = None, stride: Tuple[int, int] = (1, 1), padding: Tuple[int, int] = (0, 0), dilation: Tuple[int, int] = (1, 1), mask: Optional[torch.Tensor] = None) → torch.Tensor[source] Performs Deformable Convolution v2, described in Deformable ConvNets v2: More Deformable, Better Results if

maskis notNoneand Performs Deformable Convolution, described in Deformable Convolutional Networks ifmaskisNone.- Parameters

input (Tensor[batch_size, in_channels, in_height, in_width]) – input tensor

offset (Tensor[batch_size, 2 * offset_groups * kernel_height * kernel_width, out_height, out_width]) – offsets to be applied for each position in the convolution kernel.

weight (Tensor[out_channels, in_channels // groups, kernel_height, kernel_width]) – convolution weights, split into groups of size (in_channels // groups)

bias (Tensor[out_channels]) – optional bias of shape (out_channels,). Default: None

stride (int or Tuple[int, int]) – distance between convolution centers. Default: 1

padding (int or Tuple[int, int]) – height/width of padding of zeroes around each image. Default: 0

dilation (int or Tuple[int, int]) – the spacing between kernel elements. Default: 1

mask (Tensor[batch_size, offset_groups * kernel_height * kernel_width, out_height, out_width]) – masks to be applied for each position in the convolution kernel. Default: None

- Returns

result of convolution

- Return type

Tensor[batch_sz, out_channels, out_h, out_w]

- Examples::

>>> input = torch.rand(4, 3, 10, 10) >>> kh, kw = 3, 3 >>> weight = torch.rand(5, 3, kh, kw) >>> # offset and mask should have the same spatial size as the output >>> # of the convolution. In this case, for an input of 10, stride of 1 >>> # and kernel size of 3, without padding, the output size is 8 >>> offset = torch.rand(4, 2 * kh * kw, 8, 8) >>> mask = torch.rand(4, kh * kw, 8, 8) >>> out = deform_conv2d(input, offset, weight, mask=mask) >>> print(out.shape) >>> # returns >>> torch.Size([4, 5, 8, 8])

-

torchvision.ops.generalized_box_iou(boxes1: torch.Tensor, boxes2: torch.Tensor) → torch.Tensor[source] Return generalized intersection-over-union (Jaccard index) between two sets of boxes.

Both sets of boxes are expected to be in

(x1, y1, x2, y2)format with0 <= x1 < x2and0 <= y1 < y2.- Parameters

boxes1 (Tensor[N, 4]) – first set of boxes

boxes2 (Tensor[M, 4]) – second set of boxes

- Returns

the NxM matrix containing the pairwise generalized IoU values for every element in boxes1 and boxes2

- Return type

Tensor[N, M]

-

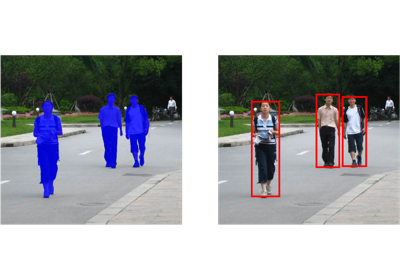

torchvision.ops.masks_to_boxes(masks: torch.Tensor) → torch.Tensor[source] Compute the bounding boxes around the provided masks.

Returns a [N, 4] tensor containing bounding boxes. The boxes are in

(x1, y1, x2, y2)format with0 <= x1 < x2and0 <= y1 < y2.- Parameters

masks (Tensor[N, H, W]) – masks to transform where N is the number of masks and (H, W) are the spatial dimensions.

- Returns

bounding boxes

- Return type

Tensor[N, 4]

Examples using

masks_to_boxes:

-

torchvision.ops.nms(boxes: torch.Tensor, scores: torch.Tensor, iou_threshold: float) → torch.Tensor[source] Performs non-maximum suppression (NMS) on the boxes according to their intersection-over-union (IoU).

NMS iteratively removes lower scoring boxes which have an IoU greater than iou_threshold with another (higher scoring) box.

If multiple boxes have the exact same score and satisfy the IoU criterion with respect to a reference box, the selected box is not guaranteed to be the same between CPU and GPU. This is similar to the behavior of argsort in PyTorch when repeated values are present.

- Parameters

boxes (Tensor[N, 4])) – boxes to perform NMS on. They are expected to be in

(x1, y1, x2, y2)format with0 <= x1 < x2and0 <= y1 < y2.scores (Tensor[N]) – scores for each one of the boxes

iou_threshold (float) – discards all overlapping boxes with IoU > iou_threshold

- Returns

int64 tensor with the indices of the elements that have been kept by NMS, sorted in decreasing order of scores

- Return type

Tensor

-

torchvision.ops.ps_roi_align(input: torch.Tensor, boxes: torch.Tensor, output_size: int, spatial_scale: float = 1.0, sampling_ratio: int = - 1) → torch.Tensor[source] Performs Position-Sensitive Region of Interest (RoI) Align operator mentioned in Light-Head R-CNN.

- Parameters

input (Tensor[N, C, H, W]) – The input tensor, i.e. a batch with

Nelements. Each element containsCfeature maps of dimensionsH x W.boxes (Tensor[K, 5] or List[Tensor[L, 4]]) – the box coordinates in (x1, y1, x2, y2) format where the regions will be taken from. The coordinate must satisfy

0 <= x1 < x2and0 <= y1 < y2. If a single Tensor is passed, then the first column should contain the index of the corresponding element in the batch, i.e. a number in[0, N - 1]. If a list of Tensors is passed, then each Tensor will correspond to the boxes for an element i in the batch.output_size (int or Tuple[int, int]) – the size of the output (in bins or pixels) after the pooling is performed, as (height, width).

spatial_scale (float) – a scaling factor that maps the input coordinates to the box coordinates. Default: 1.0

sampling_ratio (int) – number of sampling points in the interpolation grid used to compute the output value of each pooled output bin. If > 0, then exactly

sampling_ratio x sampling_ratiosampling points per bin are used. If <= 0, then an adaptive number of grid points are used (computed asceil(roi_width / output_width), and likewise for height). Default: -1

- Returns

The pooled RoIs

- Return type

Tensor[K, C / (output_size[0] * output_size[1]), output_size[0], output_size[1]]

-

torchvision.ops.ps_roi_pool(input: torch.Tensor, boxes: torch.Tensor, output_size: int, spatial_scale: float = 1.0) → torch.Tensor[source] Performs Position-Sensitive Region of Interest (RoI) Pool operator described in R-FCN

- Parameters

input (Tensor[N, C, H, W]) – The input tensor, i.e. a batch with

Nelements. Each element containsCfeature maps of dimensionsH x W.boxes (Tensor[K, 5] or List[Tensor[L, 4]]) – the box coordinates in (x1, y1, x2, y2) format where the regions will be taken from. The coordinate must satisfy

0 <= x1 < x2and0 <= y1 < y2. If a single Tensor is passed, then the first column should contain the index of the corresponding element in the batch, i.e. a number in[0, N - 1]. If a list of Tensors is passed, then each Tensor will correspond to the boxes for an element i in the batch.output_size (int or Tuple[int, int]) – the size of the output (in bins or pixels) after the pooling is performed, as (height, width).

spatial_scale (float) – a scaling factor that maps the input coordinates to the box coordinates. Default: 1.0

- Returns

The pooled RoIs.

- Return type

Tensor[K, C / (output_size[0] * output_size[1]), output_size[0], output_size[1]]

-

torchvision.ops.remove_small_boxes(boxes: torch.Tensor, min_size: float) → torch.Tensor[source] Remove boxes which contains at least one side smaller than min_size.

- Parameters

boxes (Tensor[N, 4]) – boxes in

(x1, y1, x2, y2)format with0 <= x1 < x2and0 <= y1 < y2.min_size (float) – minimum size

- Returns

indices of the boxes that have both sides larger than min_size

- Return type

Tensor[K]

-

torchvision.ops.roi_align(input: torch.Tensor, boxes: Union[torch.Tensor, List[torch.Tensor]], output_size: None, spatial_scale: float = 1.0, sampling_ratio: int = - 1, aligned: bool = False) → torch.Tensor[source] Performs Region of Interest (RoI) Align operator with average pooling, as described in Mask R-CNN.

- Parameters

input (Tensor[N, C, H, W]) – The input tensor, i.e. a batch with

Nelements. Each element containsCfeature maps of dimensionsH x W. If the tensor is quantized, we expect a batch size ofN == 1.boxes (Tensor[K, 5] or List[Tensor[L, 4]]) – the box coordinates in (x1, y1, x2, y2) format where the regions will be taken from. The coordinate must satisfy

0 <= x1 < x2and0 <= y1 < y2. If a single Tensor is passed, then the first column should contain the index of the corresponding element in the batch, i.e. a number in[0, N - 1]. If a list of Tensors is passed, then each Tensor will correspond to the boxes for an element i in the batch.output_size (int or Tuple[int, int]) – the size of the output (in bins or pixels) after the pooling is performed, as (height, width).

spatial_scale (float) – a scaling factor that maps the input coordinates to the box coordinates. Default: 1.0

sampling_ratio (int) – number of sampling points in the interpolation grid used to compute the output value of each pooled output bin. If > 0, then exactly

sampling_ratio x sampling_ratiosampling points per bin are used. If <= 0, then an adaptive number of grid points are used (computed asceil(roi_width / output_width), and likewise for height). Default: -1aligned (bool) – If False, use the legacy implementation. If True, pixel shift the box coordinates it by -0.5 for a better alignment with the two neighboring pixel indices. This version is used in Detectron2

- Returns

The pooled RoIs.

- Return type

Tensor[K, C, output_size[0], output_size[1]]

-

torchvision.ops.roi_pool(input: torch.Tensor, boxes: Union[torch.Tensor, List[torch.Tensor]], output_size: None, spatial_scale: float = 1.0) → torch.Tensor[source] Performs Region of Interest (RoI) Pool operator described in Fast R-CNN

- Parameters

input (Tensor[N, C, H, W]) – The input tensor, i.e. a batch with

Nelements. Each element containsCfeature maps of dimensionsH x W.boxes (Tensor[K, 5] or List[Tensor[L, 4]]) – the box coordinates in (x1, y1, x2, y2) format where the regions will be taken from. The coordinate must satisfy

0 <= x1 < x2and0 <= y1 < y2. If a single Tensor is passed, then the first column should contain the index of the corresponding element in the batch, i.e. a number in[0, N - 1]. If a list of Tensors is passed, then each Tensor will correspond to the boxes for an element i in the batch.output_size (int or Tuple[int, int]) – the size of the output after the cropping is performed, as (height, width)

spatial_scale (float) – a scaling factor that maps the input coordinates to the box coordinates. Default: 1.0

- Returns

The pooled RoIs.

- Return type

Tensor[K, C, output_size[0], output_size[1]]

-

torchvision.ops.sigmoid_focal_loss(inputs: torch.Tensor, targets: torch.Tensor, alpha: float = 0.25, gamma: float = 2, reduction: str = 'none')[source] Original implementation from https://github.com/facebookresearch/fvcore/blob/master/fvcore/nn/focal_loss.py . Loss used in RetinaNet for dense detection: https://arxiv.org/abs/1708.02002.

- Parameters

inputs – A float tensor of arbitrary shape. The predictions for each example.

targets – A float tensor with the same shape as inputs. Stores the binary classification label for each element in inputs (0 for the negative class and 1 for the positive class).

alpha – (optional) Weighting factor in range (0,1) to balance positive vs negative examples or -1 for ignore. Default = 0.25

gamma – Exponent of the modulating factor (1 - p_t) to balance easy vs hard examples.

reduction – ‘none’ | ‘mean’ | ‘sum’ ‘none’: No reduction will be applied to the output. ‘mean’: The output will be averaged. ‘sum’: The output will be summed.

- Returns

Loss tensor with the reduction option applied.

-

torchvision.ops.stochastic_depth(input: torch.Tensor, p: float, mode: str, training: bool = True) → torch.Tensor[source] Implements the Stochastic Depth from “Deep Networks with Stochastic Depth” used for randomly dropping residual branches of residual architectures.

- Parameters

input (Tensor[N, ..]) – The input tensor or arbitrary dimensions with the first one being its batch i.e. a batch with

Nrows.p (float) – probability of the input to be zeroed.

mode (str) –

"batch"or"row"."batch"randomly zeroes the entire input,"row"zeroes randomly selected rows from the batch.training – apply stochastic depth if is

True. Default:True

- Returns

The randomly zeroed tensor.

- Return type

Tensor[N, ..]

-

class

torchvision.ops.RoIAlign(output_size: None, spatial_scale: float, sampling_ratio: int, aligned: bool = False)[source] See

roi_align().

-

class

torchvision.ops.PSRoIAlign(output_size: int, spatial_scale: float, sampling_ratio: int)[source] See

ps_roi_align().

-

class

torchvision.ops.RoIPool(output_size: None, spatial_scale: float)[source] See

roi_pool().

-

class

torchvision.ops.PSRoIPool(output_size: int, spatial_scale: float)[source] See

ps_roi_pool().

-

class

torchvision.ops.DeformConv2d(in_channels: int, out_channels: int, kernel_size: int, stride: int = 1, padding: int = 0, dilation: int = 1, groups: int = 1, bias: bool = True)[source] See

deform_conv2d().

-

class

torchvision.ops.MultiScaleRoIAlign(featmap_names: List[str], output_size: Union[int, Tuple[int], List[int]], sampling_ratio: int, *, canonical_scale: int = 224, canonical_level: int = 4)[source] Multi-scale RoIAlign pooling, which is useful for detection with or without FPN.

It infers the scale of the pooling via the heuristics specified in eq. 1 of the Feature Pyramid Network paper. They keyword-only parameters

canonical_scaleandcanonical_levelcorrespond respectively to224andk0=4in eq. 1, and have the following meaning:canonical_levelis the target level of the pyramid from which to pool a region of interest withw x h = canonical_scale x canonical_scale.- Parameters

featmap_names (List[str]) – the names of the feature maps that will be used for the pooling.

output_size (List[Tuple[int, int]] or List[int]) – output size for the pooled region

sampling_ratio (int) – sampling ratio for ROIAlign

canonical_scale (int, optional) – canonical_scale for LevelMapper

canonical_level (int, optional) – canonical_level for LevelMapper

Examples:

>>> m = torchvision.ops.MultiScaleRoIAlign(['feat1', 'feat3'], 3, 2) >>> i = OrderedDict() >>> i['feat1'] = torch.rand(1, 5, 64, 64) >>> i['feat2'] = torch.rand(1, 5, 32, 32) # this feature won't be used in the pooling >>> i['feat3'] = torch.rand(1, 5, 16, 16) >>> # create some random bounding boxes >>> boxes = torch.rand(6, 4) * 256; boxes[:, 2:] += boxes[:, :2] >>> # original image size, before computing the feature maps >>> image_sizes = [(512, 512)] >>> output = m(i, [boxes], image_sizes) >>> print(output.shape) >>> torch.Size([6, 5, 3, 3])

-

class

torchvision.ops.FeaturePyramidNetwork(in_channels_list: List[int], out_channels: int, extra_blocks: Optional[torchvision.ops.feature_pyramid_network.ExtraFPNBlock] = None)[source] Module that adds a FPN from on top of a set of feature maps. This is based on “Feature Pyramid Network for Object Detection”.

The feature maps are currently supposed to be in increasing depth order.

The input to the model is expected to be an OrderedDict[Tensor], containing the feature maps on top of which the FPN will be added.

- Parameters

in_channels_list (list[int]) – number of channels for each feature map that is passed to the module

out_channels (int) – number of channels of the FPN representation

extra_blocks (ExtraFPNBlock or None) – if provided, extra operations will be performed. It is expected to take the fpn features, the original features and the names of the original features as input, and returns a new list of feature maps and their corresponding names

Examples:

>>> m = torchvision.ops.FeaturePyramidNetwork([10, 20, 30], 5) >>> # get some dummy data >>> x = OrderedDict() >>> x['feat0'] = torch.rand(1, 10, 64, 64) >>> x['feat2'] = torch.rand(1, 20, 16, 16) >>> x['feat3'] = torch.rand(1, 30, 8, 8) >>> # compute the FPN on top of x >>> output = m(x) >>> print([(k, v.shape) for k, v in output.items()]) >>> # returns >>> [('feat0', torch.Size([1, 5, 64, 64])), >>> ('feat2', torch.Size([1, 5, 16, 16])), >>> ('feat3', torch.Size([1, 5, 8, 8]))]

-

class

torchvision.ops.StochasticDepth(p: float, mode: str)[source] See

stochastic_depth().