torchvision.models

The models subpackage contains definitions of models for addressing different tasks, including: image classification, pixelwise semantic segmentation, object detection, instance segmentation, person keypoint detection and video classification.

Note

Backward compatibility is guaranteed for loading a serialized

state_dict to the model created using old PyTorch version.

On the contrary, loading entire saved models or serialized

ScriptModules (seralized using older versions of PyTorch)

may not preserve the historic behaviour. Refer to the following

documentation

Classification

The models subpackage contains definitions for the following model architectures for image classification:

Inception v3

ShuffleNet v2

You can construct a model with random weights by calling its constructor:

import torchvision.models as models

resnet18 = models.resnet18()

alexnet = models.alexnet()

vgg16 = models.vgg16()

squeezenet = models.squeezenet1_0()

densenet = models.densenet161()

inception = models.inception_v3()

googlenet = models.googlenet()

shufflenet = models.shufflenet_v2_x1_0()

mobilenet_v2 = models.mobilenet_v2()

mobilenet_v3_large = models.mobilenet_v3_large()

mobilenet_v3_small = models.mobilenet_v3_small()

resnext50_32x4d = models.resnext50_32x4d()

wide_resnet50_2 = models.wide_resnet50_2()

mnasnet = models.mnasnet1_0()

efficientnet_b0 = models.efficientnet_b0()

efficientnet_b1 = models.efficientnet_b1()

efficientnet_b2 = models.efficientnet_b2()

efficientnet_b3 = models.efficientnet_b3()

efficientnet_b4 = models.efficientnet_b4()

efficientnet_b5 = models.efficientnet_b5()

efficientnet_b6 = models.efficientnet_b6()

efficientnet_b7 = models.efficientnet_b7()

regnet_y_400mf = models.regnet_y_400mf()

regnet_y_800mf = models.regnet_y_800mf()

regnet_y_1_6gf = models.regnet_y_1_6gf()

regnet_y_3_2gf = models.regnet_y_3_2gf()

regnet_y_8gf = models.regnet_y_8gf()

regnet_y_16gf = models.regnet_y_16gf()

regnet_y_32gf = models.regnet_y_32gf()

regnet_x_400mf = models.regnet_x_400mf()

regnet_x_800mf = models.regnet_x_800mf()

regnet_x_1_6gf = models.regnet_x_1_6gf()

regnet_x_3_2gf = models.regnet_x_3_2gf()

regnet_x_8gf = models.regnet_x_8gf()

regnet_x_16gf = models.regnet_x_16gf()

regnet_x_32gf = models.regnet_x_32gf()

We provide pre-trained models, using the PyTorch torch.utils.model_zoo.

These can be constructed by passing pretrained=True:

import torchvision.models as models

resnet18 = models.resnet18(pretrained=True)

alexnet = models.alexnet(pretrained=True)

squeezenet = models.squeezenet1_0(pretrained=True)

vgg16 = models.vgg16(pretrained=True)

densenet = models.densenet161(pretrained=True)

inception = models.inception_v3(pretrained=True)

googlenet = models.googlenet(pretrained=True)

shufflenet = models.shufflenet_v2_x1_0(pretrained=True)

mobilenet_v2 = models.mobilenet_v2(pretrained=True)

mobilenet_v3_large = models.mobilenet_v3_large(pretrained=True)

mobilenet_v3_small = models.mobilenet_v3_small(pretrained=True)

resnext50_32x4d = models.resnext50_32x4d(pretrained=True)

wide_resnet50_2 = models.wide_resnet50_2(pretrained=True)

mnasnet = models.mnasnet1_0(pretrained=True)

efficientnet_b0 = models.efficientnet_b0(pretrained=True)

efficientnet_b1 = models.efficientnet_b1(pretrained=True)

efficientnet_b2 = models.efficientnet_b2(pretrained=True)

efficientnet_b3 = models.efficientnet_b3(pretrained=True)

efficientnet_b4 = models.efficientnet_b4(pretrained=True)

efficientnet_b5 = models.efficientnet_b5(pretrained=True)

efficientnet_b6 = models.efficientnet_b6(pretrained=True)

efficientnet_b7 = models.efficientnet_b7(pretrained=True)

regnet_y_400mf = models.regnet_y_400mf(pretrained=True)

regnet_y_800mf = models.regnet_y_800mf(pretrained=True)

regnet_y_1_6gf = models.regnet_y_1_6gf(pretrained=True)

regnet_y_3_2gf = models.regnet_y_3_2gf(pretrained=True)

regnet_y_8gf = models.regnet_y_8gf(pretrained=True)

regnet_y_16gf = models.regnet_y_16gf(pretrained=True)

regnet_y_32gf = models.regnet_y_32gf(pretrained=True)

regnet_x_400mf = models.regnet_x_400mf(pretrained=True)

regnet_x_800mf = models.regnet_x_800mf(pretrained=True)

regnet_x_1_6gf = models.regnet_x_1_6gf(pretrained=True)

regnet_x_3_2gf = models.regnet_x_3_2gf(pretrained=True)

regnet_x_8gf = models.regnet_x_8gf(pretrained=True)

regnet_x_16gf = models.regnet_x_16gf(pretrainedTrue)

regnet_x_32gf = models.regnet_x_32gf(pretrained=True)

Instancing a pre-trained model will download its weights to a cache directory.

This directory can be set using the TORCH_MODEL_ZOO environment variable. See

torch.utils.model_zoo.load_url() for details.

Some models use modules which have different training and evaluation

behavior, such as batch normalization. To switch between these modes, use

model.train() or model.eval() as appropriate. See

train() or eval() for details.

All pre-trained models expect input images normalized in the same way,

i.e. mini-batches of 3-channel RGB images of shape (3 x H x W),

where H and W are expected to be at least 224.

The images have to be loaded in to a range of [0, 1] and then normalized

using mean = [0.485, 0.456, 0.406] and std = [0.229, 0.224, 0.225].

You can use the following transform to normalize:

normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406],

std=[0.229, 0.224, 0.225])

An example of such normalization can be found in the imagenet example here

The process for obtaining the values of mean and std is roughly equivalent to:

import torch

from torchvision import datasets, transforms as T

transform = T.Compose([T.Resize(256), T.CenterCrop(224), T.ToTensor()])

dataset = datasets.ImageNet(".", split="train", transform=transform)

means = []

stds = []

for img in subset(dataset):

means.append(torch.mean(img))

stds.append(torch.std(img))

mean = torch.mean(torch.tensor(means))

std = torch.mean(torch.tensor(stds))

Unfortunately, the concrete subset that was used is lost. For more information see this discussion or these experiments.

The sizes of the EfficientNet models depend on the variant. For the exact input sizes check here

ImageNet 1-crop error rates

Model |

Acc@1 |

Acc@5 |

|---|---|---|

AlexNet |

56.522 |

79.066 |

VGG-11 |

69.020 |

88.628 |

VGG-13 |

69.928 |

89.246 |

VGG-16 |

71.592 |

90.382 |

VGG-19 |

72.376 |

90.876 |

VGG-11 with batch normalization |

70.370 |

89.810 |

VGG-13 with batch normalization |

71.586 |

90.374 |

VGG-16 with batch normalization |

73.360 |

91.516 |

VGG-19 with batch normalization |

74.218 |

91.842 |

ResNet-18 |

69.758 |

89.078 |

ResNet-34 |

73.314 |

91.420 |

ResNet-50 |

76.130 |

92.862 |

ResNet-101 |

77.374 |

93.546 |

ResNet-152 |

78.312 |

94.046 |

SqueezeNet 1.0 |

58.092 |

80.420 |

SqueezeNet 1.1 |

58.178 |

80.624 |

Densenet-121 |

74.434 |

91.972 |

Densenet-169 |

75.600 |

92.806 |

Densenet-201 |

76.896 |

93.370 |

Densenet-161 |

77.138 |

93.560 |

Inception v3 |

77.294 |

93.450 |

GoogleNet |

69.778 |

89.530 |

ShuffleNet V2 x1.0 |

69.362 |

88.316 |

ShuffleNet V2 x0.5 |

60.552 |

81.746 |

MobileNet V2 |

71.878 |

90.286 |

MobileNet V3 Large |

74.042 |

91.340 |

MobileNet V3 Small |

67.668 |

87.402 |

ResNeXt-50-32x4d |

77.618 |

93.698 |

ResNeXt-101-32x8d |

79.312 |

94.526 |

Wide ResNet-50-2 |

78.468 |

94.086 |

Wide ResNet-101-2 |

78.848 |

94.284 |

MNASNet 1.0 |

73.456 |

91.510 |

MNASNet 0.5 |

67.734 |

87.490 |

EfficientNet-B0 |

77.692 |

93.532 |

EfficientNet-B1 |

78.642 |

94.186 |

EfficientNet-B2 |

80.608 |

95.310 |

EfficientNet-B3 |

82.008 |

96.054 |

EfficientNet-B4 |

83.384 |

96.594 |

EfficientNet-B5 |

83.444 |

96.628 |

EfficientNet-B6 |

84.008 |

96.916 |

EfficientNet-B7 |

84.122 |

96.908 |

regnet_x_400mf |

72.834 |

90.950 |

regnet_x_800mf |

75.212 |

92.348 |

regnet_x_1_6gf |

77.040 |

93.440 |

regnet_x_3_2gf |

78.364 |

93.992 |

regnet_x_8gf |

79.344 |

94.686 |

regnet_x_16gf |

80.058 |

94.944 |

regnet_x_32gf |

80.622 |

95.248 |

regnet_y_400mf |

74.046 |

91.716 |

regnet_y_800mf |

76.420 |

93.136 |

regnet_y_1_6gf |

77.950 |

93.966 |

regnet_y_3_2gf |

78.948 |

94.576 |

regnet_y_8gf |

80.032 |

95.048 |

regnet_y_16gf |

80.424 |

95.240 |

regnet_y_32gf |

80.878 |

95.340 |

Alexnet

-

torchvision.models.alexnet(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.alexnet.AlexNet[source] AlexNet model architecture from the “One weird trick…” paper. The required minimum input size of the model is 63x63.

VGG

-

torchvision.models.vgg11(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.vgg.VGG[source] VGG 11-layer model (configuration “A”) from “Very Deep Convolutional Networks For Large-Scale Image Recognition”. The required minimum input size of the model is 32x32.

-

torchvision.models.vgg11_bn(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.vgg.VGG[source] VGG 11-layer model (configuration “A”) with batch normalization “Very Deep Convolutional Networks For Large-Scale Image Recognition”. The required minimum input size of the model is 32x32.

-

torchvision.models.vgg13(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.vgg.VGG[source] VGG 13-layer model (configuration “B”) “Very Deep Convolutional Networks For Large-Scale Image Recognition”. The required minimum input size of the model is 32x32.

-

torchvision.models.vgg13_bn(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.vgg.VGG[source] VGG 13-layer model (configuration “B”) with batch normalization “Very Deep Convolutional Networks For Large-Scale Image Recognition”. The required minimum input size of the model is 32x32.

-

torchvision.models.vgg16(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.vgg.VGG[source] VGG 16-layer model (configuration “D”) “Very Deep Convolutional Networks For Large-Scale Image Recognition”. The required minimum input size of the model is 32x32.

-

torchvision.models.vgg16_bn(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.vgg.VGG[source] VGG 16-layer model (configuration “D”) with batch normalization “Very Deep Convolutional Networks For Large-Scale Image Recognition”. The required minimum input size of the model is 32x32.

-

torchvision.models.vgg19(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.vgg.VGG[source] VGG 19-layer model (configuration “E”) “Very Deep Convolutional Networks For Large-Scale Image Recognition”. The required minimum input size of the model is 32x32.

-

torchvision.models.vgg19_bn(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.vgg.VGG[source] VGG 19-layer model (configuration ‘E’) with batch normalization “Very Deep Convolutional Networks For Large-Scale Image Recognition”. The required minimum input size of the model is 32x32.

ResNet

-

torchvision.models.resnet18(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.resnet.ResNet[source] ResNet-18 model from “Deep Residual Learning for Image Recognition”.

- Parameters

Examples using

resnet18:

-

torchvision.models.resnet34(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.resnet.ResNet[source] ResNet-34 model from “Deep Residual Learning for Image Recognition”.

-

torchvision.models.resnet50(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.resnet.ResNet[source] ResNet-50 model from “Deep Residual Learning for Image Recognition”.

-

torchvision.models.resnet101(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.resnet.ResNet[source] ResNet-101 model from “Deep Residual Learning for Image Recognition”.

-

torchvision.models.resnet152(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.resnet.ResNet[source] ResNet-152 model from “Deep Residual Learning for Image Recognition”.

SqueezeNet

-

torchvision.models.squeezenet1_0(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.squeezenet.SqueezeNet[source] SqueezeNet model architecture from the “SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size” paper. The required minimum input size of the model is 21x21.

-

torchvision.models.squeezenet1_1(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.squeezenet.SqueezeNet[source] SqueezeNet 1.1 model from the official SqueezeNet repo. SqueezeNet 1.1 has 2.4x less computation and slightly fewer parameters than SqueezeNet 1.0, without sacrificing accuracy. The required minimum input size of the model is 17x17.

DenseNet

-

torchvision.models.densenet121(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.densenet.DenseNet[source] Densenet-121 model from “Densely Connected Convolutional Networks”. The required minimum input size of the model is 29x29.

-

torchvision.models.densenet169(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.densenet.DenseNet[source] Densenet-169 model from “Densely Connected Convolutional Networks”. The required minimum input size of the model is 29x29.

-

torchvision.models.densenet161(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.densenet.DenseNet[source] Densenet-161 model from “Densely Connected Convolutional Networks”. The required minimum input size of the model is 29x29.

-

torchvision.models.densenet201(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.densenet.DenseNet[source] Densenet-201 model from “Densely Connected Convolutional Networks”. The required minimum input size of the model is 29x29.

Inception v3

-

torchvision.models.inception_v3(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.inception.Inception3[source] Inception v3 model architecture from “Rethinking the Inception Architecture for Computer Vision”. The required minimum input size of the model is 75x75.

Note

Important: In contrast to the other models the inception_v3 expects tensors with a size of N x 3 x 299 x 299, so ensure your images are sized accordingly.

- Parameters

pretrained (bool) – If True, returns a model pre-trained on ImageNet

progress (bool) – If True, displays a progress bar of the download to stderr

aux_logits (bool) – If True, add an auxiliary branch that can improve training. Default: True

transform_input (bool) – If True, preprocesses the input according to the method with which it was trained on ImageNet. Default: False

Note

This requires scipy to be installed

GoogLeNet

-

torchvision.models.googlenet(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.googlenet.GoogLeNet[source] GoogLeNet (Inception v1) model architecture from “Going Deeper with Convolutions”. The required minimum input size of the model is 15x15.

- Parameters

pretrained (bool) – If True, returns a model pre-trained on ImageNet

progress (bool) – If True, displays a progress bar of the download to stderr

aux_logits (bool) – If True, adds two auxiliary branches that can improve training. Default: False when pretrained is True otherwise True

transform_input (bool) – If True, preprocesses the input according to the method with which it was trained on ImageNet. Default: False

Note

This requires scipy to be installed

ShuffleNet v2

-

torchvision.models.shufflenet_v2_x0_5(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.shufflenetv2.ShuffleNetV2[source] Constructs a ShuffleNetV2 with 0.5x output channels, as described in “ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design”.

-

torchvision.models.shufflenet_v2_x1_0(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.shufflenetv2.ShuffleNetV2[source] Constructs a ShuffleNetV2 with 1.0x output channels, as described in “ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design”.

-

torchvision.models.shufflenet_v2_x1_5(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.shufflenetv2.ShuffleNetV2[source] Constructs a ShuffleNetV2 with 1.5x output channels, as described in “ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design”.

-

torchvision.models.shufflenet_v2_x2_0(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.shufflenetv2.ShuffleNetV2[source] Constructs a ShuffleNetV2 with 2.0x output channels, as described in “ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design”.

MobileNet v2

-

torchvision.models.mobilenet_v2(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.mobilenetv2.MobileNetV2[source] Constructs a MobileNetV2 architecture from “MobileNetV2: Inverted Residuals and Linear Bottlenecks”.

MobileNet v3

-

torchvision.models.mobilenet_v3_large(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.mobilenetv3.MobileNetV3[source] Constructs a large MobileNetV3 architecture from “Searching for MobileNetV3”.

-

torchvision.models.mobilenet_v3_small(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.mobilenetv3.MobileNetV3[source] Constructs a small MobileNetV3 architecture from “Searching for MobileNetV3”.

ResNext

-

torchvision.models.resnext50_32x4d(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.resnet.ResNet[source] ResNeXt-50 32x4d model from “Aggregated Residual Transformation for Deep Neural Networks”.

-

torchvision.models.resnext101_32x8d(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.resnet.ResNet[source] ResNeXt-101 32x8d model from “Aggregated Residual Transformation for Deep Neural Networks”.

Wide ResNet

-

torchvision.models.wide_resnet50_2(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.resnet.ResNet[source] Wide ResNet-50-2 model from “Wide Residual Networks”.

The model is the same as ResNet except for the bottleneck number of channels which is twice larger in every block. The number of channels in outer 1x1 convolutions is the same, e.g. last block in ResNet-50 has 2048-512-2048 channels, and in Wide ResNet-50-2 has 2048-1024-2048.

-

torchvision.models.wide_resnet101_2(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.resnet.ResNet[source] Wide ResNet-101-2 model from “Wide Residual Networks”.

The model is the same as ResNet except for the bottleneck number of channels which is twice larger in every block. The number of channels in outer 1x1 convolutions is the same, e.g. last block in ResNet-50 has 2048-512-2048 channels, and in Wide ResNet-50-2 has 2048-1024-2048.

MNASNet

-

torchvision.models.mnasnet0_5(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.mnasnet.MNASNet[source] MNASNet with depth multiplier of 0.5 from “MnasNet: Platform-Aware Neural Architecture Search for Mobile”.

-

torchvision.models.mnasnet0_75(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.mnasnet.MNASNet[source] MNASNet with depth multiplier of 0.75 from “MnasNet: Platform-Aware Neural Architecture Search for Mobile”.

-

torchvision.models.mnasnet1_0(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.mnasnet.MNASNet[source] MNASNet with depth multiplier of 1.0 from “MnasNet: Platform-Aware Neural Architecture Search for Mobile”.

-

torchvision.models.mnasnet1_3(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.mnasnet.MNASNet[source] MNASNet with depth multiplier of 1.3 from “MnasNet: Platform-Aware Neural Architecture Search for Mobile”.

EfficientNet

-

torchvision.models.efficientnet_b0(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.efficientnet.EfficientNet[source] Constructs a EfficientNet B0 architecture from “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks”.

-

torchvision.models.efficientnet_b1(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.efficientnet.EfficientNet[source] Constructs a EfficientNet B1 architecture from “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks”.

-

torchvision.models.efficientnet_b2(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.efficientnet.EfficientNet[source] Constructs a EfficientNet B2 architecture from “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks”.

-

torchvision.models.efficientnet_b3(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.efficientnet.EfficientNet[source] Constructs a EfficientNet B3 architecture from “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks”.

-

torchvision.models.efficientnet_b4(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.efficientnet.EfficientNet[source] Constructs a EfficientNet B4 architecture from “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks”.

-

torchvision.models.efficientnet_b5(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.efficientnet.EfficientNet[source] Constructs a EfficientNet B5 architecture from “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks”.

-

torchvision.models.efficientnet_b6(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.efficientnet.EfficientNet[source] Constructs a EfficientNet B6 architecture from “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks”.

-

torchvision.models.efficientnet_b7(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.efficientnet.EfficientNet[source] Constructs a EfficientNet B7 architecture from “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks”.

RegNet

-

torchvision.models.regnet_y_400mf(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.regnet.RegNet[source] Constructs a RegNetY_400MF architecture from “Designing Network Design Spaces”.

-

torchvision.models.regnet_y_800mf(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.regnet.RegNet[source] Constructs a RegNetY_800MF architecture from “Designing Network Design Spaces”.

-

torchvision.models.regnet_y_1_6gf(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.regnet.RegNet[source] Constructs a RegNetY_1.6GF architecture from “Designing Network Design Spaces”.

-

torchvision.models.regnet_y_3_2gf(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.regnet.RegNet[source] Constructs a RegNetY_3.2GF architecture from “Designing Network Design Spaces”.

-

torchvision.models.regnet_y_8gf(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.regnet.RegNet[source] Constructs a RegNetY_8GF architecture from “Designing Network Design Spaces”.

-

torchvision.models.regnet_y_16gf(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.regnet.RegNet[source] Constructs a RegNetY_16GF architecture from “Designing Network Design Spaces”.

-

torchvision.models.regnet_y_32gf(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.regnet.RegNet[source] Constructs a RegNetY_32GF architecture from “Designing Network Design Spaces”.

-

torchvision.models.regnet_x_400mf(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.regnet.RegNet[source] Constructs a RegNetX_400MF architecture from “Designing Network Design Spaces”.

-

torchvision.models.regnet_x_800mf(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.regnet.RegNet[source] Constructs a RegNetX_800MF architecture from “Designing Network Design Spaces”.

-

torchvision.models.regnet_x_1_6gf(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.regnet.RegNet[source] Constructs a RegNetX_1.6GF architecture from “Designing Network Design Spaces”.

-

torchvision.models.regnet_x_3_2gf(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.regnet.RegNet[source] Constructs a RegNetX_3.2GF architecture from “Designing Network Design Spaces”.

-

torchvision.models.regnet_x_8gf(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.regnet.RegNet[source] Constructs a RegNetX_8GF architecture from “Designing Network Design Spaces”.

-

torchvision.models.regnet_x_16gf(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.regnet.RegNet[source] Constructs a RegNetX_16GF architecture from “Designing Network Design Spaces”.

-

torchvision.models.regnet_x_32gf(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.regnet.RegNet[source] Constructs a RegNetX_32GF architecture from “Designing Network Design Spaces”.

Quantized Models

The following architectures provide support for INT8 quantized models. You can get a model with random weights by calling its constructor:

import torchvision.models as models

googlenet = models.quantization.googlenet()

inception_v3 = models.quantization.inception_v3()

mobilenet_v2 = models.quantization.mobilenet_v2()

mobilenet_v3_large = models.quantization.mobilenet_v3_large()

resnet18 = models.quantization.resnet18()

resnet50 = models.quantization.resnet50()

resnext101_32x8d = models.quantization.resnext101_32x8d()

shufflenet_v2_x0_5 = models.quantization.shufflenet_v2_x0_5()

shufflenet_v2_x1_0 = models.quantization.shufflenet_v2_x1_0()

shufflenet_v2_x1_5 = models.quantization.shufflenet_v2_x1_5()

shufflenet_v2_x2_0 = models.quantization.shufflenet_v2_x2_0()

Obtaining a pre-trained quantized model can be done with a few lines of code:

import torchvision.models as models

model = models.quantization.mobilenet_v2(pretrained=True, quantize=True)

model.eval()

# run the model with quantized inputs and weights

out = model(torch.rand(1, 3, 224, 224))

We provide pre-trained quantized weights for the following models:

Model |

Acc@1 |

Acc@5 |

|---|---|---|

MobileNet V2 |

71.658 |

90.150 |

MobileNet V3 Large |

73.004 |

90.858 |

ShuffleNet V2 |

68.360 |

87.582 |

ResNet 18 |

69.494 |

88.882 |

ResNet 50 |

75.920 |

92.814 |

ResNext 101 32x8d |

78.986 |

94.480 |

Inception V3 |

77.176 |

93.354 |

GoogleNet |

69.826 |

89.404 |

Semantic Segmentation

The models subpackage contains definitions for the following model architectures for semantic segmentation:

As with image classification models, all pre-trained models expect input images normalized in the same way.

The images have to be loaded in to a range of [0, 1] and then normalized using

mean = [0.485, 0.456, 0.406] and std = [0.229, 0.224, 0.225].

They have been trained on images resized such that their minimum size is 520.

For details on how to plot the masks of such models, you may refer to Semantic segmentation models.

The pre-trained models have been trained on a subset of COCO train2017, on the 20 categories that are

present in the Pascal VOC dataset. You can see more information on how the subset has been selected in

references/segmentation/coco_utils.py. The classes that the pre-trained model outputs are the following,

in order:

['__background__', 'aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'bus', 'car', 'cat', 'chair', 'cow', 'diningtable', 'dog', 'horse', 'motorbike', 'person', 'pottedplant', 'sheep', 'sofa', 'train', 'tvmonitor']

The accuracies of the pre-trained models evaluated on COCO val2017 are as follows

Network |

mean IoU |

global pixelwise acc |

|---|---|---|

FCN ResNet50 |

60.5 |

91.4 |

FCN ResNet101 |

63.7 |

91.9 |

DeepLabV3 ResNet50 |

66.4 |

92.4 |

DeepLabV3 ResNet101 |

67.4 |

92.4 |

DeepLabV3 MobileNetV3-Large |

60.3 |

91.2 |

LR-ASPP MobileNetV3-Large |

57.9 |

91.2 |

Fully Convolutional Networks

-

torchvision.models.segmentation.fcn_resnet50(pretrained: bool = False, progress: bool = True, num_classes: int = 21, aux_loss: Optional[bool] = None, **kwargs: Any) → torch.nn.modules.module.Module[source] Constructs a Fully-Convolutional Network model with a ResNet-50 backbone.

- Parameters

pretrained (bool) – If True, returns a model pre-trained on COCO train2017 which contains the same classes as Pascal VOC

progress (bool) – If True, displays a progress bar of the download to stderr

num_classes (int) – number of output classes of the model (including the background)

aux_loss (bool) – If True, it uses an auxiliary loss

Examples using

fcn_resnet50:

-

torchvision.models.segmentation.fcn_resnet101(pretrained: bool = False, progress: bool = True, num_classes: int = 21, aux_loss: Optional[bool] = None, **kwargs: Any) → torch.nn.modules.module.Module[source] Constructs a Fully-Convolutional Network model with a ResNet-101 backbone.

- Parameters

pretrained (bool) – If True, returns a model pre-trained on COCO train2017 which contains the same classes as Pascal VOC

progress (bool) – If True, displays a progress bar of the download to stderr

num_classes (int) – number of output classes of the model (including the background)

aux_loss (bool) – If True, it uses an auxiliary loss

DeepLabV3

-

torchvision.models.segmentation.deeplabv3_resnet50(pretrained: bool = False, progress: bool = True, num_classes: int = 21, aux_loss: Optional[bool] = None, **kwargs: Any) → torch.nn.modules.module.Module[source] Constructs a DeepLabV3 model with a ResNet-50 backbone.

- Parameters

pretrained (bool) – If True, returns a model pre-trained on COCO train2017 which contains the same classes as Pascal VOC

progress (bool) – If True, displays a progress bar of the download to stderr

num_classes (int) – number of output classes of the model (including the background)

aux_loss (bool) – If True, it uses an auxiliary loss

Examples using

deeplabv3_resnet50:

-

torchvision.models.segmentation.deeplabv3_resnet101(pretrained: bool = False, progress: bool = True, num_classes: int = 21, aux_loss: Optional[bool] = None, **kwargs: Any) → torch.nn.modules.module.Module[source] Constructs a DeepLabV3 model with a ResNet-101 backbone.

- Parameters

-

torchvision.models.segmentation.deeplabv3_mobilenet_v3_large(pretrained: bool = False, progress: bool = True, num_classes: int = 21, aux_loss: Optional[bool] = None, **kwargs: Any) → torch.nn.modules.module.Module[source] Constructs a DeepLabV3 model with a MobileNetV3-Large backbone.

- Parameters

pretrained (bool) – If True, returns a model pre-trained on COCO train2017 which contains the same classes as Pascal VOC

progress (bool) – If True, displays a progress bar of the download to stderr

num_classes (int) – number of output classes of the model (including the background)

aux_loss (bool) – If True, it uses an auxiliary loss

LR-ASPP

-

torchvision.models.segmentation.lraspp_mobilenet_v3_large(pretrained: bool = False, progress: bool = True, num_classes: int = 21, **kwargs: Any) → torch.nn.modules.module.Module[source] Constructs a Lite R-ASPP Network model with a MobileNetV3-Large backbone.

- Parameters

Examples using

lraspp_mobilenet_v3_large:

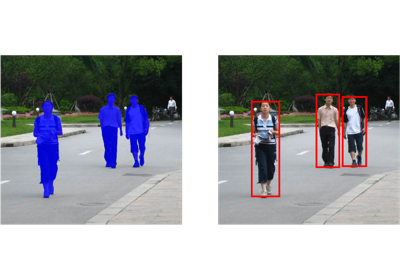

Object Detection, Instance Segmentation and Person Keypoint Detection

The models subpackage contains definitions for the following model architectures for detection:

The pre-trained models for detection, instance segmentation and keypoint detection are initialized with the classification models in torchvision.

The models expect a list of Tensor[C, H, W], in the range 0-1.

The models internally resize the images but the behaviour varies depending

on the model. Check the constructor of the models for more information. The

output format of such models is illustrated in Instance segmentation models.

For object detection and instance segmentation, the pre-trained models return the predictions of the following classes:

COCO_INSTANCE_CATEGORY_NAMES = [ '__background__', 'person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light', 'fire hydrant', 'N/A', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow', 'elephant', 'bear', 'zebra', 'giraffe', 'N/A', 'backpack', 'umbrella', 'N/A', 'N/A', 'handbag', 'tie', 'suitcase', 'frisbee', 'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard', 'tennis racket', 'bottle', 'N/A', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple', 'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch', 'potted plant', 'bed', 'N/A', 'dining table', 'N/A', 'N/A', 'toilet', 'N/A', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone', 'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'N/A', 'book', 'clock', 'vase', 'scissors', 'teddy bear', 'hair drier', 'toothbrush' ]

Here are the summary of the accuracies for the models trained on the instances set of COCO train2017 and evaluated on COCO val2017.

Network |

box AP |

mask AP |

keypoint AP |

|---|---|---|---|

Faster R-CNN ResNet-50 FPN |

37.0 |

||

Faster R-CNN MobileNetV3-Large FPN |

32.8 |

||

Faster R-CNN MobileNetV3-Large 320 FPN |

22.8 |

||

RetinaNet ResNet-50 FPN |

36.4 |

||

SSD300 VGG16 |

25.1 |

||

SSDlite320 MobileNetV3-Large |

21.3 |

||

Mask R-CNN ResNet-50 FPN |

37.9 |

34.6 |

For person keypoint detection, the accuracies for the pre-trained models are as follows

Network |

box AP |

mask AP |

keypoint AP |

|---|---|---|---|

Keypoint R-CNN ResNet-50 FPN |

54.6 |

65.0 |

For person keypoint detection, the pre-trained model return the keypoints in the following order:

COCO_PERSON_KEYPOINT_NAMES = [ 'nose', 'left_eye', 'right_eye', 'left_ear', 'right_ear', 'left_shoulder', 'right_shoulder', 'left_elbow', 'right_elbow', 'left_wrist', 'right_wrist', 'left_hip', 'right_hip', 'left_knee', 'right_knee', 'left_ankle', 'right_ankle' ]

Runtime characteristics

The implementations of the models for object detection, instance segmentation and keypoint detection are efficient.

In the following table, we use 8 GPUs to report the results. During training, we use a batch size of 2 per GPU for all models except SSD which uses 4 and SSDlite which uses 24. During testing a batch size of 1 is used.

For test time, we report the time for the model evaluation and postprocessing (including mask pasting in image), but not the time for computing the precision-recall.

Network |

train time (s / it) |

test time (s / it) |

memory (GB) |

|---|---|---|---|

Faster R-CNN ResNet-50 FPN |

0.2288 |

0.0590 |

5.2 |

Faster R-CNN MobileNetV3-Large FPN |

0.1020 |

0.0415 |

1.0 |

Faster R-CNN MobileNetV3-Large 320 FPN |

0.0978 |

0.0376 |

0.6 |

RetinaNet ResNet-50 FPN |

0.2514 |

0.0939 |

4.1 |

SSD300 VGG16 |

0.2093 |

0.0744 |

1.5 |

SSDlite320 MobileNetV3-Large |

0.1773 |

0.0906 |

1.5 |

Mask R-CNN ResNet-50 FPN |

0.2728 |

0.0903 |

5.4 |

Keypoint R-CNN ResNet-50 FPN |

0.3789 |

0.1242 |

6.8 |

Faster R-CNN

-

torchvision.models.detection.fasterrcnn_resnet50_fpn(pretrained=False, progress=True, num_classes=91, pretrained_backbone=True, trainable_backbone_layers=None, **kwargs)[source] Constructs a Faster R-CNN model with a ResNet-50-FPN backbone.

Reference: “Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks”.

The input to the model is expected to be a list of tensors, each of shape

[C, H, W], one for each image, and should be in0-1range. Different images can have different sizes.The behavior of the model changes depending if it is in training or evaluation mode.

During training, the model expects both the input tensors, as well as a targets (list of dictionary), containing:

boxes (

FloatTensor[N, 4]): the ground-truth boxes in[x1, y1, x2, y2]format, with0 <= x1 < x2 <= Wand0 <= y1 < y2 <= H.labels (

Int64Tensor[N]): the class label for each ground-truth box

The model returns a

Dict[Tensor]during training, containing the classification and regression losses for both the RPN and the R-CNN.During inference, the model requires only the input tensors, and returns the post-processed predictions as a

List[Dict[Tensor]], one for each input image. The fields of theDictare as follows, whereNis the number of detections:boxes (

FloatTensor[N, 4]): the predicted boxes in[x1, y1, x2, y2]format, with0 <= x1 < x2 <= Wand0 <= y1 < y2 <= H.labels (

Int64Tensor[N]): the predicted labels for each detectionscores (

Tensor[N]): the scores of each detection

For more details on the output, you may refer to Instance segmentation models.

Faster R-CNN is exportable to ONNX for a fixed batch size with inputs images of fixed size.

Example:

>>> model = torchvision.models.detection.fasterrcnn_resnet50_fpn(pretrained=True) >>> # For training >>> images, boxes = torch.rand(4, 3, 600, 1200), torch.rand(4, 11, 4) >>> labels = torch.randint(1, 91, (4, 11)) >>> images = list(image for image in images) >>> targets = [] >>> for i in range(len(images)): >>> d = {} >>> d['boxes'] = boxes[i] >>> d['labels'] = labels[i] >>> targets.append(d) >>> output = model(images, targets) >>> # For inference >>> model.eval() >>> x = [torch.rand(3, 300, 400), torch.rand(3, 500, 400)] >>> predictions = model(x) >>> >>> # optionally, if you want to export the model to ONNX: >>> torch.onnx.export(model, x, "faster_rcnn.onnx", opset_version = 11)

- Parameters

pretrained (bool) – If True, returns a model pre-trained on COCO train2017

progress (bool) – If True, displays a progress bar of the download to stderr

num_classes (int) – number of output classes of the model (including the background)

pretrained_backbone (bool) – If True, returns a model with backbone pre-trained on Imagenet

trainable_backbone_layers (int) – number of trainable (not frozen) resnet layers starting from final block. Valid values are between 0 and 5, with 5 meaning all backbone layers are trainable.

Examples using

fasterrcnn_resnet50_fpn:

-

torchvision.models.detection.fasterrcnn_mobilenet_v3_large_fpn(pretrained=False, progress=True, num_classes=91, pretrained_backbone=True, trainable_backbone_layers=None, **kwargs)[source] Constructs a high resolution Faster R-CNN model with a MobileNetV3-Large FPN backbone. It works similarly to Faster R-CNN with ResNet-50 FPN backbone. See

fasterrcnn_resnet50_fpn()for more details.Example:

>>> model = torchvision.models.detection.fasterrcnn_mobilenet_v3_large_fpn(pretrained=True) >>> model.eval() >>> x = [torch.rand(3, 300, 400), torch.rand(3, 500, 400)] >>> predictions = model(x)

- Parameters

pretrained (bool) – If True, returns a model pre-trained on COCO train2017

progress (bool) – If True, displays a progress bar of the download to stderr

num_classes (int) – number of output classes of the model (including the background)

pretrained_backbone (bool) – If True, returns a model with backbone pre-trained on Imagenet

trainable_backbone_layers (int) – number of trainable (not frozen) resnet layers starting from final block. Valid values are between 0 and 6, with 6 meaning all backbone layers are trainable.

-

torchvision.models.detection.fasterrcnn_mobilenet_v3_large_320_fpn(pretrained=False, progress=True, num_classes=91, pretrained_backbone=True, trainable_backbone_layers=None, **kwargs)[source] Constructs a low resolution Faster R-CNN model with a MobileNetV3-Large FPN backbone tunned for mobile use-cases. It works similarly to Faster R-CNN with ResNet-50 FPN backbone. See

fasterrcnn_resnet50_fpn()for more details.Example:

>>> model = torchvision.models.detection.fasterrcnn_mobilenet_v3_large_320_fpn(pretrained=True) >>> model.eval() >>> x = [torch.rand(3, 300, 400), torch.rand(3, 500, 400)] >>> predictions = model(x)

- Parameters

pretrained (bool) – If True, returns a model pre-trained on COCO train2017

progress (bool) – If True, displays a progress bar of the download to stderr

num_classes (int) – number of output classes of the model (including the background)

pretrained_backbone (bool) – If True, returns a model with backbone pre-trained on Imagenet

trainable_backbone_layers (int) – number of trainable (not frozen) resnet layers starting from final block. Valid values are between 0 and 6, with 6 meaning all backbone layers are trainable.

RetinaNet

-

torchvision.models.detection.retinanet_resnet50_fpn(pretrained=False, progress=True, num_classes=91, pretrained_backbone=True, trainable_backbone_layers=None, **kwargs)[source] Constructs a RetinaNet model with a ResNet-50-FPN backbone.

Reference: “Focal Loss for Dense Object Detection”.

The input to the model is expected to be a list of tensors, each of shape

[C, H, W], one for each image, and should be in0-1range. Different images can have different sizes.The behavior of the model changes depending if it is in training or evaluation mode.

During training, the model expects both the input tensors, as well as a targets (list of dictionary), containing:

boxes (

FloatTensor[N, 4]): the ground-truth boxes in[x1, y1, x2, y2]format, with0 <= x1 < x2 <= Wand0 <= y1 < y2 <= H.labels (

Int64Tensor[N]): the class label for each ground-truth box

The model returns a

Dict[Tensor]during training, containing the classification and regression losses.During inference, the model requires only the input tensors, and returns the post-processed predictions as a

List[Dict[Tensor]], one for each input image. The fields of theDictare as follows, whereNis the number of detections:boxes (

FloatTensor[N, 4]): the predicted boxes in[x1, y1, x2, y2]format, with0 <= x1 < x2 <= Wand0 <= y1 < y2 <= H.labels (

Int64Tensor[N]): the predicted labels for each detectionscores (

Tensor[N]): the scores of each detection

For more details on the output, you may refer to Instance segmentation models.

Example:

>>> model = torchvision.models.detection.retinanet_resnet50_fpn(pretrained=True) >>> model.eval() >>> x = [torch.rand(3, 300, 400), torch.rand(3, 500, 400)] >>> predictions = model(x)

- Parameters

pretrained (bool) – If True, returns a model pre-trained on COCO train2017

progress (bool) – If True, displays a progress bar of the download to stderr

num_classes (int) – number of output classes of the model (including the background)

pretrained_backbone (bool) – If True, returns a model with backbone pre-trained on Imagenet

trainable_backbone_layers (int) – number of trainable (not frozen) resnet layers starting from final block. Valid values are between 0 and 5, with 5 meaning all backbone layers are trainable.

Examples using

retinanet_resnet50_fpn:

SSD

-

torchvision.models.detection.ssd300_vgg16(pretrained: bool = False, progress: bool = True, num_classes: int = 91, pretrained_backbone: bool = True, trainable_backbone_layers: Optional[int] = None, **kwargs: Any)[source] Constructs an SSD model with input size 300x300 and a VGG16 backbone.

Reference: “SSD: Single Shot MultiBox Detector”.

The input to the model is expected to be a list of tensors, each of shape [C, H, W], one for each image, and should be in 0-1 range. Different images can have different sizes but they will be resized to a fixed size before passing it to the backbone.

The behavior of the model changes depending if it is in training or evaluation mode.

During training, the model expects both the input tensors, as well as a targets (list of dictionary), containing:

boxes (

FloatTensor[N, 4]): the ground-truth boxes in[x1, y1, x2, y2]format, with0 <= x1 < x2 <= Wand0 <= y1 < y2 <= H.labels (Int64Tensor[N]): the class label for each ground-truth box

The model returns a Dict[Tensor] during training, containing the classification and regression losses.

During inference, the model requires only the input tensors, and returns the post-processed predictions as a List[Dict[Tensor]], one for each input image. The fields of the Dict are as follows, where

Nis the number of detections:boxes (

FloatTensor[N, 4]): the predicted boxes in[x1, y1, x2, y2]format, with0 <= x1 < x2 <= Wand0 <= y1 < y2 <= H.labels (Int64Tensor[N]): the predicted labels for each detection

scores (Tensor[N]): the scores for each detection

Example

>>> model = torchvision.models.detection.ssd300_vgg16(pretrained=True) >>> model.eval() >>> x = [torch.rand(3, 300, 300), torch.rand(3, 500, 400)] >>> predictions = model(x)

- Parameters

pretrained (bool) – If True, returns a model pre-trained on COCO train2017

progress (bool) – If True, displays a progress bar of the download to stderr

num_classes (int) – number of output classes of the model (including the background)

pretrained_backbone (bool) – If True, returns a model with backbone pre-trained on Imagenet

trainable_backbone_layers (int) – number of trainable (not frozen) resnet layers starting from final block. Valid values are between 0 and 5, with 5 meaning all backbone layers are trainable.

Examples using

ssd300_vgg16:

SSDlite

-

torchvision.models.detection.ssdlite320_mobilenet_v3_large(pretrained: bool = False, progress: bool = True, num_classes: int = 91, pretrained_backbone: bool = False, trainable_backbone_layers: Optional[int] = None, norm_layer: Optional[Callable[[…], torch.nn.modules.module.Module]] = None, **kwargs: Any)[source] Constructs an SSDlite model with input size 320x320 and a MobileNetV3 Large backbone, as described at “Searching for MobileNetV3” and “MobileNetV2: Inverted Residuals and Linear Bottlenecks”.

See

ssd300_vgg16()for more details.Example

>>> model = torchvision.models.detection.ssdlite320_mobilenet_v3_large(pretrained=True) >>> model.eval() >>> x = [torch.rand(3, 320, 320), torch.rand(3, 500, 400)] >>> predictions = model(x)

- Parameters

pretrained (bool) – If True, returns a model pre-trained on COCO train2017

progress (bool) – If True, displays a progress bar of the download to stderr

num_classes (int) – number of output classes of the model (including the background)

pretrained_backbone (bool) – If True, returns a model with backbone pre-trained on Imagenet

trainable_backbone_layers (int) – number of trainable (not frozen) resnet layers starting from final block. Valid values are between 0 and 6, with 6 meaning all backbone layers are trainable.

norm_layer (callable, optional) – Module specifying the normalization layer to use.

Examples using

ssdlite320_mobilenet_v3_large:

Mask R-CNN

-

torchvision.models.detection.maskrcnn_resnet50_fpn(pretrained=False, progress=True, num_classes=91, pretrained_backbone=True, trainable_backbone_layers=None, **kwargs)[source] Constructs a Mask R-CNN model with a ResNet-50-FPN backbone.

Reference: “Mask R-CNN”.

The input to the model is expected to be a list of tensors, each of shape

[C, H, W], one for each image, and should be in0-1range. Different images can have different sizes.The behavior of the model changes depending if it is in training or evaluation mode.

During training, the model expects both the input tensors, as well as a targets (list of dictionary), containing:

boxes (

FloatTensor[N, 4]): the ground-truth boxes in[x1, y1, x2, y2]format, with0 <= x1 < x2 <= Wand0 <= y1 < y2 <= H.labels (

Int64Tensor[N]): the class label for each ground-truth boxmasks (

UInt8Tensor[N, H, W]): the segmentation binary masks for each instance

The model returns a

Dict[Tensor]during training, containing the classification and regression losses for both the RPN and the R-CNN, and the mask loss.During inference, the model requires only the input tensors, and returns the post-processed predictions as a

List[Dict[Tensor]], one for each input image. The fields of theDictare as follows, whereNis the number of detected instances:boxes (

FloatTensor[N, 4]): the predicted boxes in[x1, y1, x2, y2]format, with0 <= x1 < x2 <= Wand0 <= y1 < y2 <= H.labels (

Int64Tensor[N]): the predicted labels for each instancescores (

Tensor[N]): the scores or each instancemasks (

UInt8Tensor[N, 1, H, W]): the predicted masks for each instance, in0-1range. In order to obtain the final segmentation masks, the soft masks can be thresholded, generally with a value of 0.5 (mask >= 0.5)

For more details on the output and on how to plot the masks, you may refer to Instance segmentation models.

Mask R-CNN is exportable to ONNX for a fixed batch size with inputs images of fixed size.

Example:

>>> model = torchvision.models.detection.maskrcnn_resnet50_fpn(pretrained=True) >>> model.eval() >>> x = [torch.rand(3, 300, 400), torch.rand(3, 500, 400)] >>> predictions = model(x) >>> >>> # optionally, if you want to export the model to ONNX: >>> torch.onnx.export(model, x, "mask_rcnn.onnx", opset_version = 11)

- Parameters

pretrained (bool) – If True, returns a model pre-trained on COCO train2017

progress (bool) – If True, displays a progress bar of the download to stderr

num_classes (int) – number of output classes of the model (including the background)

pretrained_backbone (bool) – If True, returns a model with backbone pre-trained on Imagenet

trainable_backbone_layers (int) – number of trainable (not frozen) resnet layers starting from final block. Valid values are between 0 and 5, with 5 meaning all backbone layers are trainable.

Examples using

maskrcnn_resnet50_fpn:

Keypoint R-CNN

-

torchvision.models.detection.keypointrcnn_resnet50_fpn(pretrained=False, progress=True, num_classes=2, num_keypoints=17, pretrained_backbone=True, trainable_backbone_layers=None, **kwargs)[source] Constructs a Keypoint R-CNN model with a ResNet-50-FPN backbone.

Reference: “Mask R-CNN”.

The input to the model is expected to be a list of tensors, each of shape

[C, H, W], one for each image, and should be in0-1range. Different images can have different sizes.The behavior of the model changes depending if it is in training or evaluation mode.

During training, the model expects both the input tensors, as well as a targets (list of dictionary), containing:

boxes (

FloatTensor[N, 4]): the ground-truth boxes in[x1, y1, x2, y2]format, with0 <= x1 < x2 <= Wand0 <= y1 < y2 <= H.labels (

Int64Tensor[N]): the class label for each ground-truth boxkeypoints (

FloatTensor[N, K, 3]): theKkeypoints location for each of theNinstances, in the format[x, y, visibility], wherevisibility=0means that the keypoint is not visible.

The model returns a

Dict[Tensor]during training, containing the classification and regression losses for both the RPN and the R-CNN, and the keypoint loss.During inference, the model requires only the input tensors, and returns the post-processed predictions as a

List[Dict[Tensor]], one for each input image. The fields of theDictare as follows, whereNis the number of detected instances:boxes (

FloatTensor[N, 4]): the predicted boxes in[x1, y1, x2, y2]format, with0 <= x1 < x2 <= Wand0 <= y1 < y2 <= H.labels (

Int64Tensor[N]): the predicted labels for each instancescores (

Tensor[N]): the scores or each instancekeypoints (

FloatTensor[N, K, 3]): the locations of the predicted keypoints, in[x, y, v]format.

For more details on the output, you may refer to Instance segmentation models.

Keypoint R-CNN is exportable to ONNX for a fixed batch size with inputs images of fixed size.

Example:

>>> model = torchvision.models.detection.keypointrcnn_resnet50_fpn(pretrained=True) >>> model.eval() >>> x = [torch.rand(3, 300, 400), torch.rand(3, 500, 400)] >>> predictions = model(x) >>> >>> # optionally, if you want to export the model to ONNX: >>> torch.onnx.export(model, x, "keypoint_rcnn.onnx", opset_version = 11)

- Parameters

pretrained (bool) – If True, returns a model pre-trained on COCO train2017

progress (bool) – If True, displays a progress bar of the download to stderr

num_classes (int) – number of output classes of the model (including the background)

num_keypoints (int) – number of keypoints, default 17

pretrained_backbone (bool) – If True, returns a model with backbone pre-trained on Imagenet

trainable_backbone_layers (int) – number of trainable (not frozen) resnet layers starting from final block. Valid values are between 0 and 5, with 5 meaning all backbone layers are trainable.

Examples using

keypointrcnn_resnet50_fpn:

Video classification

We provide models for action recognition pre-trained on Kinetics-400.

They have all been trained with the scripts provided in references/video_classification.

All pre-trained models expect input images normalized in the same way,

i.e. mini-batches of 3-channel RGB videos of shape (3 x T x H x W),

where H and W are expected to be 112, and T is a number of video frames in a clip.

The images have to be loaded in to a range of [0, 1] and then normalized

using mean = [0.43216, 0.394666, 0.37645] and std = [0.22803, 0.22145, 0.216989].

Note

The normalization parameters are different from the image classification ones, and correspond to the mean and std from Kinetics-400.

Note

For now, normalization code can be found in references/video_classification/transforms.py,

see the Normalize function there. Note that it differs from standard normalization for

images because it assumes the video is 4d.

Kinetics 1-crop accuracies for clip length 16 (16x112x112)

Network |

Clip acc@1 |

Clip acc@5 |

|---|---|---|

ResNet 3D 18 |

52.75 |

75.45 |

ResNet MC 18 |

53.90 |

76.29 |

ResNet (2+1)D |

57.50 |

78.81 |

ResNet 3D

-

torchvision.models.video.r3d_18(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.video.resnet.VideoResNet[source] Construct 18 layer Resnet3D model as in https://arxiv.org/abs/1711.11248

ResNet Mixed Convolution

-

torchvision.models.video.mc3_18(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.video.resnet.VideoResNet[source] Constructor for 18 layer Mixed Convolution network as in https://arxiv.org/abs/1711.11248

ResNet (2+1)D

-

torchvision.models.video.r2plus1d_18(pretrained: bool = False, progress: bool = True, **kwargs: Any) → torchvision.models.video.resnet.VideoResNet[source] Constructor for the 18 layer deep R(2+1)D network as in https://arxiv.org/abs/1711.11248