Note

PyTorch Mobile is no longer actively supported. Please check out ExecuTorch, PyTorch’s all-new on-device inference library.

PyTorch Mobile

There is a growing need to execute ML models on edge devices to reduce latency, preserve privacy, and enable new interactive use cases.

The PyTorch Mobile runtime beta release allows you to go seamlessly from training a model to deploying it while staying entirely within the PyTorch ecosystem. It provides an end-to-end workflow that simplifies the path from research to production on mobile devices. It also paves the way for privacy-preserving features via federated learning techniques.

PyTorch Mobile is currently in beta and is already in wide-scale production use. It will soon be available as a stable release once the APIs are locked down.

Key features

- Available for iOS, Android and Linux

- Provides APIs that cover common preprocessing and integration tasks needed for incorporating ML in mobile applications

- Support for tracing and scripting via TorchScript IR

- Support for XNNPACK floating-point kernel libraries for Arm CPUs

- Integration of QNNPACK for 8-bit quantized kernels, including support for per-channel quantization, dynamic quantization, and more

- Provides an efficient mobile interpreter on Android and iOS. Also supports build-level optimization and selective compilation based on the operators your application needs (i.e., the final binary size of the app is determined by the operators it actually uses).

- Streamlined model optimization via optimize_for_mobile

- Support for hardware backends such as GPU, DSP, and NPU will be available soon in beta
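The TorchScript, quantization, and QNNPACK items above can be illustrated with a short Python sketch. It is a minimal example under stated assumptions: a recent PyTorch release that provides `torch.ao.quantization`, and a hypothetical `TinyClassifier` model invented only for illustration. It contrasts tracing with scripting on a model whose `forward` branches on tensor values, then applies dynamic quantization to the `nn.Linear` layers, selecting the QNNPACK engine only if the local build includes it.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)


class TinyClassifier(nn.Module):
    """Hypothetical toy model, used only to illustrate tracing vs. scripting."""

    def __init__(self) -> None:
        super().__init__()
        self.fc1 = nn.Linear(16, 32)
        self.fc2 = nn.Linear(32, 4)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Data-dependent control flow: a trace records only the branch taken
        # for the example input, while scripting keeps the `if` statement.
        if x.sum() > 0:
            h = torch.relu(self.fc1(x))
        else:
            h = torch.tanh(self.fc1(x))
        return self.fc2(h)


model = TinyClassifier().eval()
example = torch.ones(1, 16)  # positive sum -> ReLU branch
neg = -torch.ones(1, 16)     # negative sum -> tanh branch

# Tracing: run the model once on `example` and record the operators that ran.
# PyTorch emits a TracerWarning here because of the data-dependent `if`.
traced = torch.jit.trace(model, example)

# Scripting: compile the Python source of `forward`, control flow included.
scripted = torch.jit.script(model)

# Dynamic quantization: nn.Linear weights are stored as int8 and activations
# are quantized on the fly. QNNPACK is the quantized engine used on mobile;
# select it only if this PyTorch build ships it.
if "qnnpack" in torch.backends.quantized.supported_engines:
    torch.backends.quantized.engine = "qnnpack"
quantized = torch.ao.quantization.quantize_dynamic(
    model, {nn.Linear}, dtype=torch.qint8
)
scripted_q = torch.jit.script(quantized)

with torch.no_grad():
    # The scripted module follows the tanh branch for `neg`, like eager mode.
    print(torch.allclose(scripted(neg), model(neg)))
    # The traced module still applies the ReLU branch it recorded.
    print(torch.allclose(traced(neg), model(neg)))
    print(scripted_q(example).shape)
```

The comparison on `neg` shows why scripting is usually the safer choice when `forward` contains control flow that depends on tensor values: the traced module silently keeps whichever branch it saw during tracing.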

Prototypes

We have launched the following features in prototype, available in the PyTorch nightly releases, and would love to get your feedback on the PyTorch forums:

- GPU support on iOS via Metal

- GPU support on Android via Vulkan

- DSP and NPU support on Android via Google NNAPI

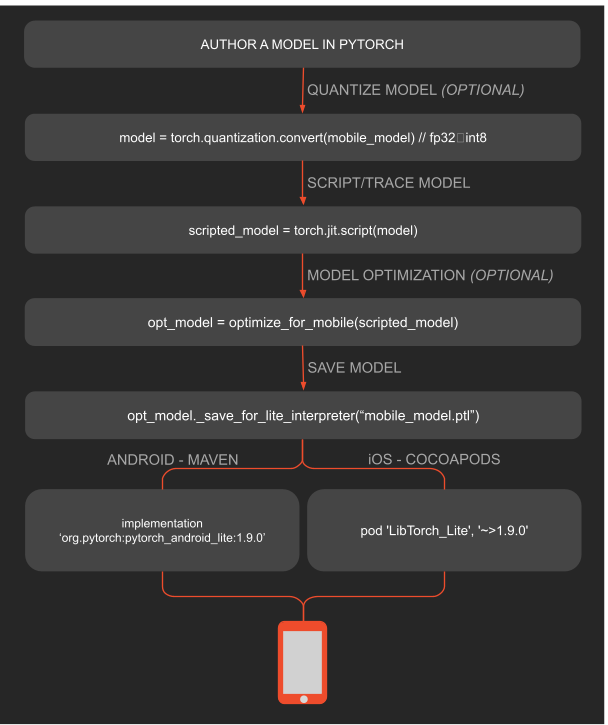

Deployment workflow

A typical workflow from training to mobile deployment with the optional model optimization steps is outlined in the following figure.
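The workflow in the figure can be sketched in Python roughly as follows. This is a minimal sketch under several assumptions: a recent PyTorch build, an untrained toy CNN standing in for a trained model (hypothetical, chosen only for illustration), an arbitrary `model.ptl` file name, and the private helper `torch.jit.mobile._load_for_lite_interpreter`, used here only to sanity-check the saved file on a desktop machine. On a device, the saved file would instead be loaded by the iOS or Android runtime.

```python
import tempfile
from pathlib import Path

import torch
import torch.nn as nn
from torch.jit.mobile import _load_for_lite_interpreter  # private helper
from torch.utils.mobile_optimizer import optimize_for_mobile

torch.manual_seed(0)

# Step 1 (training) is out of scope: this untrained toy CNN is a hypothetical
# stand-in for a trained model, switched to eval mode for export.
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.BatchNorm2d(8),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(8, 10),
).eval()
example = torch.randn(1, 3, 32, 32)

# Step 2: convert to TorchScript. torch.jit.trace(model, example) would also
# work here, since this model has no data-dependent control flow.
scripted = torch.jit.script(model)

# Step 3 (optional): mobile-specific rewrites such as Conv2d + BatchNorm
# folding and, when XNNPACK is available, prepacked XNNPACK operators.
optimized = optimize_for_mobile(scripted)

# Names of the operators the model uses; a list like this is the input to a
# selective build of the mobile runtime.
ops = torch.jit.export_opnames(optimized)

with tempfile.TemporaryDirectory() as tmp:
    # Step 4: save in the format loaded by the mobile (lite) interpreter.
    path = Path(tmp) / "model.ptl"
    optimized._save_for_lite_interpreter(str(path))

    # Desktop sanity check: reload with the lite interpreter and compare.
    lite = _load_for_lite_interpreter(str(path))
    with torch.no_grad():
        reference = model(example)
        output = lite(example)

print(sorted(ops))
print(torch.allclose(output, reference, atol=1e-4))
```

Scripting is used in step 2 only because it needs no example input; both scripting and tracing yield a TorchScript module that `optimize_for_mobile` accepts.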

Examples to get you started

- PyTorch Mobile Runtime for iOS

- PyTorch Mobile Runtime for Android

- PyTorch Mobile Recipes in Tutorials

- Image Segmentation DeepLabV3 on iOS

- Image Segmentation DeepLabV3 on Android

- D2Go Object Detection on iOS

- D2Go Object Detection on Android

- PyTorchVideo on iOS

- PyTorchVideo on Android

- Speech Recognition on iOS

- Speech Recognition on Android

- Question Answering on iOS

- Question Answering on Android

Demo apps

Our new demo apps also include examples of image segmentation, object detection, neural machine translation, question answering, and vision transformers. They are available on both iOS and Android: