Building ExecuTorch LLaMA Android Demo App

This app is a LLaMA chat demo that showcases local, on-device inference with ExecuTorch.

Prerequisites

If you haven’t done so already, set up your ExecuTorch repo and dev environment by following the Setting up ExecuTorch guide.

Install Java 17 JDK.

Install the Android SDK API Level 34 and Android NDK 25.0.8775105.

If you have Android Studio set up, you can install them from the following settings pages:

Android Studio Settings -> Language & Frameworks -> Android SDK -> SDK Platforms -> Check the row with API Level 34.

Android Studio Settings -> Language & Frameworks -> Android SDK -> SDK Tools -> Check NDK (Side by side) row.

Alternatively, you can follow this guide to set up Java/SDK/NDK with the CLI.

Supported Host OS: CentOS, macOS Sonoma on Apple Silicon.

Note: This demo app and tutorial have only been validated with the arm64-v8a ABI and NDK 25.0.8775105.
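Before moving on, it can help to confirm that the JDK on your PATH is version 17. The following is a minimal sketch, not part of ExecuTorch: the function names `java_major_version` and `check_java_17` are hypothetical, and the banner printed by `java -version` is assumed to follow the usual `version "X.Y.Z"` pattern.

```shell
# Hypothetical helpers, not part of ExecuTorch.

# Print the major version found in a `java -version` banner line.
# Example banner: openjdk version "17.0.9" 2023-10-17
java_major_version() {
  ver=$(printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p')
  case "$ver" in
    1.*) ver=${ver#1.} ;;  # legacy scheme, e.g. 1.8.0_292 means Java 8
  esac
  # Drop everything from the first '.', '_' or '-' onward.
  printf '%s\n' "${ver%%[._-]*}"
}

# Return success only if the `java` on PATH reports major version 17.
check_java_17() {
  command -v java >/dev/null 2>&1 || { echo "java not found on PATH" >&2; return 1; }
  # `java -version` writes its banner to stderr.
  banner=$(java -version 2>&1 | grep 'version "' | head -n 1)
  major=$(java_major_version "$banner")
  [ "$major" = "17" ] || { echo "expected Java 17, found: ${major:-unknown}" >&2; return 1; }
}
```

Calling `check_java_17` prints nothing and returns 0 when the JDK matches; otherwise it reports what it found on stderr.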

Getting models

Please refer to the ExecuTorch Llama2 docs to export the model.

After you export the model and generate tokenizer.bin, push them to the device:

adb shell mkdir -p /data/local/tmp/llama

adb push llama2.pte /data/local/tmp/llama

adb push tokenizer.bin /data/local/tmp/llama

Note: The demo app searches /data/local/tmp/llama for .pte and .bin files to use as the LLaMA model and tokenizer, respectively.
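The push steps above can be wrapped so that a missing or empty artifact is caught before anything is sent to the device. This is a sketch under assumptions: `check_artifacts` and `push_llama_artifacts` are hypothetical names, and the file names passed in are whatever your export step produced.

```shell
# Hypothetical helpers, not part of the demo app.
DEVICE_DIR=/data/local/tmp/llama

# Fail if any of the given local files is missing or empty.
check_artifacts() {
  for f in "$@"; do
    if [ ! -s "$f" ]; then
      echo "missing or empty file: $f" >&2
      return 1
    fi
  done
}

# Usage: push_llama_artifacts <model.pte> <tokenizer.bin>
push_llama_artifacts() {
  check_artifacts "$1" "$2" || return 1
  adb shell mkdir -p "$DEVICE_DIR" &&
    adb push "$1" "$DEVICE_DIR" &&
    adb push "$2" "$DEVICE_DIR" &&
    adb shell ls -l "$DEVICE_DIR"  # list the directory to confirm the upload
}
```

For example, `push_llama_artifacts llama2.pte tokenizer.bin` runs the same three adb commands as above and then lists the device directory.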

Build library

For the demo app to build, we need to build the ExecuTorch AAR library first.

The AAR library contains the required Java package and the corresponding JNI library for using ExecuTorch in your Android app.

Alternative 1: Use prebuilt AAR library (recommended)

Open a terminal window and navigate to the root directory of the executorch repo.

Run the following command to download the prebuilt library:

bash examples/demo-apps/android/LlamaDemo/download_prebuilt_lib.sh

The prebuilt AAR library contains the Java library, the JNI binding for NativePeer.java, and the ExecuTorch native library, which includes the core ExecuTorch runtime libraries, the XNNPACK backend, and the Portable, Optimized, and Quantized kernels. It comes in two ABI variants: arm64-v8a and x86_64.

If you want to use the prebuilt library for your own app, please refer to Using Android prebuilt libraries (AAR) for a tutorial.

If you need to use other dependencies (like a tokenizer), please refer to the Alternative 2: Build from local machine option.

Alternative 2: Build from local machine

Open a terminal window and navigate to the root directory of the executorch repo.

Set the following environment variables:

export ANDROID_NDK=<path_to_android_ndk>

export ANDROID_ABI=arm64-v8a

Note: <path_to_android_ndk> is the root of the NDK, which is usually under ~/Library/Android/sdk/ndk/XX.Y.ZZZZZ on macOS and contains NOTICE and README.md.

We use <path_to_android_ndk>/build/cmake/android.toolchain.cmake for CMake to cross-compile.
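Since the build depends on that toolchain file, a quick check that ANDROID_NDK really points at an NDK root can save a failed CMake run. The snippet below is a minimal sketch; `is_ndk_root` is a hypothetical helper name, not something provided by ExecuTorch or the NDK.

```shell
# Hypothetical helper, not part of ExecuTorch.
# Succeeds only if "$1" contains the CMake toolchain file used for cross-compiling.
is_ndk_root() {
  [ -n "$1" ] && [ -f "$1/build/cmake/android.toolchain.cmake" ]
}

# Warn early instead of failing later inside CMake.
if ! is_ndk_root "${ANDROID_NDK:-}"; then
  echo "ANDROID_NDK is unset or does not look like an NDK root" >&2
fi
```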

(Optional) If you need to use tiktoken as the tokenizer (for LLaMA3), set EXECUTORCH_USE_TIKTOKEN=ON, and CMake will later use it as the tokenizer. If you need to run other models like LLaMA2, skip this step.

export EXECUTORCH_USE_TIKTOKEN=ON # Only for LLaMA3

Build the Android Java extension code:

pushd extension/android

./gradlew build

popd

Run the following command to set up the required JNI library:

pushd examples/demo-apps/android/LlamaDemo

./gradlew :app:setup

popd

This runs the shell script setup.sh, which configures the required core ExecuTorch, LLaMA2, and Android libraries, builds them, and copies them to jniLibs.

Build APK

Alternative 1: Android Studio (Recommended)

Open Android Studio and select “Open an existing Android Studio project” to open examples/demo-apps/android/LlamaDemo.

Run the app (^R). This builds and launches the app on the phone.

Alternative 2: Command line

Without the Android Studio UI, we can run Gradle directly to build the app. We need to set the Android SDK path and then invoke Gradle.

export ANDROID_HOME=<path_to_android_sdk_home>

pushd examples/demo-apps/android/LlamaDemo

./gradlew :app:installDebug

popd
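Because `:app:installDebug` installs onto a connected phone or emulator, it can be useful to confirm that adb sees a ready device first. The following sketch assumes the usual `adb devices` output of one `<serial> <state>` pair per line; `count_ready_devices` is a hypothetical helper name.

```shell
# Hypothetical helper, not part of the demo app.
# Reads `adb devices` output on stdin and prints how many entries
# are in the "device" (ready) state.
count_ready_devices() {
  awk '$2 == "device" { n++ } END { print n + 0 }'
}

# Example usage before installing:
#   [ "$(adb devices | count_ready_devices)" -ge 1 ] || echo "no ready device" >&2
```

Entries reported as `unauthorized` or `offline` are not counted, so a result of 0 usually means the device still needs to be unlocked or USB debugging approved.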

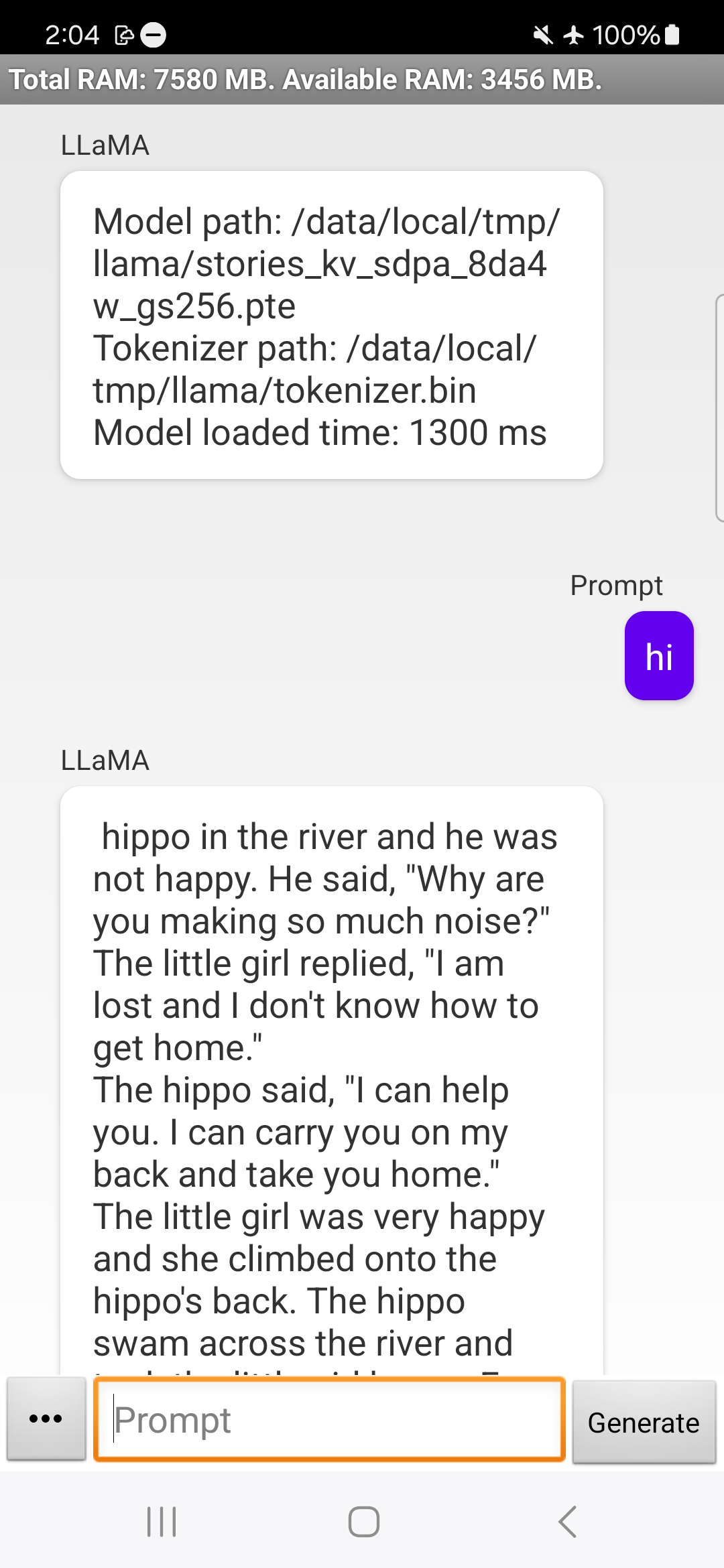

On the phone or emulator, you can try running the model:

Takeaways

Through this tutorial we’ve learnt how to build the ExecuTorch LLaMA library and expose it to the JNI layer to build the Android app.