Building ExecuTorch LLaMA iOS Demo App¶

This app demonstrates the use of the LLaMA chat app demonstrating local inference use case with ExecuTorch.

Prerequisites¶

Set up your ExecuTorch repo and environment if you haven’t done so by following the Setting up ExecuTorch to set up the repo and dev environment:

git clone -b release/0.2 https://github.com/pytorch/executorch.git

cd executorch

git submodule update --init

python3 -m venv .venv && source .venv/bin/activate

./install_requirements.sh

Exporting models¶

Please refer to the ExecuTorch Llama2 docs to export the model.

Run the App¶

Open the project in Xcode.

Run the app (cmd+R).

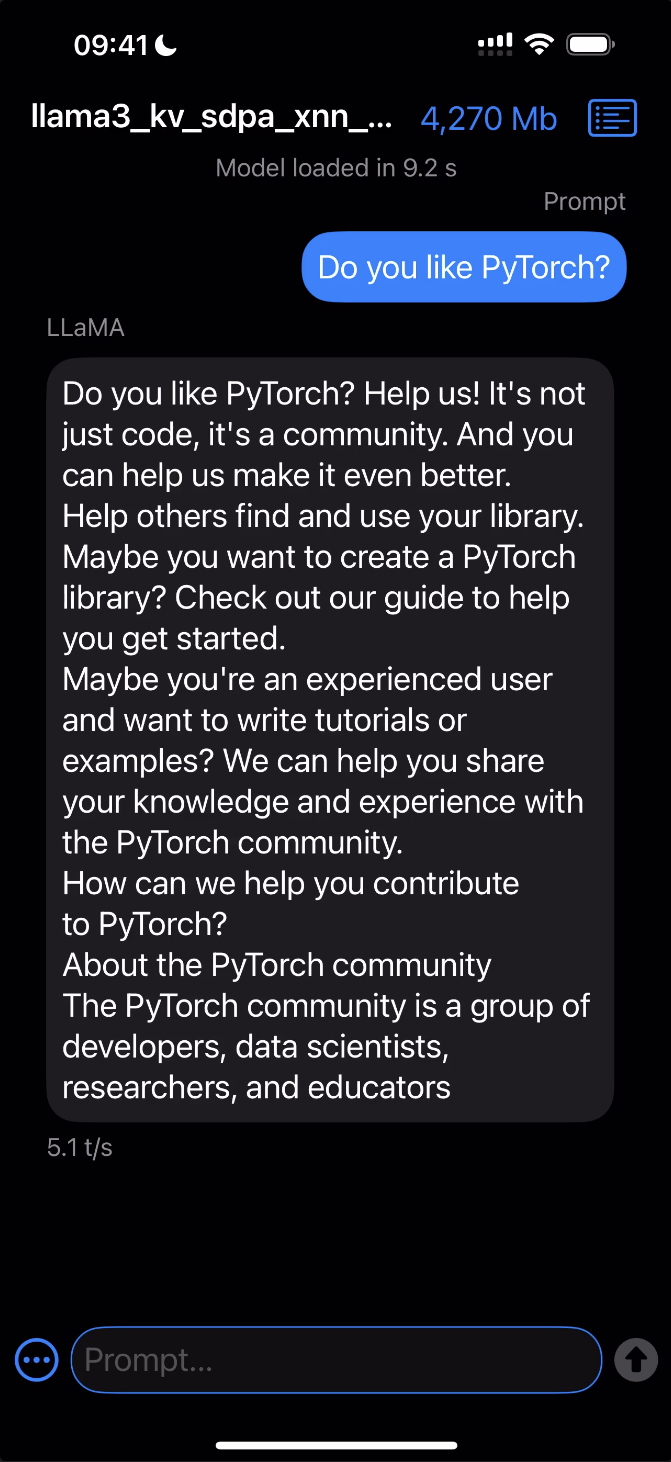

In app UI pick a model and tokenizer to use, type a prompt and tap the arrow buton

Note

ExecuTorch runtime is distributed as a Swift package providing some .xcframework as prebuilt binary targets. Xcode will dowload and cache the package on the first run, which will take some time.

Copy the model to Simulator¶

Drag&drop the model and tokenizer files onto the Simulator window and save them somewhere inside the iLLaMA folder.

Pick the files in the app dialog, type a prompt and click the arrow-up button.

Copy the model to Device¶

Wire-connect the device and open the contents in Finder.

Navigate to the Files tab and drag&drop the model and tokenizer files onto the iLLaMA folder.

Wait until the files are copied.

Click the image below to see it in action!