Since the release of PyTorch 1.0, we’ve seen the community expand to add new tools, contribute to a growing set of models available in the PyTorch Hub, and continually increase usage in both research and production.

From a core perspective, PyTorch has continued to add features to support both research and production usage, including the ability to bridge these two worlds via TorchScript. Today, we are excited to announce that we have four new releases including PyTorch 1.2, torchvision 0.4, torchaudio 0.3, and torchtext 0.4. You can get started now with any of these releases at pytorch.org.

PyTorch 1.2

With PyTorch 1.2, the open source ML framework takes a major step forward for production usage with the addition of an improved and more polished TorchScript environment. These improvements make it even easier to ship production models, expand support for exporting ONNX formatted models, and enhance module level support for Transformers. In addition to these new features, TensorBoard is no longer experimental: you can simply type from torch.utils.tensorboard import SummaryWriter to get started.

TorchScript Improvements

Since its release in PyTorch 1.0, TorchScript has provided a path to production for eager PyTorch models. The TorchScript compiler converts PyTorch models to a statically typed graph representation, opening up opportunities for optimization and execution in constrained environments where Python is not available. You can incrementally convert your model to TorchScript, mixing compiled code seamlessly with Python.
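As a minimal sketch of what the compiler does with Python control flow, the snippet below scripts a small function whose branch depends on the input data. The function name flip_if_negative is hypothetical and chosen only for illustration.

```python
import torch

def flip_if_negative(x: torch.Tensor) -> torch.Tensor:
    # Data-dependent branch: scripting keeps both paths in the compiled code
    if x.sum() > 0:
        return x
    else:
        return -x

# Compile the Python function to TorchScript
scripted = torch.jit.script(flip_if_negative)

positive = torch.tensor([1.0, 2.0])
negative = torch.tensor([-1.0, -2.0])

# The scripted function is called from Python just like the original
out_positive = scripted(positive)
out_negative = scripted(negative)
```

Because the scripted function is an ordinary callable, it can sit next to uncompiled Python code while the rest of a model is converted step by step.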

PyTorch 1.2 significantly expands TorchScript’s support for the subset of Python used in PyTorch models and delivers a new, easier-to-use API for compiling your models to TorchScript. See the migration guide for details. Below is an example usage of the new API:

    import torch

    class MyModule(torch.nn.Module):
        def __init__(self, N, M):
            super(MyModule, self).__init__()
            self.weight = torch.nn.Parameter(torch.rand(N, M))

        def forward(self, input):
            if input.sum() > 0:
                output = self.weight.mv(input)
            else:
                output = self.weight + input
            return output

    # Compile the model code to a static representation
    my_script_module = torch.jit.script(MyModule(3, 4))

    # Save the compiled code and model data so it can be loaded elsewhere
    my_script_module.save("my_script_module.pt")

To learn more, see our Introduction to TorchScript and Loading a PyTorch Model in C++ tutorials.

Expanded ONNX Export

The ONNX community continues to grow with an open governance structure and additional steering committee members, special interest groups (SIGs), and working groups (WGs). In collaboration with Microsoft, we’ve added full support to export ONNX Opset versions 7 (v1.2), 8 (v1.3), 9 (v1.4), and 10 (v1.5). We’ve also enhanced the constant folding pass to support Opset 10, the latest available version of ONNX. ScriptModule has also been improved, including support for multiple outputs, tensor factories, and tuples as inputs and outputs. Additionally, users are now able to register their own symbolic to export custom ops, and specify the dynamic dimensions of inputs during export. Here is a summary of all of the major improvements:

- Support for multiple Opsets including the ability to export dropout, slice, flip, and interpolate in Opset 10.

- Improvements to ScriptModule including support for multiple outputs, tensor factories, and tuples as inputs and outputs.

- More than a dozen additional PyTorch operators supported including the ability to export a custom operator.

- Many bug fixes and test infra improvements.

You can try out the latest tutorial here, contributed by @lara-hdr at Microsoft. A big thank you to the entire Microsoft team for all of their hard work to make this release happen!

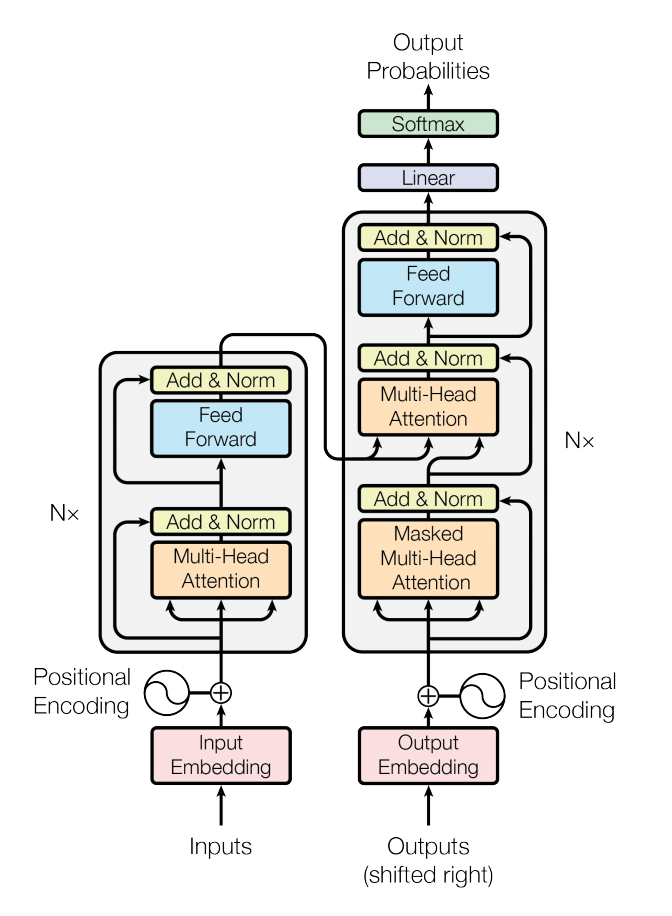

nn.Transformer

In PyTorch 1.2, we now include a standard nn.Transformer module, based on the paper “Attention is All You Need”. The nn.Transformer module relies entirely on an attention mechanism to draw global dependencies between input and output. The individual components of the nn.Transformer module are designed so they can be adopted independently. For example, the nn.TransformerEncoder can be used by itself, without the larger nn.Transformer. The new APIs include:

- nn.Transformer

- nn.TransformerEncoder and nn.TransformerEncoderLayer

- nn.TransformerDecoder and nn.TransformerDecoderLayer
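To illustrate using the encoder on its own, here is a minimal sketch that stacks two encoder layers and runs a random batch through them. The sizes (model width 32, 4 heads, sequence length 10, batch size 8) are arbitrary values picked for the example.

```python
import torch
import torch.nn as nn

# One encoder layer: self-attention followed by a feed-forward network
encoder_layer = nn.TransformerEncoderLayer(d_model=32, nhead=4)

# Stack two copies of the layer into a standalone encoder
encoder = nn.TransformerEncoder(encoder_layer, num_layers=2)

# Input layout is (sequence length, batch size, d_model)
src = torch.rand(10, 8, 32)
out = encoder(src)
```

The output keeps the same shape as the input, so the encoder can be dropped into a larger model without the decoder half.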

See the Transformer Layers documentation for more information. See here for the full PyTorch 1.2 release notes.

Domain API Library Updates

PyTorch domain libraries like torchvision, torchtext, and torchaudio provide convenient access to common datasets, models, and transforms that can be used to quickly create a state-of-the-art baseline. Moreover, they also provide common abstractions to reduce boilerplate code that users might have to otherwise repeatedly write. Since research domains have distinct requirements, an ecosystem of specialized libraries called domain APIs (DAPI) has emerged around PyTorch to simplify the development of new and existing algorithms in a number of fields. We’re excited to release three updated DAPI libraries for text, audio, and vision that complement the PyTorch 1.2 core release.

Torchaudio 0.3 with Kaldi Compatibility, New Transforms

Torchaudio specializes in machine understanding of audio waveforms. It is an ML library that provides relevant signal processing functionality (but is not a general signal processing library). It leverages PyTorch’s GPU support to provide many tools and transformations for waveforms to make data loading and standardization easier and more readable. For example, it offers data loaders for waveforms using sox, and transformations such as spectrograms, resampling, and mu-law encoding and decoding.
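For readers unfamiliar with mu-law encoding, the following plain-Python sketch shows the standard companding formula on a single sample. It is not torchaudio's implementation; the function names and the default of 256 quantization levels are assumptions made for illustration.

```python
import math

def mu_law_encode(x, quantization_channels=256):
    """Map a sample in [-1, 1] to an integer in [0, quantization_channels - 1]."""
    mu = quantization_channels - 1
    # Logarithmic compression that preserves the sign of the sample
    compressed = math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)
    # Shift from [-1, 1] to [0, mu] and round to the nearest integer level
    return int((compressed + 1) / 2 * mu + 0.5)

def mu_law_decode(q, quantization_channels=256):
    """Map an integer level back to an approximate sample in [-1, 1]."""
    mu = quantization_channels - 1
    y = 2 * q / mu - 1
    # Invert the logarithmic compression
    return math.copysign((math.exp(abs(y) * math.log1p(mu)) - 1) / mu, y)

level = mu_law_encode(0.5)
restored = mu_law_decode(level)
```

Quiet samples get finer resolution than loud ones, which is why the round trip is approximate rather than exact.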

We are happy to announce the availability of torchaudio 0.3.0, with a focus on standardization and complex numbers, a transformation (resample) and two new functionals (phase_vocoder, ISTFT), Kaldi compatibility, and a new tutorial. Torchaudio was redesigned to be an extension of PyTorch and a part of the domain APIs (DAPI) ecosystem.

Standardization

Significant effort in solving machine learning problems goes into data preparation. In this new release, we’ve updated torchaudio’s interfaces for its transformations to standardize around the following vocabulary and conventions.

Tensors are assumed to have channel as the first dimension and time as the last dimension (when applicable). This makes it consistent with PyTorch’s dimensions. For size names, the prefix n_ is used (e.g. “a tensor of size (n_freq, n_mel)”) whereas dimension names do not have this prefix (e.g. “a tensor of dimension (channel, time)”). The input of all transforms and functions now assumes channel first. This is done to be consistent with PyTorch, which has channel followed by the number of samples. The channel parameter of all transforms and functions is now deprecated.

The output of STFT is (channel, frequency, time, 2), meaning for each channel, the columns are the Fourier transform of a certain window, so as we travel horizontally we can see each column (the Fourier transformed waveform) change over time. This matches the output of librosa so we no longer need to transpose in our test comparisons with Spectrogram, MelScale, MelSpectrogram, and MFCC. Moreover, because of these new conventions, we deprecated LC2CL and BLC2CBL which were used to transfer from one shape of signal to another.
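With LC2CL and BLC2CBL deprecated, data stored in another layout can be rearranged with ordinary tensor operations. The sketch below assumes the layouts suggested by those names (L for length, C for channel, B for batch); the tensor names and sizes are made up for illustration.

```python
import torch

# Hypothetical waveform stored as (time, channel): 16000 samples, 2 channels
waveform_lc = torch.rand(16000, 2)

# Channel-first layout (channel, time), as the new conventions expect
waveform_cl = waveform_lc.transpose(0, 1).contiguous()

# Hypothetical batch stored as (batch, time, channel)
batch_blc = torch.rand(4, 16000, 2)

# Move channel to the front: (channel, batch, time)
batch_cbl = batch_blc.permute(2, 0, 1).contiguous()
```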

As part of this release, we’re also introducing support for complex numbers via tensors of dimension (…, 2), and providing magphase to convert such a tensor into its magnitude and phase, and similarly complex_norm and angle.
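The (…, 2) convention stores the real part and the imaginary part in the last dimension. As a rough sketch of the arithmetic behind magnitude and phase (written with plain PyTorch operations, not the torchaudio functions themselves), consider:

```python
import torch

# Two complex values, 3+4j and 0-2j, stored as (real, imag) pairs
complex_tensor = torch.tensor([[3.0, 4.0], [0.0, -2.0]])

real = complex_tensor[..., 0]
imag = complex_tensor[..., 1]

# Magnitude is the Euclidean norm of each (real, imag) pair
magnitude = torch.sqrt(real ** 2 + imag ** 2)

# Phase is the angle of each pair in radians
phase = torch.atan2(imag, real)
```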

The details of the standardization are provided in the README.

Functionals, Transformations, and Kaldi Compatibility

Prior to the standardization, we separated state and computation into torchaudio.transforms and torchaudio.functional.

As part of the transforms, we’re adding a new transformation in 0.3.0: Resample. Resample can upsample or downsample a waveform to a different frequency.

As part of the functionals, we’re introducing phase_vocoder, a phase vocoder to change the speed of a waveform without changing its pitch, and ISTFT, the inverse STFT implemented to be compatible with STFT provided by PyTorch. Separating state from computation allows us to make functionals weak scriptable and to utilize JIT in 0.3.0. We thus have JIT and CUDA support for the following transformations: Spectrogram, AmplitudeToDB (previously named SpectrogramToDB), MelScale, MelSpectrogram, MFCC, MuLawEncoding, MuLawDecoding (previously named MuLawExpanding).

We now also provide a compatibility interface with Kaldi to ease onboarding and reduce a user’s code dependency on Kaldi. We now have an interface for spectrogram, fbank, and resample_waveform.

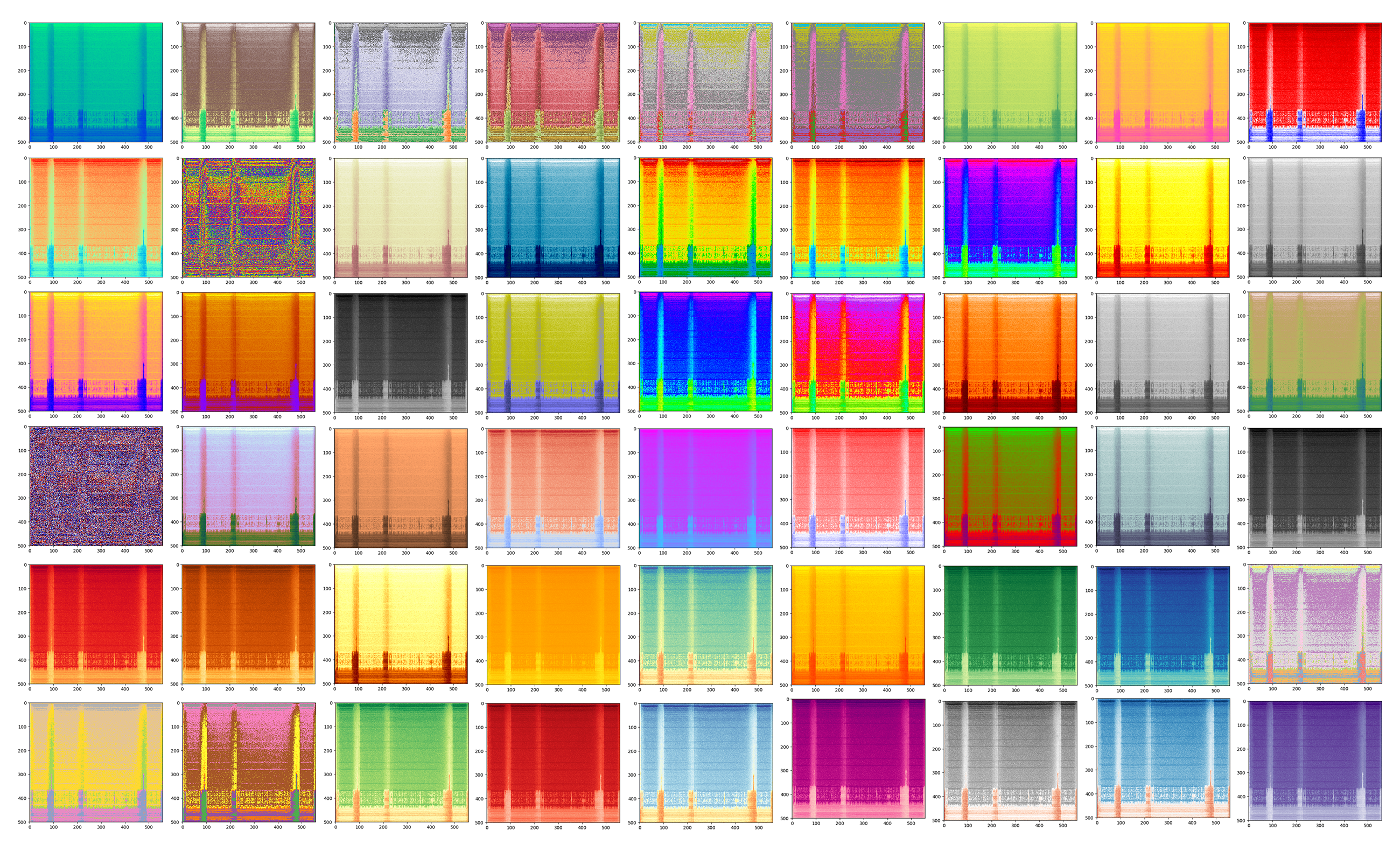

New Tutorial

To showcase the new conventions and transformations, we have a new tutorial demonstrating how to preprocess waveforms using torchaudio. This tutorial walks through an example of loading a waveform and applying some of the available transformations to it.

We are excited to see an active community around torchaudio and eager to further grow and support it. We encourage you to go ahead and experiment for yourself with this tutorial and the two datasets that are available: VCTK and YESNO! They have an interface to download the datasets and preprocess them in a convenient format. You can find the details in the release notes here.

Torchtext 0.4 with supervised learning datasets

A key focus area of torchtext is to provide the fundamental elements to help accelerate NLP research. This includes easy access to commonly used datasets and basic preprocessing pipelines for working on raw text based data. The torchtext 0.4.0 release includes several popular supervised learning baselines with “one-command” data loading. A tutorial is included to show how to use the new datasets for text classification analysis. We also added and improved on a few functions such as get_tokenizer and build_vocab_from_iterator to make it easier to implement future datasets. Additional examples can be found here.
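To give a sense of what building a vocabulary from an iterator involves, here is an independent plain-Python sketch. It is not torchtext's build_vocab_from_iterator, which returns its own vocabulary object; the function name build_vocab, the ordering rule, and the toy corpus are assumptions for illustration.

```python
from collections import Counter

def build_vocab(token_iterator, specials=("<unk>",)):
    """Count tokens from an iterator of token lists and assign integer ids."""
    counter = Counter()
    for tokens in token_iterator:
        counter.update(tokens)
    # Special tokens first, then by descending frequency (ties alphabetical)
    ordered = sorted(counter.items(), key=lambda item: (-item[1], item[0]))
    itos = list(specials) + [token for token, _ in ordered]
    stoi = {token: index for index, token in enumerate(itos)}
    return stoi, itos

corpus = ["the cat sat", "the dog sat", "the end"]
stoi, itos = build_vocab(line.split() for line in corpus)
```

Taking an iterator rather than a list means the corpus never has to be held in memory all at once.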

Text classification is an important task in Natural Language Processing with many applications, such as sentiment analysis. The new release includes several popular text classification datasets for supervised learning including:

- AG_NEWS

- SogouNews

- DBpedia

- YelpReviewPolarity

- YelpReviewFull

- YahooAnswers

- AmazonReviewPolarity

- AmazonReviewFull

Each dataset comes with two parts (train vs. test), and can be easily loaded with a single command. The datasets also support an ngrams feature to capture the partial information about the local word order. Take a look at the tutorial here to learn more about how to use the new datasets for supervised problems such as text classification analysis.

    from torchtext.datasets.text_classification import DATASETS

    train_dataset, test_dataset = DATASETS['AG_NEWS'](ngrams=2)
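The ngrams option can be pictured with a small plain-Python sketch that appends word n-grams to the original tokens. The helper name add_ngrams is hypothetical, and this is an illustration of the idea rather than torchtext's own code.

```python
def add_ngrams(tokens, ngrams):
    """Return the tokens followed by all n-grams for n = 2 .. ngrams."""
    output = list(tokens)
    for n in range(2, ngrams + 1):
        # Slide a window of n tokens over the sequence
        for start in range(len(tokens) - n + 1):
            output.append(" ".join(tokens[start:start + n]))
    return output

features = add_ngrams(["here", "we", "are"], ngrams=2)
```

With ngrams=2, adjacent word pairs become extra features, which is how a bag-of-words model picks up some local word order.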

In addition to the domain library, PyTorch provides many tools to make data loading easy. Users can now load and preprocess the text classification datasets with well-supported tools such as torch.utils.data.DataLoader and torch.utils.data.IterableDataset. Here are a few lines to wrap the data with DataLoader. More examples can be found here.

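    from torch.utils.data import DataLoader

    # generate_batch is a user-supplied collate function that merges a list of
    # samples into one batch; it is not defined in this snippet.
    data = DataLoader(train_dataset, collate_fn=generate_batch)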
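Since generate_batch is not shown above, here is one possible sketch of such a collate function. It assumes each sample is a (label, token id tensor) pair, and it uses a small in-memory list as a stand-in for the real dataset; both are assumptions for illustration.

```python
import torch
from torch.utils.data import DataLoader

def generate_batch(batch):
    """Merge variable-length samples into flat text, offsets, and labels."""
    labels = torch.tensor([label for label, _ in batch])
    lengths = [len(tokens) for _, tokens in batch]
    # Offsets mark where each sample starts inside the concatenated text
    offsets = torch.tensor([0] + lengths[:-1]).cumsum(dim=0)
    text = torch.cat([tokens for _, tokens in batch])
    return text, offsets, labels

# Toy stand-in for a text classification dataset
toy_dataset = [
    (0, torch.tensor([4, 7, 9])),
    (1, torch.tensor([2, 5])),
    (0, torch.tensor([8, 1, 3, 6])),
]

loader = DataLoader(toy_dataset, batch_size=3, shuffle=False,
                    collate_fn=generate_batch)
text, offsets, labels = next(iter(loader))
```

Concatenating the samples and tracking offsets avoids padding every sequence to the same length.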

Check out the release notes here to learn more and try out the tutorial here.

Torchvision 0.4 with Support for Video

Video is now a first-class citizen in torchvision, with support for data loading, datasets, pre-trained models, and transforms. The 0.4 release of torchvision includes:

- Efficient IO primitives for reading/writing video files (including audio), with support for arbitrary encodings and formats.

- Standard video datasets, compatible with torch.utils.data.Dataset and torch.utils.data.DataLoader.

- Pre-trained models built on the Kinetics-400 dataset for action classification on videos (including the training scripts).

- Reference training scripts for training your own video models.

We wanted working with video data in PyTorch to be as straightforward as possible, without compromising too much on performance. As such, we avoid the steps that would require re-encoding the videos beforehand, as it would involve:

- A preprocessing step which duplicates the dataset in order to re-encode it.

- An overhead in time and space because this re-encoding is time-consuming.

- Generally, an external script should be used to perform the re-encoding.

Additionally, we provide APIs such as the utility class, VideoClips, that simplifies the task of enumerating all possible clips of fixed size in a list of video files by creating an index of all clips in a set of videos. It also allows you to specify a fixed frame-rate for the videos. An example of the API is provided below:

    from torchvision.datasets.video_utils import VideoClips

    class MyVideoDataset(object):
        def __init__(self, video_paths):
            self.video_clips = VideoClips(video_paths,
                                          clip_length_in_frames=16,
                                          frames_between_clips=1,
                                          frame_rate=15)

        def __getitem__(self, idx):
            video, audio, info, video_idx = self.video_clips.get_clip(idx)
            return video, audio

        def __len__(self):
            return self.video_clips.num_clips()
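The idea of indexing every fixed-size clip across a set of videos can be sketched in plain Python. This is a simplified illustration, not torchvision's implementation: it ignores frame-rate resampling, and the function name and frame counts are hypothetical.

```python
def enumerate_clips(frames_per_video, clip_length, step):
    """Return a list of (video_index, start_frame) pairs, one per clip."""
    clips = []
    for video_index, num_frames in enumerate(frames_per_video):
        # A clip fits only if it ends at or before the last frame
        for start in range(0, num_frames - clip_length + 1, step):
            clips.append((video_index, start))
    return clips

# Three hypothetical videos with 20, 10, and 16 frames
clip_index = enumerate_clips([20, 10, 16], clip_length=16, step=1)
```

A flat index like this lets a dataset map an integer idx straight to a video and a starting frame, and videos shorter than the clip length simply contribute no clips.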

Most of the user-facing API is in Python, similar to PyTorch, which makes it easily extensible. Plus, the underlying implementation is fast — torchvision decodes as little as possible from the video on-the-fly in order to return a clip from the video.

Check out the torchvision 0.4 release notes here for more details.

We look forward to continuing our collaboration with the community and hearing your feedback as we further improve and expand the PyTorch deep learning platform.

We’d like to thank the entire PyTorch team and the community for all of the contributions to this work!