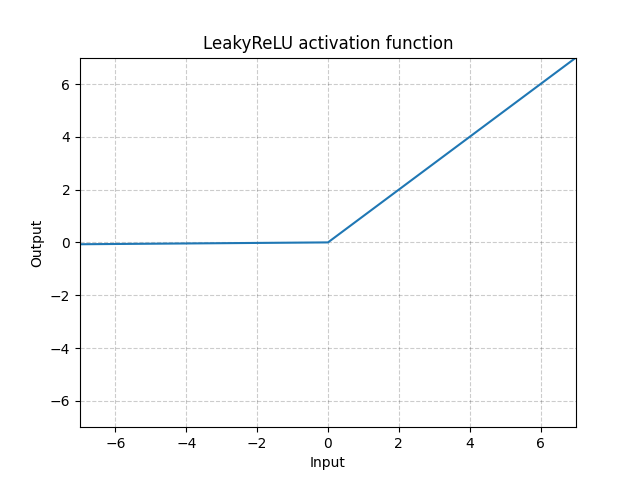

LeakyReLU

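class torch.nn.LeakyReLU(negative_slope=0.01, inplace=False)

Applies the element-wise function:

$$\text{LeakyReLU}(x) = \max(0, x) + \text{negative\_slope} \cdot \min(0, x)$$

or

$$\text{LeakyReLU}(x) = \begin{cases} x, & \text{if } x \geq 0 \\ \text{negative\_slope} \cdot x, & \text{otherwise} \end{cases}$$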
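The two forms of the definition are equivalent. The following is a minimal pure-Python sketch, not PyTorch's implementation, that writes out both forms for a single scalar and checks that they agree. The helper names `leaky_relu_max_min` and `leaky_relu_piecewise` are hypothetical and exist only for this illustration.

```python
def leaky_relu_max_min(x: float, negative_slope: float = 0.01) -> float:
    """First form: max(0, x) + negative_slope * min(0, x)."""
    return max(0.0, x) + negative_slope * min(0.0, x)


def leaky_relu_piecewise(x: float, negative_slope: float = 0.01) -> float:
    """Second form: x if x >= 0, otherwise negative_slope * x."""
    return x if x >= 0 else negative_slope * x


# Illustrative sample points covering negative, zero, and positive inputs.
samples = [-2.0, -0.5, 0.0, 0.5, 2.0]
for x in samples:
    # Both forms should give the same value on every input.
    assert leaky_relu_max_min(x, 0.1) == leaky_relu_piecewise(x, 0.1)
```

For non-negative inputs the `min` term vanishes and the value passes through unchanged; for negative inputs the `max` term vanishes and the value is scaled by `negative_slope`.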

- Parameters

negative_slope (float) – Controls the angle of the negative slope, i.e. the factor applied to negative input values. Default: 1e-2

inplace (bool) – If True, performs the operation in-place, overwriting the input tensor. Default: False
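As a rough illustration of the two parameters, the sketch below applies the module with and without `inplace=True`. The input values and the choice of `negative_slope=0.1` are arbitrary and chosen only to make the scaling easy to see.

```python
import torch
from torch import nn

x = torch.tensor([-2.0, -1.0, 0.0, 1.0, 2.0])

# Out-of-place (default): a new tensor is returned and x is left untouched.
out = nn.LeakyReLU(negative_slope=0.1)(x)

# In-place: the module overwrites its input and returns that same tensor.
y = x.clone()
out_inplace = nn.LeakyReLU(negative_slope=0.1, inplace=True)(y)

# True when the returned tensor shares memory with the modified input.
same_storage = out_inplace.data_ptr() == y.data_ptr()
```

In-place mode avoids allocating a second tensor, but the original input values are lost, so it is best used only when nothing else needs them.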

- Shape:

Input: $(N, *)$ where $*$ means any number of additional dimensions

Output: $(N, *)$, same shape as the input
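Because the function is applied element-wise, the output shape should always match the input shape. A small sketch that checks this for a few input shapes follows; the shapes themselves are hypothetical and chosen only for illustration.

```python
import torch
from torch import nn

m = nn.LeakyReLU()  # default negative_slope=0.01

# Illustrative shapes: a vector, a matrix, and an image-like 4-D batch.
shapes = [(4,), (4, 3), (4, 3, 8, 8)]

# Map each input shape to the shape of the corresponding output.
results = {shape: tuple(m(torch.randn(*shape)).shape) for shape in shapes}
```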

Examples:

>>> import torch
>>> from torch import nn
>>> m = nn.LeakyReLU(0.1)
>>> input = torch.randn(2)
>>> output = m(input)