ToDtype

- class torchvision.transforms.v2.ToDtype(dtype: Union[dtype, Dict[Union[Type, str], Optional[dtype]]], scale: bool = False)[source]

[BETA] Converts the input to a specific dtype, optionally scaling the values for images or videos.

Note

The ToDtype transform is in Beta stage, and while we do not expect disruptive breaking changes, some APIs may slightly change according to user feedback. Please submit any feedback you may have in this issue: https://github.com/pytorch/vision/issues/6753.

Note

ToDtype(dtype, scale=True)is the recommended replacement forConvertImageDtype(dtype).- Parameters:

dtype (

torch.dtypeor dict ofTVTensor->torch.dtype) – The dtype to convert to. If atorch.dtypeis passed, e.g.torch.float32, only images and videos will be converted to that dtype: this is for compatibility withConvertImageDtype. A dict can be passed to specify per-tv_tensor conversions, e.g.dtype={tv_tensors.Image: torch.float32, tv_tensors.Mask: torch.int64, "others":None}. The “others” key can be used as a catch-all for any other tv_tensor type, andNonemeans no conversion.scale (bool, optional) – Whether to scale the values for images or videos. See Dtype and expected value range. Default:

False.

Examples using

ToDtype:

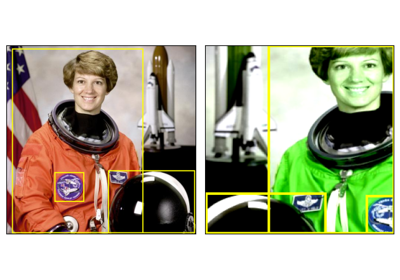

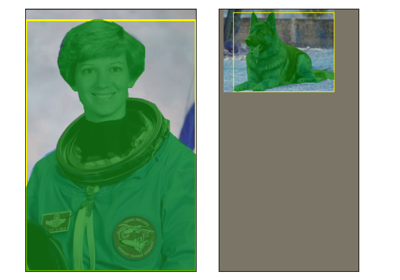

Transforms v2: End-to-end object detection/segmentation example

Transforms v2: End-to-end object detection/segmentation example