RandomResizedCrop

- class torchvision.transforms.v2.RandomResizedCrop(size: Union[int, Sequence[int]], scale: Tuple[float, float] = (0.08, 1.0), ratio: Tuple[float, float] = (0.75, 1.3333333333333333), interpolation: Union[InterpolationMode, int] = InterpolationMode.BILINEAR, antialias: Optional[Union[str, bool]] = 'warn')

[BETA] Crop a random portion of the input and resize it to a given size.

Note

The RandomResizedCrop transform is in Beta stage, and while we do not expect disruptive breaking changes, some APIs may slightly change according to user feedback. Please submit any feedback you may have in this issue: https://github.com/pytorch/vision/issues/6753.

If the input is a torch.Tensor or a TVTensor (e.g. Image, Video, BoundingBoxes etc.), it can have an arbitrary number of leading batch dimensions. For example, the image can have [..., C, H, W] shape. A bounding box can have [..., 4] shape.

A crop of the original input is made: the crop has a random area (H * W) and a random aspect ratio. This crop is finally resized to the given size. This transform is commonly used to train Inception networks.

- Parameters:

size (int or sequence) – Expected output size of the crop, for each edge. If size is an int instead of a sequence like (h, w), a square output size (size, size) is made. If provided a sequence of length 1, it will be interpreted as (size[0], size[0]).

Note

In torchscript mode, size as a single int is not supported; use a sequence of length 1: [size, ].

scale (tuple of python:float, optional) – Specifies the lower and upper bounds for the random area of the crop, before resizing. The scale is defined with respect to the area of the original image. Default: (0.08, 1.0).

ratio (tuple of python:float, optional) – Lower and upper bounds for the random aspect ratio (width / height) of the crop, before resizing. Default: (3/4, 4/3).
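To make the interaction of `scale` and `ratio` concrete, here is a pure-Python sketch of how one crop box could be sampled: the area fraction is drawn uniformly from `scale`, the aspect ratio (width / height) is drawn log-uniformly from `ratio`, and if no valid box is found after a fixed number of attempts, a centered crop is used instead. The helper `sample_crop_box`, the 10-attempt limit, and the fallback rule are assumptions modeled on our reading of torchvision's implementation; treat this as illustrative, not as the library's code.

```python
import math
import random


def sample_crop_box(height, width, scale=(0.08, 1.0), ratio=(3 / 4, 4 / 3),
                    attempts=10, rng=random):
    """Hypothetical helper: return (top, left, crop_h, crop_w) for one random crop."""
    area = height * width
    log_lo, log_hi = math.log(ratio[0]), math.log(ratio[1])
    for _ in range(attempts):
        # Random area, as a fraction of the original image area.
        target_area = area * rng.uniform(scale[0], scale[1])
        # Random aspect ratio (width / height), uniform in log space.
        aspect = math.exp(rng.uniform(log_lo, log_hi))
        crop_w = int(round(math.sqrt(target_area * aspect)))
        crop_h = int(round(math.sqrt(target_area / aspect)))
        if 0 < crop_w <= width and 0 < crop_h <= height:
            # randint is inclusive, so the box always fits inside the image.
            top = rng.randint(0, height - crop_h)
            left = rng.randint(0, width - crop_w)
            return top, left, crop_h, crop_w
    # Fallback: a centered crop whose aspect ratio is clamped into `ratio`.
    in_ratio = width / height
    if in_ratio < min(ratio):
        crop_w, crop_h = width, int(round(width / min(ratio)))
    elif in_ratio > max(ratio):
        crop_h, crop_w = height, int(round(height * max(ratio)))
    else:
        crop_h, crop_w = height, width
    return (height - crop_h) // 2, (width - crop_w) // 2, crop_h, crop_w


rng = random.Random(0)
top, left, crop_h, crop_w = sample_crop_box(256, 320, rng=rng)
print(top, left, crop_h, crop_w)
```

The selected region would then be resized to `size`, so the sampled box only controls which content ends up in the output, not the output shape.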

interpolation (InterpolationMode, optional) – Desired interpolation enum defined by torchvision.transforms.InterpolationMode. Default is InterpolationMode.BILINEAR. If input is a Tensor, only InterpolationMode.NEAREST, InterpolationMode.NEAREST_EXACT, InterpolationMode.BILINEAR and InterpolationMode.BICUBIC are supported. The corresponding Pillow integer constants, e.g. PIL.Image.BILINEAR, are accepted as well.

antialias (bool, optional) –

Whether to apply antialiasing. It only affects tensors with bilinear or bicubic modes and is ignored otherwise: on PIL images, antialiasing is always applied in bilinear or bicubic modes; in other modes (for PIL images and tensors), antialiasing makes no sense and this parameter is ignored. Possible values are:

- True: will apply antialiasing for bilinear or bicubic modes. Other modes aren't affected. This is probably what you want to use.
- False: will not apply antialiasing for tensors in any mode. PIL images are still antialiased in bilinear or bicubic modes, because PIL doesn't support disabling antialiasing.
- None: equivalent to False for tensors and True for PIL images. This value exists for legacy reasons and you probably don't want to use it unless you really know what you are doing.

The current default is None but will change to True in v0.17 for the PIL and Tensor backends to be consistent.

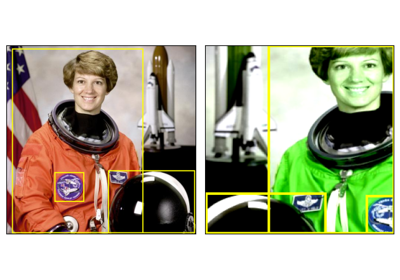

Examples using RandomResizedCrop: