Compose

- class torchvision.transforms.v2.Compose(transforms: Sequence[Callable])[source]

[BETA] Composes several transforms together.

Note

The Compose transform is in Beta stage, and while we do not expect disruptive breaking changes, some APIs may slightly change according to user feedback. Please submit any feedback you may have in this issue: https://github.com/pytorch/vision/issues/6753.

This transform does not support TorchScript. See the note below.

- Parameters:

transforms (list of Transform objects) – list of transforms to compose.

Example

>>> transforms.Compose([
>>>     transforms.CenterCrop(10),
>>>     transforms.PILToTensor(),
>>>     transforms.ConvertImageDtype(torch.float),
>>> ])

Note

In order to script the transformations, please use torch.nn.Sequential as below.

>>> transforms = torch.nn.Sequential(
>>>     transforms.CenterCrop(10),
>>>     transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225)),
>>> )
>>> scripted_transforms = torch.jit.script(transforms)

Make sure to use only scriptable transformations, i.e. ones that work with torch.Tensor and do not require lambda functions or PIL.Image.

Examples using Compose:

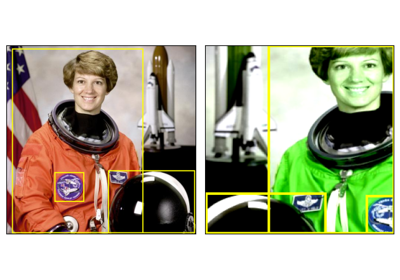

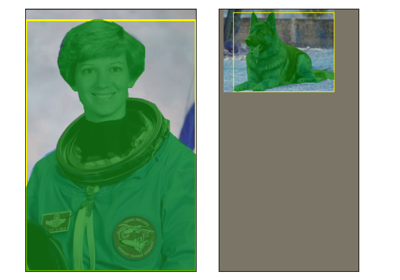

Transforms v2: End-to-end object detection/segmentation example

- extra_repr() → str[source]

Set the extra representation of the module.

To print customized extra information, you should re-implement this method in your own modules. Both single-line and multi-line strings are acceptable.
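To illustrate re-implementing this method, here is a small sketch using a hypothetical module named `Scale` (not part of torchvision). Its `extra_repr` returns a single-line string, which `torch.nn.Module` places inside the parentheses of the printed representation.

```python
import torch


class Scale(torch.nn.Module):
    """Hypothetical module that multiplies its input by a constant factor."""

    def __init__(self, factor: float):
        super().__init__()
        self.factor = factor

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return x * self.factor

    def extra_repr(self) -> str:
        # Shown inside the parentheses when the module is printed.
        return f"factor={self.factor}"


scale = Scale(2.0)
print(scale)  # Scale(factor=2.0)
```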

- forward(*inputs: Any) → Any[source]

Define the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for the forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this, since the former takes care of running the registered hooks while the latter silently ignores them.
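The difference described in this note can be seen with a short sketch. It uses `torch.nn.Identity` and a forward hook that records each time it fires; the names `calls`, `layer`, and `record_call` are illustrative.

```python
import torch

calls = []
layer = torch.nn.Identity()


def record_call(module, args, output):
    # Forward hooks run only when the module instance itself is called.
    calls.append("hook")


handle = layer.register_forward_hook(record_call)
x = torch.zeros(2)

layer(x)                 # goes through __call__, so the hook fires
calls_after_instance = len(calls)

layer.forward(x)         # bypasses __call__, so the hook is skipped
calls_after_forward = len(calls)

handle.remove()
print(calls_after_instance, calls_after_forward)  # 1 1
```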