StreamReader

- class torchaudio.io.StreamReader(src: Union[str, BinaryIO], format: Optional[str] = None, option: Optional[Dict[str, str]] = None, buffer_size: int = 4096)

Fetch and decode audio/video streams chunk by chunk.

For the detailed usage of this class, please refer to the tutorial.

- Parameters:

src (str, file-like object) –

The media source. If a string, it must be a resource indicator that FFmpeg can handle. This includes a file path, URL, device identifier or filter expression. The supported values depend on the FFmpeg build found in the system.

If a file-like object, it must support a read method with the signature read(size: int) -> bytes. Additionally, if the file-like object has a seek method, that method is used when parsing media metadata, which improves the reliability of codec detection. The signature of the seek method must be seek(offset: int, whence: int) -> int.

Please refer to the Python io module documentation (io.BufferedIOBase.read and io.IOBase.seek) for the expected signature and behavior of the read and seek methods.

format (str or None, optional) –

Override the input format, or specify the source sound device. Default: None (neither format override nor device input).

This argument serves two different use cases.

- Override the source format. This is useful when the input data do not contain a header.
- Specify the input source device. This allows loading media streams from hardware devices, such as a microphone, camera or screen, or from a virtual device.

Note

This option roughly corresponds to the -f option of the ffmpeg command. Please refer to the ffmpeg documentation for the possible values.

https://ffmpeg.org/ffmpeg-formats.html#Demuxers

Please use get_demuxers() to list the demultiplexers available in the current environment.

For device access, the available values vary based on hardware (AV device) and software configuration (ffmpeg build).

https://ffmpeg.org/ffmpeg-devices.html#Input-Devices

Please use get_input_devices() to list the input devices available in the current environment.

option (dict of str to str, optional) –

Custom options passed when initializing the format context (opening the source).

You can use this argument to change the input source before it is passed to the decoder.

Default: None.

buffer_size (int) –

The internal buffer size in bytes. Used only when src is a file-like object.

Default: 4096.
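As a minimal sketch of the file-like object requirements above, the wrapper below exposes read(size) and seek(offset, whence) with the documented signatures. CountingReader and open_reader are hypothetical names used only for illustration; open_reader is the only part that needs torchaudio, and it imports it lazily so the wrapper can be tried on its own.

```python
import io
from typing import BinaryIO


class CountingReader:
    """Hypothetical file-like wrapper that StreamReader could consume.

    It forwards read() and seek() to an underlying binary stream and keeps a
    running count of the bytes handed out.
    """

    def __init__(self, fileobj: BinaryIO) -> None:
        self._f = fileobj
        self.bytes_read = 0

    def read(self, size: int) -> bytes:
        # Required signature: read(size: int) -> bytes
        data = self._f.read(size)
        self.bytes_read += len(data)
        return data

    def seek(self, offset: int, whence: int = io.SEEK_SET) -> int:
        # Optional, but lets metadata parsing seek: seek(offset, whence) -> int
        return self._f.seek(offset, whence)


def open_reader(fileobj: BinaryIO, buffer_size: int = 4096):
    """Hypothetical helper: wrap fileobj and hand it to StreamReader."""
    # Deferred import so CountingReader is usable without torchaudio installed.
    from torchaudio.io import StreamReader

    return StreamReader(CountingReader(fileobj), buffer_size=buffer_size)
```

For example, open_reader(open("input.mp4", "rb")) would let StreamReader pull data through the wrapper; input.mp4 is a placeholder path.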

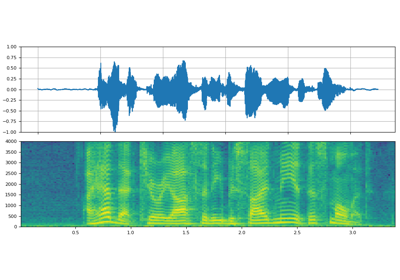

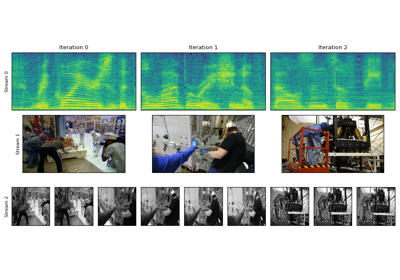

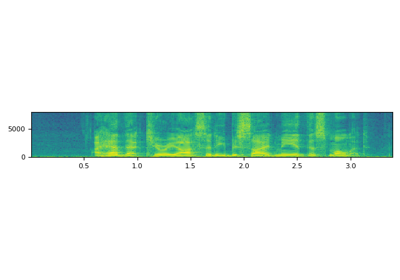

- Tutorials using

StreamReader:

Properties

default_audio_stream

- property StreamReader.default_audio_stream

The index of the default audio stream. None if there is no audio stream.

- Type:

Optional[int]

default_video_stream

- property StreamReader.default_video_stream

The index of the default video stream. None if there is no video stream.

- Type:

Optional[int]

num_out_streams

num_src_streams

Methods

add_audio_stream

- StreamReader.add_audio_stream(frames_per_chunk: int, buffer_chunk_size: int = 3, *, stream_index: Optional[int] = None, decoder: Optional[str] = None, decoder_option: Optional[Dict[str, str]] = None, filter_desc: Optional[str] = None)

Add output audio stream

- Parameters:

frames_per_chunk (int) –

Number of frames returned as one chunk. If the source stream is exhausted before enough frames are buffered, then the chunk is returned as-is.

Providing -1 disables chunking, and the pop_chunks() method will concatenate all the buffered frames and return them.

buffer_chunk_size (int, optional) –

Internal buffer size. When the number of buffered chunks exceeds this number, old frames are dropped. For example, if frames_per_chunk is 5 and buffer_chunk_size is 3, then frames older than the most recent 15 are dropped. Providing -1 disables this behavior.

Default: 3.

stream_index (int or None, optional) – The source audio stream index. If omitted, default_audio_stream is used.

decoder (str or None, optional) –

The name of the decoder to be used. When provided, use the specified decoder instead of the default one.

To list the available decoders, please use get_audio_decoders() for audio, and get_video_decoders() for video.

Default: None.

decoder_option (dict or None, optional) –

Options passed to the decoder. Mapping from str to str. (Default: None)

To list decoder options for a decoder, you can use the ffmpeg -h decoder=<DECODER> command.

In addition to decoder-specific options, you can also pass options related to multithreading. They are effective only if the decoder supports them. If neither of them is provided, StreamReader defaults to a single thread.

- "threads": The number of threads (in str). Providing the value "0" will let FFmpeg decide based on its heuristics.
- "thread_type": Which multithreading method to use. The valid values are "frame" or "slice". Note that each decoder supports a different set of methods. If not provided, a default value is used.
  - "frame": Decode more than one frame at once. Each thread handles one frame. This will increase decoding delay by one frame per thread.
  - "slice": Decode more than one part of a single frame at once.

filter_desc (str or None, optional) – Filter description. The list of available filters can be found at https://ffmpeg.org/ffmpeg-filters.html. Note that complex filters are not supported.
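The interaction between frames_per_chunk and buffer_chunk_size described above can be modelled in a few lines of plain Python. This is a simplified sketch, not torchaudio's implementation: it assumes the buffer holds at most frames_per_chunk * buffer_chunk_size frames and drops the oldest first, and simulate_buffer is a hypothetical name.

```python
from collections import deque
from typing import Iterable, List


def simulate_buffer(frames: Iterable[int], frames_per_chunk: int,
                    buffer_chunk_size: int) -> List[int]:
    """Simplified model of one output stream's frame buffer (hypothetical).

    Assumes at most frames_per_chunk * buffer_chunk_size frames are kept and
    that the oldest frames are discarded first. A value of -1 for either
    argument is modelled as an unbounded buffer.
    """
    if frames_per_chunk == -1 or buffer_chunk_size == -1:
        buf = deque()
    else:
        buf = deque(maxlen=frames_per_chunk * buffer_chunk_size)
    for frame in frames:
        # A bounded deque silently drops its leftmost (oldest) item when full.
        buf.append(frame)
    return list(buf)
```

With the numbers from the example (5 and 3), feeding frames 0 through 19 leaves frames 5 through 19, i.e. the most recent 15.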

add_basic_audio_stream

- StreamReader.add_basic_audio_stream(frames_per_chunk: int, buffer_chunk_size: int = 3, *, stream_index: Optional[int] = None, decoder: Optional[str] = None, decoder_option: Optional[Dict[str, str]] = None, format: Optional[str] = 'fltp', sample_rate: Optional[int] = None, num_channels: Optional[int] = None)

Add output audio stream

- Parameters:

frames_per_chunk (int) –

Number of frames returned as one chunk. If the source stream is exhausted before enough frames are buffered, then the chunk is returned as-is.

Providing -1 disables chunking, and the pop_chunks() method will concatenate all the buffered frames and return them.

buffer_chunk_size (int, optional) –

Internal buffer size. When the number of buffered chunks exceeds this number, old frames are dropped. For example, if frames_per_chunk is 5 and buffer_chunk_size is 3, then frames older than the most recent 15 are dropped. Providing -1 disables this behavior.

Default: 3.

stream_index (int or None, optional) – The source audio stream index. If omitted, default_audio_stream is used.

decoder (str or None, optional) –

The name of the decoder to be used. When provided, use the specified decoder instead of the default one.

To list the available decoders, please use get_audio_decoders() for audio, and get_video_decoders() for video.

Default: None.

decoder_option (dict or None, optional) –

Options passed to the decoder. Mapping from str to str. (Default: None)

To list decoder options for a decoder, you can use the ffmpeg -h decoder=<DECODER> command.

In addition to decoder-specific options, you can also pass options related to multithreading. They are effective only if the decoder supports them. If neither of them is provided, StreamReader defaults to a single thread.

- "threads": The number of threads (in str). Providing the value "0" will let FFmpeg decide based on its heuristics.
- "thread_type": Which multithreading method to use. The valid values are "frame" or "slice". Note that each decoder supports a different set of methods. If not provided, a default value is used.
  - "frame": Decode more than one frame at once. Each thread handles one frame. This will increase decoding delay by one frame per thread.
  - "slice": Decode more than one part of a single frame at once.

format (str, optional) –

Output sample format (precision).

If None, the output chunk has a dtype corresponding to the precision of the source audio.

Otherwise, the sample is converted and the output dtype is changed as follows.

- "u8p": The output is torch.uint8 type.
- "s16p": The output is torch.int16 type.
- "s32p": The output is torch.int32 type.
- "s64p": The output is torch.int64 type.
- "fltp": The output is torch.float32 type.
- "dblp": The output is torch.float64 type.

Default: "fltp".

sample_rate (int or None, optional) – If provided, resample the audio.

num_channels (int or None, optional) – If provided, change the number of channels.
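The sample format table above can be kept as a lookup when validating arguments before configuring a stream. This sketch assumes reader is an already-constructed StreamReader; SAMPLE_FORMAT_TO_DTYPE and add_mono_16k_stream are hypothetical names, and the dtypes are stored as strings so the snippet runs without torch.

```python
from typing import Optional

# Output sample formats accepted by add_basic_audio_stream and the dtype of
# the resulting chunks, transcribed from the parameter description above.
SAMPLE_FORMAT_TO_DTYPE = {
    "u8p": "torch.uint8",
    "s16p": "torch.int16",
    "s32p": "torch.int32",
    "s64p": "torch.int64",
    "fltp": "torch.float32",
    "dblp": "torch.float64",
}


def add_mono_16k_stream(reader, frames_per_chunk: int = 16000,
                        fmt: Optional[str] = "fltp") -> None:
    """Hypothetical helper: request 16 kHz mono audio from `reader`.

    fmt=None keeps the precision of the source audio.
    """
    if fmt is not None and fmt not in SAMPLE_FORMAT_TO_DTYPE:
        raise ValueError(f"unsupported sample format: {fmt!r}")
    reader.add_basic_audio_stream(
        frames_per_chunk=frames_per_chunk,
        format=fmt,
        sample_rate=16000,  # resample to 16 kHz
        num_channels=1,     # convert to a single channel
    )
```

With the default frames_per_chunk, each full chunk would then hold one second of audio.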

add_basic_video_stream

- StreamReader.add_basic_video_stream(frames_per_chunk: int, buffer_chunk_size: int = 3, *, stream_index: Optional[int] = None, decoder: Optional[str] = None, decoder_option: Optional[Dict[str, str]] = None, format: Optional[str] = 'rgb24', frame_rate: Optional[int] = None, width: Optional[int] = None, height: Optional[int] = None, hw_accel: Optional[str] = None)

Add output video stream

- Parameters:

frames_per_chunk (int) –

Number of frames returned as one chunk. If the source stream is exhausted before enough frames are buffered, then the chunk is returned as-is.

Providing -1 disables chunking, and the pop_chunks() method will concatenate all the buffered frames and return them.

buffer_chunk_size (int, optional) –

Internal buffer size. When the number of buffered chunks exceeds this number, old frames are dropped. For example, if frames_per_chunk is 5 and buffer_chunk_size is 3, then frames older than the most recent 15 are dropped. Providing -1 disables this behavior.

Default: 3.

stream_index (int or None, optional) – The source video stream index. If omitted, default_video_stream is used.

decoder (str or None, optional) –

The name of the decoder to be used. When provided, use the specified decoder instead of the default one.

To list the available decoders, please use get_audio_decoders() for audio, and get_video_decoders() for video.

Default: None.

decoder_option (dict or None, optional) –

Options passed to the decoder. Mapping from str to str. (Default: None)

To list decoder options for a decoder, you can use the ffmpeg -h decoder=<DECODER> command.

In addition to decoder-specific options, you can also pass options related to multithreading. They are effective only if the decoder supports them. If neither of them is provided, StreamReader defaults to a single thread.

- "threads": The number of threads (in str). Providing the value "0" will let FFmpeg decide based on its heuristics.
- "thread_type": Which multithreading method to use. The valid values are "frame" or "slice". Note that each decoder supports a different set of methods. If not provided, a default value is used.
  - "frame": Decode more than one frame at once. Each thread handles one frame. This will increase decoding delay by one frame per thread.
  - "slice": Decode more than one part of a single frame at once.

format (str, optional) –

Change the format of image channels. Valid values are:

- "rgb24": 8 bits * 3 channels (R, G, B)
- "bgr24": 8 bits * 3 channels (B, G, R)
- "yuv420p": 8 bits * 3 channels (Y, U, V)
- "gray": 8 bits * 1 channel

Default: "rgb24".

frame_rate (int or None, optional) – If provided, change the frame rate.

width (int or None, optional) – If provided, change the image width. Unit: pixel.

height (int or None, optional) – If provided, change the image height. Unit: pixel.

hw_accel (str or None, optional) –

Enable hardware acceleration.

When video is decoded on CUDA hardware, for example with decoder="h264_cuvid", passing a CUDA device indicator to hw_accel (e.g. hw_accel="cuda:0") makes StreamReader place the resulting frames directly on the specified CUDA device as a CUDA tensor.

If None, the frames are moved to CPU memory. Default: None.
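Since decoder_option values must be strings, a small helper can build the multithreading options described above. This is a sketch assuming reader is a StreamReader; threading_options and add_small_rgb_stream are hypothetical names, and "frame" threading is chosen only as an example (per the description above, it adds one frame of delay per thread).

```python
from typing import Dict, Optional


def threading_options(threads: int = 0,
                      thread_type: Optional[str] = None) -> Dict[str, str]:
    """Build a decoder_option dict for multithreaded decoding (hypothetical).

    All values are strings, as decoder_option requires. threads=0 asks FFmpeg
    to choose the thread count itself.
    """
    if thread_type not in (None, "frame", "slice"):
        raise ValueError(f"thread_type must be 'frame' or 'slice', got {thread_type!r}")
    opts = {"threads": str(threads)}
    if thread_type is not None:
        opts["thread_type"] = thread_type
    return opts


def add_small_rgb_stream(reader) -> None:
    """Hypothetical helper: one-second chunks of 30 fps, 320x240 RGB frames."""
    reader.add_basic_video_stream(
        frames_per_chunk=30,
        format="rgb24",
        frame_rate=30,
        width=320,
        height=240,
        decoder_option=threading_options(0, "frame"),
    )
```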

add_video_stream

- StreamReader.add_video_stream(frames_per_chunk: int, buffer_chunk_size: int = 3, *, stream_index: Optional[int] = None, decoder: Optional[str] = None, decoder_option: Optional[Dict[str, str]] = None, filter_desc: Optional[str] = None, hw_accel: Optional[str] = None)

Add output video stream

- Parameters:

frames_per_chunk (int) –

Number of frames returned as one chunk. If the source stream is exhausted before enough frames are buffered, then the chunk is returned as-is.

Providing -1 disables chunking, and the pop_chunks() method will concatenate all the buffered frames and return them.

buffer_chunk_size (int, optional) –

Internal buffer size. When the number of buffered chunks exceeds this number, old frames are dropped. For example, if frames_per_chunk is 5 and buffer_chunk_size is 3, then frames older than the most recent 15 are dropped. Providing -1 disables this behavior.

Default: 3.

stream_index (int or None, optional) – The source video stream index. If omitted, default_video_stream is used.

decoder (str or None, optional) –

The name of the decoder to be used. When provided, use the specified decoder instead of the default one.

To list the available decoders, please use get_audio_decoders() for audio, and get_video_decoders() for video.

Default: None.

decoder_option (dict or None, optional) –

Options passed to the decoder. Mapping from str to str. (Default: None)

To list decoder options for a decoder, you can use the ffmpeg -h decoder=<DECODER> command.

In addition to decoder-specific options, you can also pass options related to multithreading. They are effective only if the decoder supports them. If neither of them is provided, StreamReader defaults to a single thread.

- "threads": The number of threads (in str). Providing the value "0" will let FFmpeg decide based on its heuristics.
- "thread_type": Which multithreading method to use. The valid values are "frame" or "slice". Note that each decoder supports a different set of methods. If not provided, a default value is used.
  - "frame": Decode more than one frame at once. Each thread handles one frame. This will increase decoding delay by one frame per thread.
  - "slice": Decode more than one part of a single frame at once.

hw_accel (str or None, optional) –

Enable hardware acceleration.

When video is decoded on CUDA hardware, for example with decoder="h264_cuvid", passing a CUDA device indicator to hw_accel (e.g. hw_accel="cuda:0") makes StreamReader place the resulting frames directly on the specified CUDA device as a CUDA tensor.

If None, the frames are moved to CPU memory. Default: None.

filter_desc (str or None, optional) – Filter description. The list of available filters can be found at https://ffmpeg.org/ffmpeg-filters.html. Note that complex filters are not supported.
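filter_desc takes an FFmpeg filter chain, so conversions like those add_basic_video_stream exposes as arguments can also be written as a string here. The sketch assumes the standard fps, scale and format filters from the FFmpeg filter documentation; video_filter and add_cuda_h264_stream are hypothetical helpers, and the CUDA one additionally assumes an FFmpeg build that provides the h264_cuvid decoder and an H.264 source.

```python
from typing import Optional


def video_filter(frame_rate: Optional[int] = None,
                 width: Optional[int] = None,
                 height: Optional[int] = None,
                 pix_fmt: Optional[str] = None) -> Optional[str]:
    """Build a simple (non-complex) FFmpeg filter chain (hypothetical helper).

    Uses the fps, scale and format filters, joined with commas.
    Returns None when nothing is requested, i.e. no filtering.
    """
    parts = []
    if frame_rate is not None:
        parts.append(f"fps={frame_rate}")
    if width is not None and height is not None:
        parts.append(f"scale={width}:{height}")
    if pix_fmt is not None:
        parts.append(f"format=pix_fmts={pix_fmt}")
    return ",".join(parts) if parts else None


def add_cuda_h264_stream(reader, device: int = 0) -> None:
    """Hypothetical helper: decode H.264 with NVDEC and keep frames on the GPU."""
    reader.add_video_stream(
        frames_per_chunk=16,
        decoder="h264_cuvid",
        hw_accel=f"cuda:{device}",
    )
```

A CPU pipeline might then call reader.add_video_stream(8, filter_desc=video_filter(30, 640, 360, "gray")).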

fill_buffer

- StreamReader.fill_buffer(timeout: Optional[float] = None, backoff: float = 10.0) -> int

Keep processing packets until all buffers have at least one chunk.

- Parameters:

timeout (float or None, optional) – See process_packet(). (Default: None)

backoff (float, optional) – See process_packet(). (Default: 10.0)

- Returns:

- 0: Packets are processed properly and buffers are ready to be popped once.
- 1: The streamer reached EOF. All the output stream processors flushed the pending frames. The caller should stop calling this method.

- Return type:

int

get_metadata

get_out_stream_info

- StreamReader.get_out_stream_info(i: int) -> OutputStream

Get the metadata of the output stream.

- Parameters:

i (int) – Stream index.

- Returns:

Information about the output stream. If the output stream is audio type, then OutputAudioStream is returned. If it is video type, then OutputVideoStream is returned.

- Return type:

OutputStreamTypes

get_src_stream_info

- StreamReader.get_src_stream_info(i: int) -> InputStream

Get the metadata of the source stream.

- Parameters:

i (int) – Stream index.

- Returns:

Information about the source stream. If the source stream is audio type, then SourceAudioStream is returned. If it is video type, then SourceVideoStream is returned. Otherwise, the SourceStream class is returned.

- Return type:

InputStreamTypes

is_buffer_ready

pop_chunks

- StreamReader.pop_chunks() -> Tuple[Optional[ChunkTensor]]

Pop one chunk from all the output stream buffers.

- Returns:

Buffer contents. If a buffer does not contain any frame, then None is returned instead.

- Return type:

Tuple[Optional[ChunkTensor]]

process_all_packets

- StreamReader.process_all_packets()

Process packets until it reaches EOF.

process_packet

- StreamReader.process_packet(timeout: Optional[float] = None, backoff: float = 10.0) -> int

Read the source media and process one packet.

If a packet is read successfully, then the data in the packet will be decoded and passed to the corresponding output stream processors.

If the packet belongs to a source stream that is not connected to an output stream, then the data are discarded.

When the source reaches EOF, it triggers all the output stream processors to enter drain mode. All the output stream processors flush the pending frames.

- Parameters:

timeout (float or None, optional) –

Timeout in milliseconds.

This argument changes the retry behavior when processing a packet fails because the underlying media resource is temporarily unavailable.

When using a media device such as a microphone, there are cases where the underlying buffer is not ready. Calling this function in such a case would cause the system to report EAGAIN (resource temporarily unavailable).

- >= 0: Keep retrying until the given time passes.
- < 0: Keep retrying forever.
- None: No retrying; raise an exception immediately.

Default: None.

Note

The retry behavior applies only when the failure is caused by the resource being unavailable. It is not invoked for any other kind of failure.

backoff (float, optional) –

Time to wait before retrying, in milliseconds.

This option is effective only when timeout is effective (not None). In that case, backoff controls how long the function waits before retrying. Default: 10.0.

- Returns:

- 0: A packet was processed properly. The caller can keep calling this function to buffer more frames.
- 1: The streamer reached EOF. All the output stream processors flushed the pending frames. The caller should stop calling this method.

- Return type:

int
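The timeout and backoff rules can be modelled in pure Python to make the three timeout cases concrete. This is an illustrative model, not torchaudio's implementation: ResourceTemporarilyUnavailable is a hypothetical stand-in for the EAGAIN condition, call_with_retry is a hypothetical name, and both durations are in milliseconds, as documented.

```python
import time
from typing import Callable, Optional


class ResourceTemporarilyUnavailable(Exception):
    """Hypothetical stand-in for an EAGAIN failure."""


def call_with_retry(op: Callable[[], int], timeout: Optional[float] = None,
                    backoff: float = 10.0) -> int:
    """Model of the timeout/backoff rules described for process_packet().

    - timeout None: no retry, the first EAGAIN-like failure propagates.
    - timeout >= 0: retry until `timeout` milliseconds have elapsed.
    - timeout < 0: retry forever.
    Other exceptions are never retried.
    """
    start = time.monotonic()
    while True:
        try:
            return op()
        except ResourceTemporarilyUnavailable:
            if timeout is None:
                raise
            elapsed_ms = (time.monotonic() - start) * 1000.0
            if timeout >= 0 and elapsed_ms >= timeout:
                raise
            time.sleep(backoff / 1000.0)  # wait `backoff` ms before retrying
```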

remove_stream

seek

- StreamReader.seek(timestamp: float, mode: str = 'precise')

Seek the stream to the given timestamp (in seconds).

- Parameters:

timestamp (float) – Target time in seconds.

mode (str) –

Controls how seek is done. Valid choices are:

"key": Seek into the nearest key frame before the given timestamp.

"any": Seek into any frame (including non-key frames) before the given timestamp.

"precise": First seek into the nearest key frame before the given timestamp, then decode frames until it reaches the closest frame to the given timestamp.

Note

All the modes invalidate and reset the internal state of the decoder. When using "any" mode, if it ends up seeking into a non-key frame, the decoded image may be invalid due to the lack of a key frame. Using "precise" works around this issue by decoding frames from the previous key frame, but it is slower.
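A typical pattern is to seek and then fetch the next chunk from that position. This sketch assumes output streams were already added to reader and that reader is a StreamReader; read_from, VALID_SEEK_MODES and the _Recorder stub in the check are hypothetical names used for illustration.

```python
VALID_SEEK_MODES = ("key", "any", "precise")


def read_from(reader, timestamp: float, mode: str = "precise"):
    """Seek to `timestamp` seconds and return the next tuple of chunks (sketch).

    Assumes output streams were already added to `reader`.
    """
    if mode not in VALID_SEEK_MODES:
        raise ValueError(f"mode must be one of {VALID_SEEK_MODES}, got {mode!r}")
    reader.seek(timestamp, mode)
    reader.fill_buffer()  # decode until every output has a chunk (or EOF)
    return reader.pop_chunks()
```

"precise" is kept as the default here because, as the note above explains, it avoids invalid images at the cost of speed.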

stream

- StreamReader.stream(timeout: Optional[float] = None, backoff: float = 10.0) -> Iterator[Tuple[Optional[ChunkTensor], ...]]

Return an iterator that generates output tensors

- Parameters:

timeout (float or None, optional) – See process_packet(). (Default: None)

backoff (float, optional) – See process_packet(). (Default: 10.0)

- Returns:

Iterator that yields a tuple of chunks that correspond to the output streams defined by client code. If an output stream is exhausted, then the chunk Tensor is substituted with None. The iterator stops if all the output streams are exhausted.

- Return type:

Iterator[Tuple[Optional[ChunkTensor], …]]
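Because stream() yields one tuple per step, with None for exhausted outputs, aggregating over a whole file is a short loop. The sketch below counts decoded audio frames; count_audio_frames and total_frames are hypothetical names, it assumes audio chunks are shaped (frames, channels) as in the ChunkTensor example, and torchaudio is imported lazily so total_frames can be checked without it.

```python
from typing import Optional


def total_frames(chunks) -> int:
    """Sum the leading (time) dimension of every non-None first-output chunk."""
    return sum(len(c[0]) for c in chunks if c[0] is not None)


def count_audio_frames(src, frames_per_chunk: int = 4096,
                       sample_rate: Optional[int] = None) -> int:
    """Decode the default audio stream of `src` and count its frames (sketch)."""
    from torchaudio.io import StreamReader  # requires torchaudio at call time

    reader = StreamReader(src)
    reader.add_basic_audio_stream(frames_per_chunk, sample_rate=sample_rate)
    return total_frames(reader.stream())
```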

Support Structures

ChunkTensor

- class torchaudio.io._stream_reader.ChunkTensor

Decoded media frames with metadata.

An instance of this class represents the decoded video/audio frames with metadata, and the instance itself behaves like a Tensor.

Client code can pass an instance of this class as if it were a Tensor, or call the methods defined on the Tensor class.

Example

>>> import torch
>>> from torchaudio.io import StreamReader
>>> # Define input streams
>>> reader = StreamReader(...)
>>> reader.add_basic_audio_stream(frames_per_chunk=4000, sample_rate=8000)
>>> reader.add_basic_video_stream(frames_per_chunk=7, frame_rate=28)
>>> # Decode the streams and fetch frames
>>> reader.fill_buffer()
0
>>> audio_chunk, video_chunk = reader.pop_chunks()

>>> # Access metadata
>>> (audio_chunk.pts, video_chunk.pts)
(0.0, 0.0)
>>>
>>> # The second time the PTS is different
>>> reader.fill_buffer()
0
>>> audio_chunk, video_chunk = reader.pop_chunks()
>>> (audio_chunk.pts, video_chunk.pts)
(0.5, 0.25)

>>> # Call PyTorch ops on chunk
>>> audio_chunk.shape
torch.Size([4000, 2])
>>> power = torch.pow(video_chunk, 2)
>>>
>>> # the result is a plain torch.Tensor class
>>> type(power)
<class 'torch.Tensor'>
>>>
>>> # Metadata is not available on the result
>>> power.pts
Traceback (most recent call last):
  ...
AttributeError: 'Tensor' object has no attribute 'pts'


- pts: float

Presentation time stamp of the first frame in the chunk.

Unit: second.


SourceStream

- class torchaudio.io._stream_reader.SourceStream

The metadata of a source stream, returned by get_src_stream_info(). This class is used when representing streams of media type other than audio or video.

When the source stream is audio or video type, SourceAudioStream and SourceVideoStream, which report additional media-specific attributes, are used respectively.

- media_type: str

The type of the stream. One of "audio", "video", "data", "subtitle", "attachment" and the empty string.

Note

Only audio and video streams are supported for output.

Note

Still images, such as PNG and JPEG formats, are reported as video.

- codec: str

Short name of the codec, such as "pcm_s16le" and "h264".

- codec_long_name: str

Detailed name of the codec, such as "PCM signed 16-bit little-endian" and "H.264 / AVC / MPEG-4 AVC / MPEG-4 part 10".

- format: Optional[str]

Media format, such as "s16" and "yuv420p".

Commonly found audio values are:

- "u8", "u8p": 8-bit unsigned integer.
- "s16", "s16p": 16-bit signed integer.
- "s32", "s32p": 32-bit signed integer.
- "flt", "fltp": 32-bit floating-point.

Note

A trailing p indicates that the format is planar: the samples of each channel are grouped together instead of being interleaved in memory.

- bit_rate: Optional[int]

Bit rate of the stream in bits per second. This is an estimated value based on the first few frames of the stream. For container formats and variable bit rate, it can be 0.
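The audio format names listed above follow a regular pattern (base type plus an optional planar "p" suffix), so they can be decoded mechanically. This is a small sketch covering only the formats listed in this section; parse_sample_format is a hypothetical name, not a torchaudio function.

```python
def parse_sample_format(fmt: str) -> dict:
    """Split an FFmpeg audio sample format name into its parts (sketch).

    Covers the formats listed above: u8, s16, s32, flt and their planar
    "p"-suffixed variants.
    """
    base_bits = {"u8": 8, "s16": 16, "s32": 32, "flt": 32}
    planar = fmt.endswith("p")
    base = fmt[:-1] if planar else fmt
    if base not in base_bits:
        raise ValueError(f"unknown sample format: {fmt!r}")
    return {"bits": base_bits[base], "planar": planar, "float": base == "flt"}
```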

SourceAudioStream

- class torchaudio.io._stream_reader.SourceAudioStream

The metadata of an audio source stream, returned by get_src_stream_info(). This class is used when representing an audio stream.

In addition to the attributes reported by SourceStream, the following attributes are reported.

- sample_rate: float

Sample rate of the audio.

- num_channels: int

Number of channels.

SourceVideoStream

- class torchaudio.io._stream_reader.SourceVideoStream

The metadata of a video source stream, returned by get_src_stream_info(). This class is used when representing a video stream.

In addition to the attributes reported by SourceStream, the following attributes are reported.

- width: int

Width of the video frame in pixels.

- height: int

Height of the video frame in pixels.

- frame_rate: float

Frame rate.

OutputStream

- class torchaudio.io._stream_reader.OutputStream

Output stream configured on StreamReader, returned by get_out_stream_info().

- source_index: int

Index of the source stream that this output stream is connected to.

- filter_description: str

Description of filter graph applied to the source stream.

- media_type: str

The type of the stream: "audio" or "video".

- format: str

Media format, such as "s16" and "yuv420p".

Commonly found audio values are:

- "u8", "u8p": 8-bit unsigned integer.
- "s16", "s16p": 16-bit signed integer.
- "s32", "s32p": 32-bit signed integer.
- "flt", "fltp": 32-bit floating-point.

Note

A trailing p indicates that the format is planar: the samples of each channel are grouped together instead of being interleaved in memory.

OutputAudioStream

- class torchaudio.io._stream_reader.OutputAudioStream

Information about an audio output stream configured with add_audio_stream() or add_basic_audio_stream().

In addition to the attributes reported by OutputStream, the following attributes are reported.

- sample_rate: float

Sample rate of the audio.

- num_channels: int

Number of channels.

OutputVideoStream

- class torchaudio.io._stream_reader.OutputVideoStream

Information about a video output stream configured with add_video_stream() or add_basic_video_stream().

In addition to the attributes reported by OutputStream, the following attributes are reported.

- width: int

Width of the video frame in pixels.

- height: int

Height of the video frame in pixels.

- frame_rate: float

Frame rate.